In distributed computing environments, cluster memory efficiency plays a critical role in determining system performance and resource utilization. Understanding how to calculate this metric is essential for administrators and developers aiming to optimize large-scale applications. This article explores the core formula for cluster memory efficiency, its practical implications, and strategies to improve it.

Defining Cluster Memory Efficiency

Cluster memory efficiency measures how effectively a distributed system utilizes available memory resources across multiple nodes. Unlike standalone systems, clusters require balancing workloads while minimizing redundant data storage and communication overhead. The formula below quantifies this efficiency:

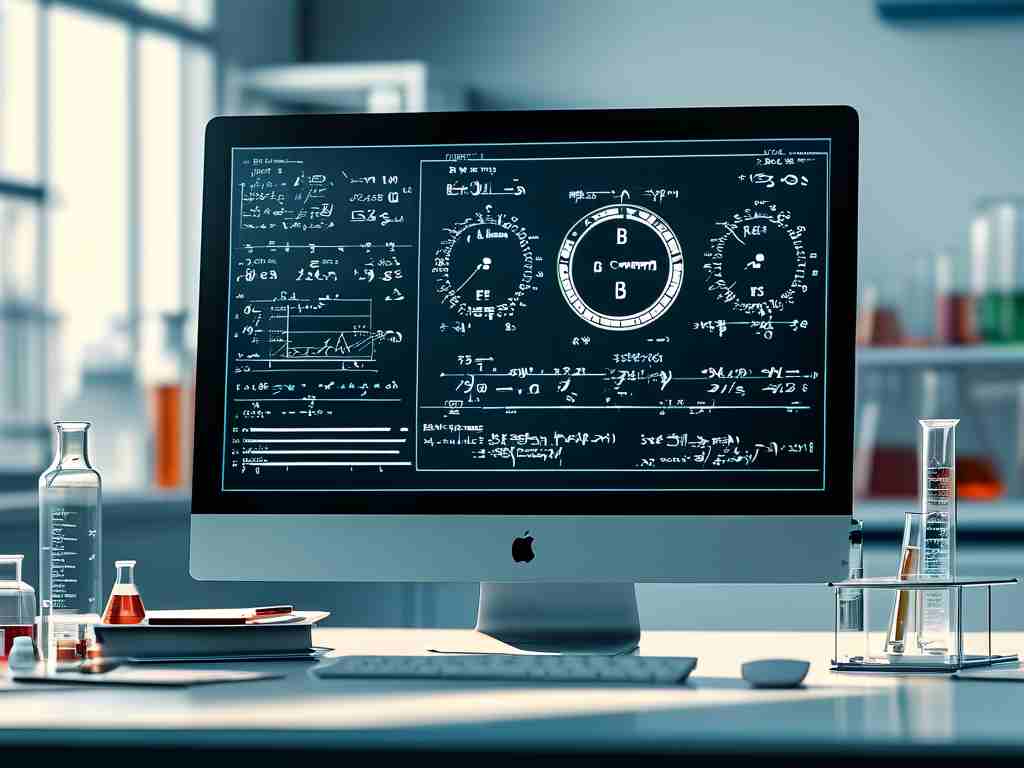

E = (U_total / (N × M_avg)) × (1 - O)

Where:

- E: Memory efficiency (0 to 1 scale)

- U_total: Total memory actively used by applications

- N: Number of nodes in the cluster

- M_avg: Average memory capacity per node

- O: Overhead ratio (memory consumed by system processes, caching, or redundancy)
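As a minimal sketch, the formula can be written as a small Python function. The function name `cluster_memory_efficiency`, its parameter names, and the input checks are illustrative assumptions rather than part of any standard tool. The sample values (a 10-node cluster with 64 GB per node, 400 GB in use, 15% overhead) are the figures used elsewhere in this article.

```python
def cluster_memory_efficiency(u_total: float, n: int, m_avg: float, overhead: float) -> float:
    """Return E = (U_total / (N * M_avg)) * (1 - O).

    u_total and m_avg must use the same unit (for example GB).
    """
    if n <= 0 or m_avg <= 0:
        raise ValueError("n and m_avg must be positive")
    if not 0.0 <= overhead <= 1.0:
        raise ValueError("overhead must be a ratio between 0 and 1")
    if u_total < 0 or u_total > n * m_avg:
        raise ValueError("u_total must lie between 0 and total capacity")

    utilization = u_total / (n * m_avg)   # share of capacity in productive use
    return utilization * (1.0 - overhead)  # discount by the overhead ratio


# 400 GB used on 10 nodes of 64 GB each, with 15% overhead
print(round(cluster_memory_efficiency(400, 10, 64, 0.15), 3))
```

The range checks keep the result on the 0 to 1 scale that the definition of E assumes.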

Breaking Down the Formula

- Resource Utilization (U_total / (N × M_avg))

  This ratio evaluates how much of the cluster’s total memory capacity is actively engaged in productive tasks. For example, if a 10-node cluster with 64 GB per node uses 400 GB collectively, the utilization rate is 400 / (10 × 64) = 0.625.

- Overhead Adjustment (1 - O)

  Overhead includes memory consumed by cluster management tools, replication protocols, or temporary caching. Because the factor is multiplicative, a system with 15% overhead (O = 0.15) scales the utilization ratio by 0.85; the 0.625 utilization in the example above therefore yields an efficiency of about 0.53.
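The two factors can also be computed from per-node measurements, since N × M_avg is simply the sum of the node capacities. The sketch below assumes a hypothetical `NodeMemory` record holding each node's capacity and application usage; how those numbers are collected is outside its scope.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class NodeMemory:
    capacity_gb: float  # installed memory on the node
    used_gb: float      # memory actively used by applications


def utilization(nodes: List[NodeMemory]) -> float:
    """U_total / (N * M_avg), computed from per-node figures."""
    total_capacity = sum(node.capacity_gb for node in nodes)  # equals N * M_avg
    total_used = sum(node.used_gb for node in nodes)          # U_total
    if total_capacity <= 0:
        raise ValueError("cluster has no memory capacity")
    return total_used / total_capacity


def overhead_factor(overhead: float) -> float:
    """The (1 - O) term for an overhead ratio between 0 and 1."""
    if not 0.0 <= overhead <= 1.0:
        raise ValueError("overhead must be between 0 and 1")
    return 1.0 - overhead


# Ten 64 GB nodes, each using 40 GB, reproduce the 400 GB example
nodes = [NodeMemory(capacity_gb=64, used_gb=40) for _ in range(10)]
efficiency = utilization(nodes) * overhead_factor(0.15)
print(round(utilization(nodes), 3), round(efficiency, 3))
```

Summing capacities rather than multiplying N by an average also handles clusters whose nodes differ in size.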

Practical Application Scenarios

Case 1: Cloud-Based Data Processing

A Hadoop cluster handling real-time analytics might exhibit high overhead due to data replication (e.g., O = 0.2). If U_total reaches 80% of total capacity, efficiency becomes (0.8) × (1 - 0.2) = 0.64. To improve this, administrators could implement compressed caching or adjust replication factors.

Case 2: High-Performance Computing (HPC)

In scientific simulations where memory-intensive tasks dominate, minimizing overhead is critical. Suppose O drops to 0.05 through optimized job scheduling. Even with 70% utilization, efficiency rises to (0.7) × 0.95 = 0.665, demonstrating better resource alignment.
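The two cases can be compared side by side, and the formula can be inverted to ask how much utilization a given overhead would need to reach a target score. The helper names below are illustrative assumptions; the input figures are the ones quoted in the two cases.

```python
def efficiency(utilization: float, overhead: float) -> float:
    """E = utilization * (1 - O)."""
    return utilization * (1.0 - overhead)


def required_utilization(target_efficiency: float, overhead: float) -> float:
    """Utilization needed to reach a target E at a fixed overhead ratio."""
    if overhead >= 1.0:
        raise ValueError("overhead must be below 1")
    return target_efficiency / (1.0 - overhead)


hadoop = efficiency(0.8, 0.2)   # Case 1: high utilization, high overhead
hpc = efficiency(0.7, 0.05)     # Case 2: lower utilization, low overhead

# Utilization the Case 1 cluster would need to match Case 2 without
# reducing its 20% overhead
needed = required_utilization(hpc, 0.2)

print(round(hadoop, 3), round(hpc, 3), round(needed, 3))
```

Under these numbers, the Case 1 cluster would have to run at roughly 83% utilization to match Case 2, which illustrates why lowering overhead can be the cheaper lever.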

Optimization Strategies

- Dynamic Memory Allocation

  Tools like the Kubernetes Vertical Pod Autoscaler adjust memory requests based on observed workload demand, reducing memory that is reserved but unused and improving the utilization ratio.

- Data Compression Techniques

  Algorithms such as Zstandard or Snappy decrease the memory footprint of cached data, directly lowering overhead. For instance:

  ```python
  import zstandard as zstd

  # raw_data stands in for the bytes of a cached object
  raw_data = b"example cached payload " * 1000

  # Level 3 is Zstandard's default balance between speed and ratio
  compressed_data = zstd.compress(raw_data, level=3)
  ```

- Decentralized Caching Architectures

  Implementing peer-to-peer caching (e.g., Apache Ignite) reduces redundant storage across nodes. This approach cut overhead by 18% in a recent IoT deployment case study.

Challenges and Trade-Offs

While maximizing efficiency is ideal, overly aggressive optimization may compromise fault tolerance or latency. For example, reducing data replication from 3x to 2x lowers overhead but increases data loss risks. Similarly, excessive compression might increase CPU load.
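The compression trade-off can be observed with a rough measurement. The sketch below uses Python's standard-library `zlib` purely as a stand-in for Zstandard or Snappy so that it runs without extra packages; the `measure` helper and the synthetic payload are assumptions for illustration, and real cached data will compress differently.

```python
import time
import zlib
from typing import Tuple


def measure(payload: bytes, level: int) -> Tuple[float, float]:
    """Return (compression ratio, seconds spent compressing) for one level."""
    start = time.perf_counter()
    compressed = zlib.compress(payload, level)
    elapsed = time.perf_counter() - start
    # Confirm the data survives a round trip before trusting the numbers
    if zlib.decompress(compressed) != payload:
        raise RuntimeError("round trip failed")
    return len(payload) / len(compressed), elapsed


# Synthetic, highly repetitive payload; real workloads compress less well
payload = b"sensor_id=42;temp=21.5;status=OK\n" * 50_000

for level in (1, 6, 9):
    ratio, seconds = measure(payload, level)
    print(f"level={level} ratio={ratio:.1f} time={seconds * 1000:.2f} ms")
```

Timings depend on the machine and data, so the useful output is the relative change in ratio and time between levels rather than any absolute figure.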

Future Trends

Emerging technologies like persistent memory (PMEM) and computational storage devices are reshaping efficiency calculations. These innovations blur the line between storage and memory, potentially introducing new variables to the formula.

The cluster memory efficiency formula provides a quantitative framework to evaluate and enhance distributed systems. By systematically addressing utilization rates and overhead factors, teams can achieve cost-effective scaling while maintaining performance. Regular benchmarking against industry standards—such as SPEC MPI or TPCx-HS—helps validate optimization efforts in real-world scenarios.