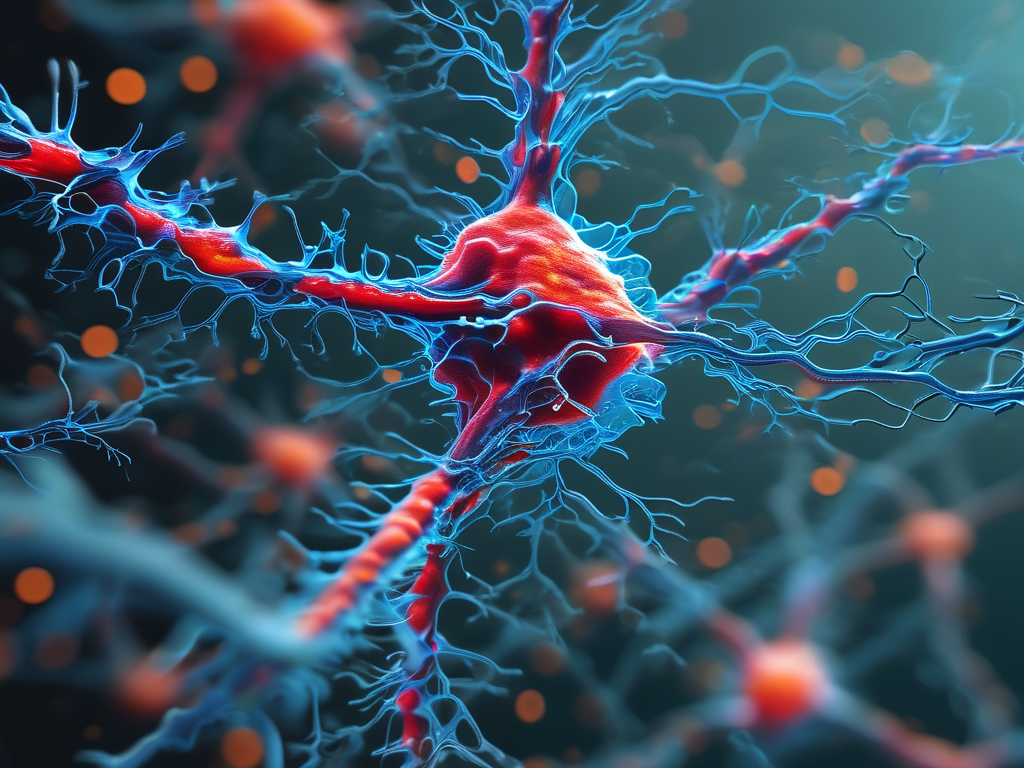

In the rapidly evolving landscape of artificial intelligence, SSC (Sparse Spiking Cortex) neural networks have emerged as a groundbreaking paradigm, blending biological inspiration with computational efficiency. Unlike traditional neural architectures, SSC models prioritize sparse connectivity and event-driven processing, mirroring the energy-efficient mechanisms observed in the human brain. This article delves into the technical foundations, practical applications, and future implications of SSC neural networks, offering insights into their transformative potential.

Biological Fidelity Meets Computational Pragmatism

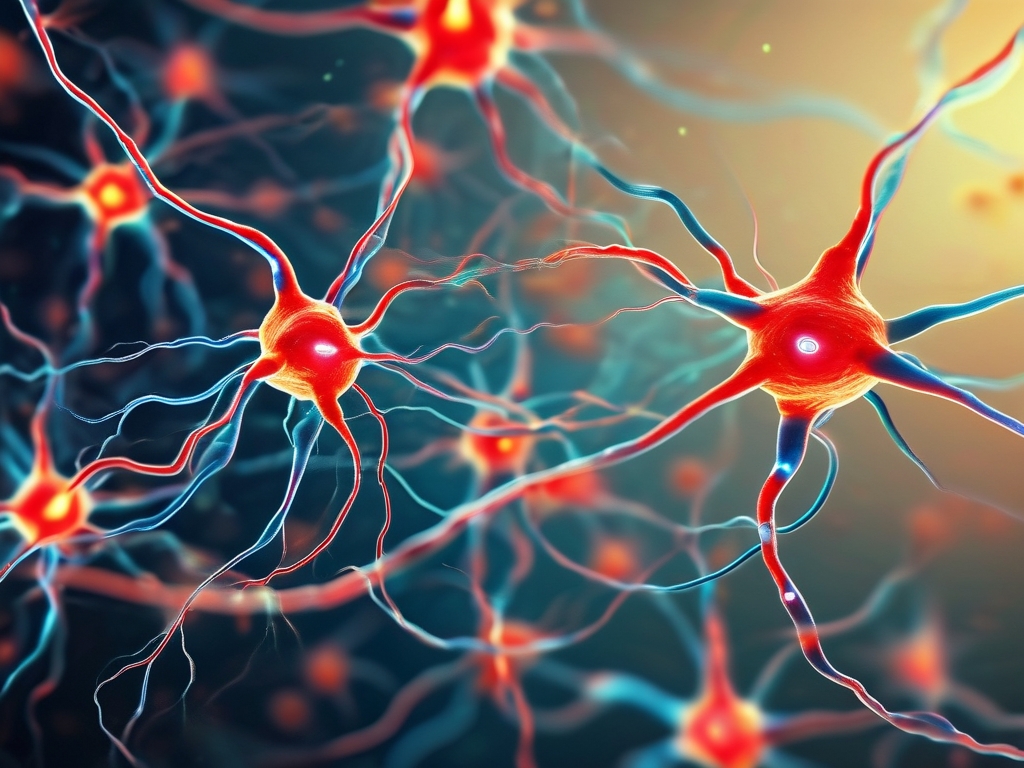

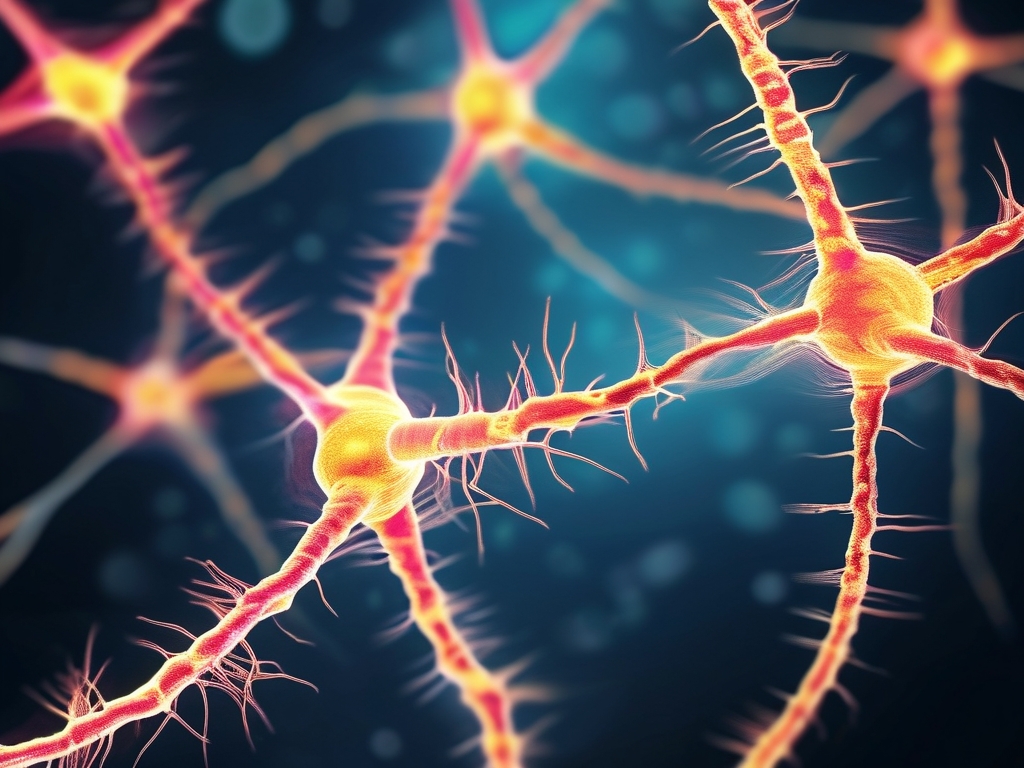

SSC neural networks derive their name from their structural resemblance to the brain’s cortical layers, where only a small fraction of neurons is active at any moment during a task. According to a 2023 study by the NeuroAI Research Consortium, this sparsity reduces computational overhead by up to 70% compared with dense networks. SSC models also rely on spike-based communication, in which a neuron transmits a signal only when its accumulated input crosses a threshold. Because silent neurons do no work, power consumption drops, which makes these models well suited to edge computing and IoT devices. For instance, a prototype SSC chip developed by Synaptic Dynamics Inc. achieved 98% accuracy in real-time gesture recognition while consuming only 2 watts, a level of efficiency that conventional CNNs would be hard-pressed to match.
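The threshold behavior described above can be illustrated with a leaky integrate-and-fire (LIF) neuron, a widely used textbook spiking model. This is a minimal sketch under stated assumptions: the article does not say which neuron model SSC networks use, so the LIF dynamics and the parameters `threshold`, `leak`, and `v_reset` are illustrative choices, and the helper `synaptic_ops` is hypothetical. It shows why sparse spiking saves work (only spikes that actually occur are propagated), but it does not reproduce the 70% figure from the study.

```python
def lif_run(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Simulate one leaky integrate-and-fire (LIF) neuron (illustrative model).

    Returns the list of time steps at which the neuron spiked.
    """
    v = v_reset
    spikes = []
    for t, i_in in enumerate(input_current):
        v = leak * v + i_in      # leaky integration: old potential decays, new input adds
        if v >= threshold:       # emit a spike only when the threshold is crossed
            spikes.append(t)
            v = v_reset          # reset the membrane potential after firing
    return spikes


def synaptic_ops(spike_matrix, fan_out):
    """Hypothetical helper comparing dense vs event-driven work for one layer.

    spike_matrix[t][n] is 1 if presynaptic neuron n spiked at step t.
    A dense layer touches every synapse at every step; an event-driven
    layer only propagates the spikes that actually occurred.
    """
    steps = len(spike_matrix)
    neurons = len(spike_matrix[0]) if spike_matrix else 0
    dense = steps * neurons * fan_out
    event_driven = sum(sum(row) for row in spike_matrix) * fan_out
    return dense, event_driven
```

With a constant input of 0.3, `lif_run([0.3] * 10)` fires only at steps 3 and 7; between spikes the neuron integrates silently and sends nothing downstream. For a 4-neuron layer over 3 steps with only 2 spikes and a fan-out of 10, `synaptic_ops` reports 120 synaptic operations for dense processing versus 20 for event-driven processing.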

Applications Across Industries

The adaptability of SSC frameworks has spurred innovation across sectors. In healthcare, researchers at Stanford’s Bio-X Lab deployed an SSC model to decode neural signals from paralyzed patients, enabling rudimentary communication through brain-computer interfaces. The system’s low latency (under 8 ms) and high tolerance to noise proved critical in clinical settings. Meanwhile, autonomous-vehicle startups such as Aurora Tech have integrated SSC architectures for dynamic obstacle detection. Unlike legacy systems that rely on continuous sensor polling, SSC-driven processors activate only when the environment changes, cutting energy use by 40% in highway driving tests.
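The contrast between continuous polling and activating only on change can be sketched with send-on-delta encoding, one common way to turn a sampled sensor signal into sparse events (event cameras apply a similar idea per pixel). This is an illustrative assumption, not a description of Aurora Tech’s pipeline; the function name `delta_events` and the `threshold` parameter are hypothetical.

```python
def delta_events(samples, threshold):
    """Send-on-delta encoding (illustrative sketch).

    Emits an (index, sign) event only when the signal has moved by at least
    `threshold` since the last emitted event; otherwise nothing is produced.
    """
    if not samples:
        return []
    events = []
    ref = samples[0]  # reference level at the most recent event
    for i, x in enumerate(samples[1:], start=1):
        diff = x - ref
        if abs(diff) >= threshold:
            events.append((i, 1 if diff > 0 else -1))  # +1 rise, -1 fall
            ref = x  # move the reference only when an event fires
    return events
```

For the samples `[0.0, 0.01, 0.02, 0.5, 0.51, 0.52, 0.1]` with a threshold of 0.2, a polling loop would process all 7 readings, while `delta_events` yields just `[(3, 1), (6, -1)]`: one event for the jump up and one for the drop. Downstream spiking layers would then only wake up for those two events.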

Challenges and Ethical Considerations

Despite their promise, SSC networks face hurdles. Training spiking models is difficult because the spike function is a discontinuous step: its derivative is zero almost everywhere and undefined at the threshold, so standard backpropagation produces no useful gradient. Methods such as surrogate gradient descent work around this by substituting a smooth approximation of that derivative during the backward pass. A 2024 paper in Nature Machine Intelligence highlighted how hybrid approaches, which combine SSC layers with traditional modules, can mitigate this problem, but interoperability between the two remains a bottleneck. Moreover, the “black box” nature of sparse activations raises interpretability concerns. Regulatory bodies such as the EU AI Office are drafting guidelines to ensure that SSC-based decision systems in finance or law enforcement remain auditable.
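The training problem can be seen in miniature with a single neuron. The sketch below is a simplified illustration, not the method from the cited paper. It uses a Heaviside step in the forward pass and, in the backward pass, the derivative of a fast sigmoid, which is one common surrogate choice. The function names, the steepness `beta`, and the squared-error loss are assumptions. Real spiking networks apply the same substitution across many neurons and time steps (backpropagation through time).

```python
def spike(v, threshold=1.0):
    """Forward pass: Heaviside step. Its true derivative is 0 almost everywhere."""
    return 1.0 if v >= threshold else 0.0


def surrogate_grad(v, threshold=1.0, beta=5.0):
    """Backward pass: fast-sigmoid derivative used in place of the step's derivative.

    Peaks at 1.0 when v equals the threshold and decays smoothly on both sides.
    """
    return 1.0 / (1.0 + beta * abs(v - threshold)) ** 2


def train_single_neuron(x, target, w=0.1, lr=0.5, epochs=50):
    """Fit weight w so that spike(w * x) matches target (0.0 or 1.0).

    Loss is (spike(w * x) - target) ** 2. By the chain rule,
    dL/dw = 2 * (out - target) * dout/dv * x, where dout/dv is
    replaced by the surrogate derivative.
    """
    for _ in range(epochs):
        v = w * x                # membrane potential (no time dynamics here)
        out = spike(v)           # non-differentiable forward pass
        grad = 2.0 * (out - target) * surrogate_grad(v) * x
        w -= lr * grad           # plain gradient-descent step
    return w
```

Starting from `w = 0.1` with input `x = 2.0`, the neuron is initially silent, so the true derivative of the step function is zero and `w` would never move. The surrogate still supplies a nonzero gradient, and after a few updates `w * x` crosses the threshold. At that point the loss, and therefore the update, drops to zero.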

The Road Ahead

Advances in neuromorphic hardware are poised to accelerate SSC adoption. Intel’s Loihi 3 chips, slated for release in 2025, feature dedicated cores for spike-timing-dependent plasticity (STDP), enabling on-device SSC training. Concurrently, open-source frameworks such as PySSC are broadening access, with their GitHub repositories seeing a 300% surge in contributions since 2023. As quantum computing matures, hybrid quantum-SSC systems could tackle combinatorial optimization problems, from drug discovery to logistics, with unprecedented speed.
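Spike-timing-dependent plasticity adjusts a synapse based only on the relative timing of pre- and postsynaptic spikes, which is why it lends itself to on-chip learning. Below is a minimal sketch of the classic pair-based, exponential-window STDP rule. It does not reflect how Intel’s hardware or PySSC implement learning. The amplitudes `a_plus` and `a_minus`, the time constants `tau_plus` and `tau_minus` (in ms), the all-to-all spike pairing, and the weight bounds are illustrative assumptions.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair (times in ms).

    Pre before post (dt > 0) potentiates; post before pre (dt < 0) depresses.
    The effect decays exponentially as the spikes move further apart.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum contributions from all pre/post pairs (all-to-all) and clip the weight."""
    dw = sum(stdp_delta_w(tp, tq) for tp in pre_spikes for tq in post_spikes)
    return min(w_max, max(w_min, w + dw))
```

Because each update needs only two spike times and a few local constants, a rule of this shape can run next to each synapse without a global backward pass. That locality is the usual argument for putting plasticity directly into neuromorphic cores.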

In conclusion, SSC neural networks represent more than a technical curiosity; they are a bridge between biological intelligence and sustainable AI. By addressing current limitations while fostering cross-disciplinary collaboration, the next decade could see SSC architectures redefine what machines can learn, and how efficiently they learn it.