The fusion of artificial intelligence with code compilation has revolutionized software development, introducing adaptive systems that optimize program execution through machine learning. This article explores the foundational principles behind AI-enhanced compilers, their operational mechanics, and practical applications reshaping modern programming workflows.

1. Traditional Compilation vs. AI-Enhanced Approaches

Classical compilers follow deterministic rules to translate high-level code into machine instructions. Lexical analysis, syntax parsing, and register allocation operate through predefined algorithms. In contrast, AI-driven compilers employ neural networks to analyze code patterns, predict optimization opportunities, and adapt to hardware architectures dynamically.

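For example, reinforcement-learning-based optimizers have been built on top of compiler infrastructures such as Google’s MLIR to tune tensor operations for accelerators such as TPUs. By training on thousands of code variants, such a system can identify context-specific optimizations that a fixed heuristic might overlook. The contrast is easiest to see on a simple loop, written first in scalar form and then in the hand-vectorized form a learned optimizer might propose: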

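    #include <immintrin.h>  // AVX intrinsics

    // Traditional scalar loop (arr and base are float arrays of length 1000)
    for (int i = 0; i < 1000; i++) {
        arr[i] = base[i] * 2.0f;
    }

    // AI-suggested vectorization: 8 floats per iteration with AVX.
    // Assumes both arrays are 32-byte aligned; 1000 is a multiple of 8.
    for (int i = 0; i < 1000; i += 8) {
        _mm256_store_ps(&arr[i], _mm256_mul_ps(_mm256_set1_ps(2.0f), _mm256_load_ps(&base[i])));
    }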
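
The idea of learning from many code variants can be sketched as a small search loop. The following is a minimal sketch, not how MLIR or any production compiler works: it uses an epsilon-greedy bandit (a simplified stand-in for reinforcement learning) to choose among hypothetical unroll factors, and `simulated_cost` is an invented placeholder for compiling and timing a real variant.

```c
#define NUM_VARIANTS 4

/* Hypothetical candidate unroll factors the search may pick from. */
static const int UNROLL_FACTORS[NUM_VARIANTS] = {1, 2, 4, 8};

/* Assumption: stands in for compiling and timing one variant.
   Lower is better; the numbers are invented for illustration. */
static double simulated_cost(int variant) {
    static const double cost[NUM_VARIANTS] = {10.0, 6.5, 4.0, 5.2};
    return cost[variant];
}

/* Small deterministic pseudo-random generator so runs are repeatable. */
static unsigned int next_random(unsigned int *state) {
    *state = *state * 1664525u + 1013904223u;
    return *state >> 16;
}

/* Returns the index of the variant with the lowest average observed cost. */
int search_best_variant(int trials, unsigned int seed) {
    double total[NUM_VARIANTS] = {0};
    int count[NUM_VARIANTS] = {0};
    unsigned int state = seed;

    if (trials < NUM_VARIANTS) trials = NUM_VARIANTS;  /* measure each once */

    for (int t = 0; t < trials; t++) {
        int pick;
        if (t < NUM_VARIANTS) {
            pick = t;                                   /* initial sweep */
        } else if (next_random(&state) % 10 == 0) {
            pick = (int)(next_random(&state) % NUM_VARIANTS);  /* explore */
        } else {
            pick = 0;                                   /* exploit best so far */
            for (int v = 1; v < NUM_VARIANTS; v++)
                if (total[v] / count[v] < total[pick] / count[pick]) pick = v;
        }
        total[pick] += simulated_cost(pick);
        count[pick]++;
    }

    int best = 0;
    for (int v = 1; v < NUM_VARIANTS; v++)
        if (total[v] / count[v] < total[best] / count[best]) best = v;
    return best;
}

/* Convenience wrapper that maps the winning index to its unroll factor. */
int best_unroll_factor(int trials, unsigned int seed) {
    return UNROLL_FACTORS[search_best_variant(trials, seed)];
}
```

In a real setting the cost would come from noisy measurements on the target hardware, which is where the exploration step starts to matter.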

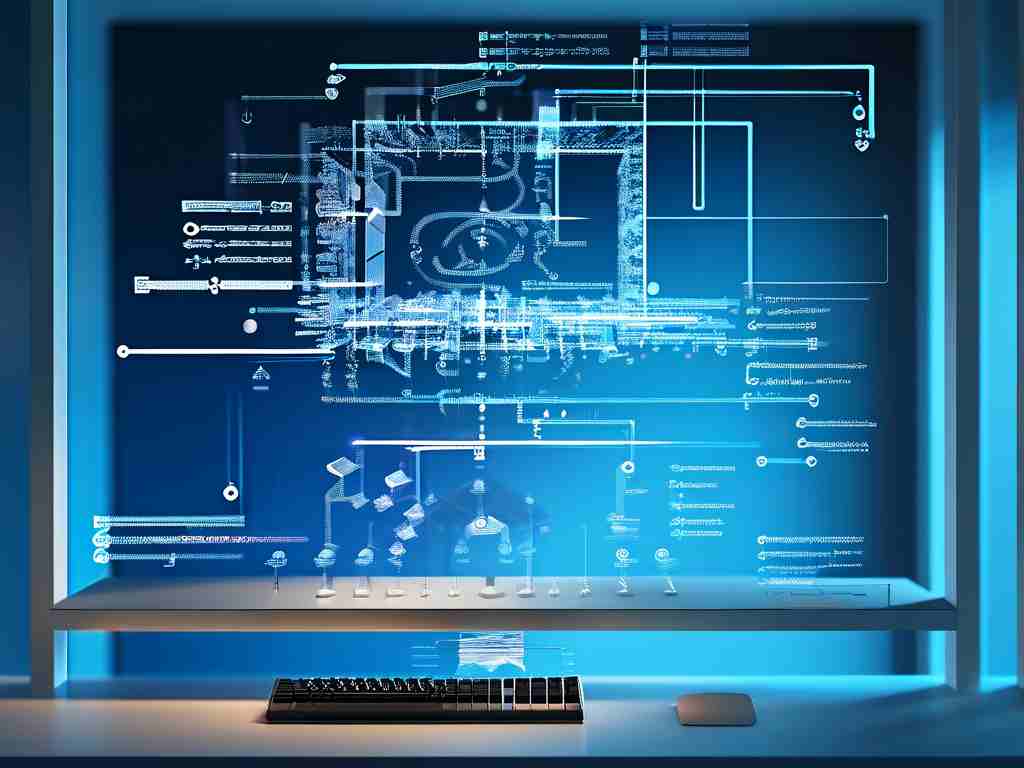

2. Neural Intermediate Representations

Modern AI compilers like Facebook’s AITemplate introduce neural intermediate representations (NIRs) that encode code semantics as graph structures. These graphs capture control flow, data dependencies, and memory access patterns, enabling transformers to predict optimal instruction scheduling. During training, models learn to map NIR subgraphs to latency/throughput metrics across GPU/CPU configurations.
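
A graph-shaped representation of this kind can be sketched with plain arrays. The example below is an illustrative assumption rather than AITemplate's actual data structure: each node is an operation with a hypothetical latency, edges are data dependencies, and `critical_path` computes the longest dependency chain, the sort of feature a learned scheduler could take as input.

```c
#define MAX_NODES 16
#define MAX_PREDS 4

/* One operation in the graph. Field names are hypothetical. */
typedef struct {
    int latency;            /* assumed cost of the operation in cycles */
    int num_preds;          /* number of data dependencies */
    int preds[MAX_PREDS];   /* indices of operations this one depends on */
} IrNode;

/* Longest latency-weighted dependency chain.
   Assumes nodes are stored in topological order (every predecessor index
   is smaller than the node's own index) and n <= MAX_NODES. */
int critical_path(const IrNode *nodes, int n) {
    int finish[MAX_NODES];
    int longest = 0;
    for (int i = 0; i < n; i++) {
        int start = 0;
        for (int p = 0; p < nodes[i].num_preds; p++) {
            int pred_finish = finish[nodes[i].preds[p]];
            if (pred_finish > start) start = pred_finish;
        }
        finish[i] = start + nodes[i].latency;
        if (finish[i] > longest) longest = finish[i];
    }
    return longest;
}

/* Example graph for: t0 = load a; t1 = load b; t2 = t0 * t1; t3 = t2 + t0 */
int example_critical_path(void) {
    IrNode g[4] = {
        {4, 0, {0}},       /* load a */
        {4, 0, {0}},       /* load b */
        {3, 2, {0, 1}},    /* multiply depends on both loads */
        {1, 2, {2, 0}},    /* add depends on the multiply and load a */
    };
    return critical_path(g, 4);
}
```

With the invented latencies above, the longest chain is load, multiply, add, for a total of 8 cycles.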

Stanford researchers demonstrated a 23% performance gain in image processing pipelines by using graph attention networks to resolve memory bottlenecks in CUDA kernels. The AI compiler automatically inserted prefetch instructions and adjusted shared memory partitioning based on kernel dimensionality.
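
What an inserted prefetch looks like can be shown on the CPU side. This is a sketch in C rather than CUDA, and it assumes GCC or Clang, whose `__builtin_prefetch` built-in issues a prefetch hint. The `distance` parameter is a hypothetical tunable of the kind a learned model would pick per kernel; it is not taken from the study described above.

```c
#include <stddef.h>

/* Sums values gathered through an index array, hinting the cache about
   the element that will be needed `distance` iterations later.
   Assumes GCC or Clang (__builtin_prefetch is a compiler built-in). */
float gather_sum_prefetch(const float *data, const int *idx,
                          size_t n, size_t distance) {
    float sum = 0.0f;
    for (size_t i = 0; i < n; i++) {
        if (i + distance < n) {
            /* arguments: address, 0 = read access, 1 = low temporal locality */
            __builtin_prefetch(&data[idx[i + distance]], 0, 1);
        }
        sum += data[idx[i]];
    }
    return sum;
}

/* Reference version without prefetching; the results must match. */
float gather_sum_plain(const float *data, const int *idx, size_t n) {
    float sum = 0.0f;
    for (size_t i = 0; i < n; i++) sum += data[idx[i]];
    return sum;
}
```

A prefetch is only a hint, so the two functions always return the same value; the choice of distance affects speed, not correctness.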

3. Challenges in AI-Based Compilation

While promising, AI-driven compilation faces three critical challenges:

- Data Scarcity: Training requires large datasets of code paired with known-good optimizations, and such data is scarce for niche hardware like quantum co-processors.

- Non-Determinism: Neural models that sample their outputs may produce different results for identical inputs, which complicates debugging and reproducible builds in safety-critical systems.

- Compilation Overhead: Model inference adds latency to every compile, which can make AI optimizations impractical for just-in-time (JIT) compilation, where compile time counts against run time.

MIT’s Cerebras project targets the overhead problem with a hybrid architecture. A rule-based pre-optimizer handles roughly 80% of routine tasks, and neural modules are invoked only for the remaining edge cases, which is reported to reduce model inference time by 40%.
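
The hybrid split can be sketched as a dispatcher that tries cheap rules first and falls back to a model only for the cases the rules decline. Everything named here (`LoopInfo`, `rule_based_unroll`, `model_predict_unroll`) is hypothetical, and the "model" is a stub so that the example stays self-contained.

```c
#include <stdbool.h>

/* Hypothetical summary of a loop the optimizer is looking at. */
typedef struct {
    int trip_count;      /* iterations, or -1 if unknown at compile time */
    bool has_branches;   /* true if the loop body contains control flow */
} LoopInfo;

/* Counts how often each path is taken, to make the split visible. */
typedef struct {
    int rule_hits;
    int model_calls;
} DispatchStats;

/* Rule-based path: decides only the routine cases.
   Returns true and sets *unroll when a rule applies. */
static bool rule_based_unroll(const LoopInfo *loop, int *unroll) {
    if (loop->has_branches || loop->trip_count < 0) return false;
    if (loop->trip_count <= 4) {
        *unroll = loop->trip_count > 0 ? loop->trip_count : 1;
        return true;
    }
    if (loop->trip_count % 4 == 0) {
        *unroll = 4;
        return true;
    }
    return false;
}

/* Stub standing in for an expensive neural model (assumption). */
static int model_predict_unroll(const LoopInfo *loop) {
    return loop->has_branches ? 1 : 2;
}

/* Tries the rules first and invokes the model only for the remaining cases. */
int choose_unroll(const LoopInfo *loop, DispatchStats *stats) {
    int unroll;
    if (rule_based_unroll(loop, &unroll)) {
        stats->rule_hits++;
        return unroll;
    }
    stats->model_calls++;
    return model_predict_unroll(loop);
}
```

Because the model is reached only when no rule fires, the share of compiles that pay the inference cost is whatever fraction of inputs the rules cannot handle.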

4. Future Directions

Emerging techniques extend these ideas. Differentiable compilation makes optimization decisions continuous so they can be tuned by gradient descent, and hardware-aware neural architecture search (NAS) folds hardware cost into the search over model designs. Researchers at NVIDIA recently unveiled a compiler that co-optimizes PyTorch code and GPU microcode through gradient descent, achieving 2.1× speedups on transformer models.
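
The core idea of differentiable compilation, treating a tuning knob as a continuous variable and following the gradient of a cost model, can be illustrated with a toy. The cost function `a / tile + b * tile` is an invented stand-in (per-tile overhead against cache pressure) and is not the NVIDIA system described above.

```c
/* Toy differentiable cost model (assumption): overhead falls as tiles grow,
   cache pressure rises. Its analytical minimum is at tile = sqrt(a / b). */
double tile_cost(double tile, double a, double b) {
    return a / tile + b * tile;
}

/* Derivative of tile_cost with respect to tile. */
double tile_cost_gradient(double tile, double a, double b) {
    return -a / (tile * tile) + b;
}

/* Plain gradient descent on the relaxed (continuous) tile size. */
double optimize_tile(double start, double a, double b, double lr, int steps) {
    double tile = start;
    for (int s = 0; s < steps; s++) {
        tile -= lr * tile_cost_gradient(tile, a, b);
        if (tile < 1.0) tile = 1.0;  /* keep the parameter in a valid range */
    }
    return tile;
}
```

With `a = 1024` and `b = 1`, the descent settles near the analytical optimum of 32. A real compiler would then round the continuous result to a tile size the hardware supports.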

Ethical considerations also emerge as AI compilers might inadvertently introduce biases. For instance, a model trained predominantly on x86 binaries could generate suboptimal ARM code, disadvantaging mobile developers. Ongoing efforts like the LLVM Foundation’s AI4Comp initiative aim to establish standardized evaluation benchmarks and fairness guidelines.

AI-powered code compilation represents a paradigm shift, blending program analysis with machine intelligence. While challenges persist in transparency and efficiency, early adopters are already witnessing measurable gains in cloud computing and embedded systems. As models evolve to handle polyglot programming and heterogeneous hardware, developers must adapt to tools that learn and reason about code as fluidly as humans—but at machine scale.