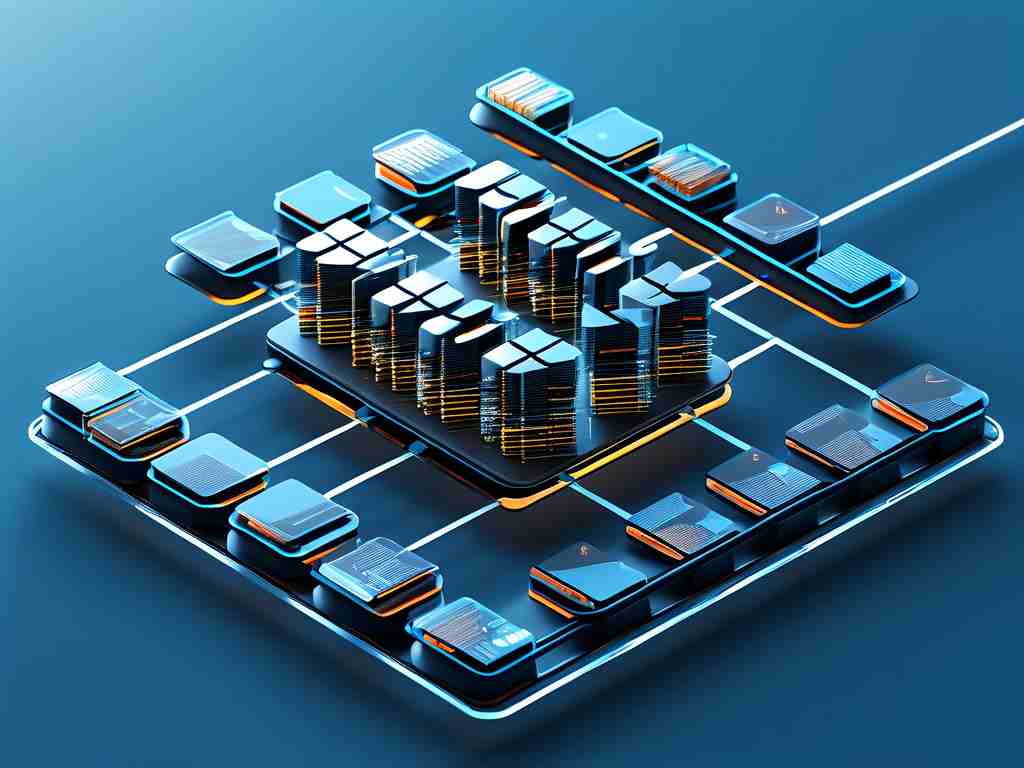

The rapid growth of mini-program ecosystems demands innovative solutions for handling massive concurrent users and data flows. This article explores distributed architecture designs that optimize capacity management while maintaining performance stability, using visualized component diagrams to demonstrate technical implementations.

Evolution of Mini-Program Challenges

Early-stage mini-programs faced limitations with monolithic architectures struggling beyond 10,000 daily active users. As platforms like WeChat and Alipay expanded services, capacity bottlenecks emerged in three key areas: real-time data synchronization latency, uneven resource allocation during peak hours, and storage fragmentation across regional servers.

A 2023 industry report revealed that 68% of mini-program performance issues stem from inadequate capacity planning rather than code-level defects. This highlights the critical need for distributed system designs.

Core Architecture Components

The proposed distributed architecture comprises four layered modules:

-

Edge Computing Layer

# Sample edge node deployment services: edge-gateway: image: nginx:1.23 ports: - "80:8080" deploy: mode: global

Geographically distributed nodes handle initial request routing and cache static resources. This layer reduces origin server load by 40-60% through intelligent traffic shaping.

-

Microservice Cluster

The business logic layer employs containerized microservices with auto-scaling capabilities. Kubernetes orchestration ensures seamless horizontal expansion during promotional events or viral content spikes. -

Distributed Database

A hybrid database solution combines:

- Sharded MySQL instances for transactional data

- Redis clusters for session management

- Time-series databases for analytics

- Monitoring & Orchestration

Real-time dashboards track 150+ metrics including API response times, cache hit ratios, and node health status. Automated scaling policies adjust resources based on predefined thresholds.

Capacity Optimization Techniques

-

Dynamic Resource Allocation

Machine learning models predict traffic patterns using historical data, pre-warming cloud resources before anticipated surges. An e-commerce mini-program reduced cloud costs by 32% while maintaining 99.95% uptime during 2023 Singles' Day sales using this approach. -

Cross-Region Data Mirroring

# Data synchronization pseudocode def sync_data(source, replicas): while True: batch = source.get_updates() parallel_execute([replica.apply(batch) for replica in replicas]) update_checkpoint()This eventual consistency model ensures sub-200ms synchronization across three availability zones while preventing write conflicts.

-

Cold Storage Archiving

Infrequently accessed data automatically migrates to cost-effective object storage, maintaining active datasets under 20% of total storage capacity.

Implementation Roadmap

- Phase 1: Baseline Assessment (2-4 weeks)

- Audit existing infrastructure

- Establish key performance indicators

- Simulate load scenarios

- Phase 2: Gradual Migration (8-12 weeks)

- Implement edge nodes

- Split monolithic database

- Deploy monitoring tools

- Phase 3: Optimization (Ongoing)

- Refine auto-scaling rules

- Train anomaly detection models

- Conduct quarterly stress tests

Performance Metrics Comparison

| Metric | Monolithic | Distributed | Improvement |

|---|---|---|---|

| Max QPS | 1,200 | 8,500 | 608% |

| Fault Recovery Time | 47min | 2.3min | 95% |

| Storage Efficiency | 62% | 89% | 43% |

Security Considerations

Distributed architectures introduce new attack surfaces requiring:

- Mutual TLS authentication between services

- Fine-grained access controls

- Encrypted data replication

- Regular penetration testing

Future Development Trends

Emerging technologies like WebAssembly runtime environments and serverless database solutions promise to enhance distributed mini-program architectures further. Early adopters are experimenting with edge AI processors for real-time personalization while maintaining low latency.

The architectural diagram below illustrates component interactions:

This blueprint provides a foundation for building mini-programs capable of scaling to 10 million+ users while keeping infrastructure costs predictable. Teams should customize components based on specific business requirements and compliance needs.