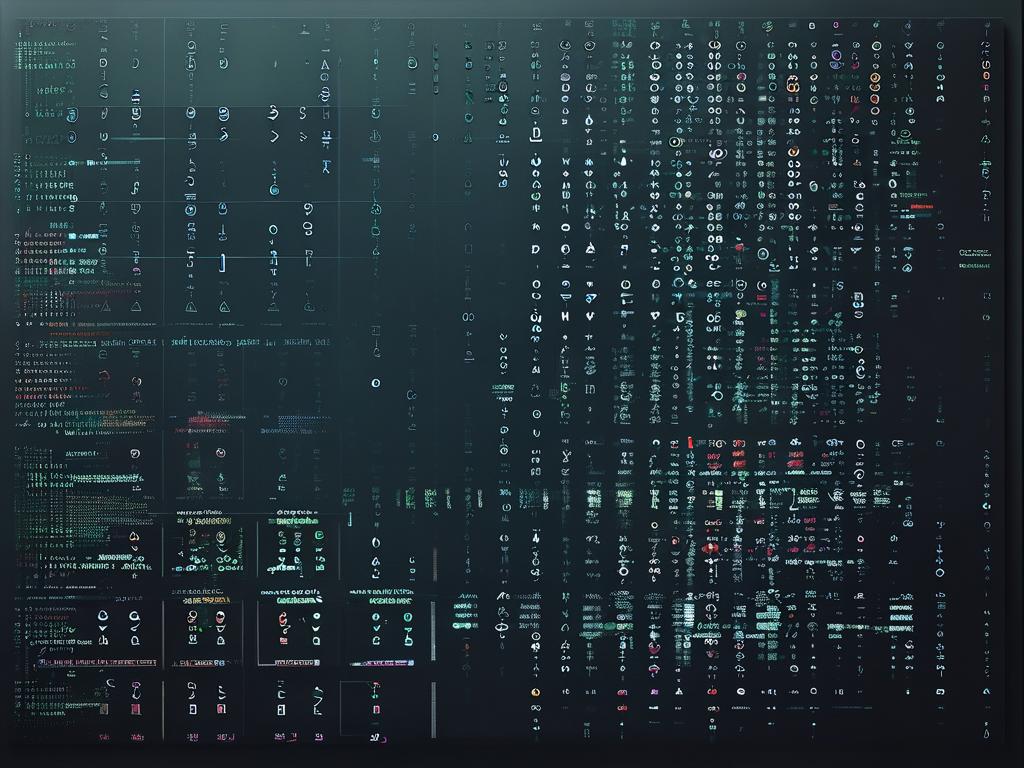

Compiler design is a cornerstone of modern computing. At its core lies the systematic processing of symbols, the basic units that connect human-readable source code to machine-executable instructions. This article explores how symbols drive language translation and let compilers turn abstract logic into working programs.

The Role of Symbols in Compilation

A compiler typically works through a series of phases: lexical analysis, syntax analysis (parsing), semantic analysis, intermediate code generation, optimization, and code generation. Symbols first appear as atomic units during lexical analysis, where the lexer groups characters into tokens such as keywords, identifiers, literals, operators, and punctuation. For instance, in the statement int x = 42;, the lexer recognizes int as a type keyword, x as an identifier, = as the assignment operator, 42 as an integer literal, and ; as the statement terminator.
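The tokenization step described above can be sketched in a few lines of C. This is a minimal illustration rather than a production lexer: the names TokenKind, Token, and next_token are hypothetical, int is the only keyword recognized, and lexemes longer than 31 characters are truncated.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical token categories for this sketch. */
typedef enum {
    TOK_KEYWORD, TOK_IDENT, TOK_NUMBER, TOK_OPERATOR, TOK_PUNCT, TOK_END
} TokenKind;

typedef struct {
    TokenKind kind;
    char text[32];   /* longer lexemes are truncated in this sketch */
} Token;

/* Reads one token starting at *src and advances *src past it. */
Token next_token(const char **src) {
    assert(src != NULL && *src != NULL);
    Token tok = { TOK_END, "" };
    const char *p = *src;
    size_t n = 0;

    while (isspace((unsigned char)*p)) p++;          /* skip whitespace */

    if (isalpha((unsigned char)*p) || *p == '_') {
        /* Identifier or keyword: letters, digits, underscores. */
        while (isalnum((unsigned char)*p) || *p == '_') {
            if (n < sizeof tok.text - 1) tok.text[n++] = *p;
            p++;
        }
        tok.text[n] = '\0';
        tok.kind = strcmp(tok.text, "int") == 0 ? TOK_KEYWORD : TOK_IDENT;
    } else if (isdigit((unsigned char)*p)) {
        /* Integer literal: a run of decimal digits. */
        while (isdigit((unsigned char)*p)) {
            if (n < sizeof tok.text - 1) tok.text[n++] = *p;
            p++;
        }
        tok.kind = TOK_NUMBER;
    } else if (*p != '\0') {
        /* Any other single character: operator or punctuation. */
        tok.text[n++] = *p;
        tok.kind = strchr("=+-*/", *p) != NULL ? TOK_OPERATOR : TOK_PUNCT;
        p++;
    }
    tok.text[n] = '\0';
    *src = p;
    return tok;
}
```

Calling next_token repeatedly on the string "int x = 42;" yields a keyword, an identifier, an operator, a number, and a punctuation token, followed by TOK_END once the input is exhausted.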

Syntax analysis then arranges these symbols into hierarchical structures according to a context-free grammar. The result is a parse tree or abstract syntax tree (AST), and building it validates that the symbol sequence follows the language’s rules. Consider the expression a + b * c: because the grammar gives multiplication (*) higher precedence than addition (+), the parser groups b * c into a subtree that becomes the right operand of +.
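One way to see how a grammar encodes precedence is a small recursive-descent parser, sketched below under simplifying assumptions: operands are single letters, only + and * are supported, and malformed input simply trips an assertion. The Node type and the parse_expr, render, and free_tree functions are hypothetical names chosen for illustration.

```c
#include <assert.h>
#include <ctype.h>
#include <stdlib.h>

/* AST node: a leaf holds a one-letter operand; an inner node holds '+' or '*'. */
typedef struct Node {
    char symbol;
    struct Node *left, *right;
} Node;

static Node *make_node(char symbol, Node *left, Node *right) {
    Node *n = malloc(sizeof *n);
    assert(n != NULL);
    n->symbol = symbol;
    n->left = left;
    n->right = right;
    return n;
}

static void skip_spaces(const char **p) {
    while (isspace((unsigned char)**p)) (*p)++;
}

/* factor := letter */
static Node *parse_factor(const char **p) {
    skip_spaces(p);
    assert(isalpha((unsigned char)**p));
    return make_node(*(*p)++, NULL, NULL);
}

/* term := factor ('*' factor)*   -- binds tighter than '+' */
static Node *parse_term(const char **p) {
    Node *left = parse_factor(p);
    for (skip_spaces(p); **p == '*'; skip_spaces(p)) {
        (*p)++;
        left = make_node('*', left, parse_factor(p));
    }
    return left;
}

/* expr := term ('+' term)* */
Node *parse_expr(const char **p) {
    Node *left = parse_term(p);
    for (skip_spaces(p); **p == '+'; skip_spaces(p)) {
        (*p)++;
        left = make_node('+', left, parse_term(p));
    }
    return left;
}

/* Writes a fully parenthesized form of the tree into out (the caller sizes
   the buffer) and returns a pointer to the terminating '\0'. */
char *render(const Node *n, char *out) {
    if (n->left == NULL) {
        *out++ = n->symbol;
    } else {
        *out++ = '(';
        out = render(n->left, out);
        *out++ = ' '; *out++ = n->symbol; *out++ = ' ';
        out = render(n->right, out);
        *out++ = ')';
    }
    *out = '\0';
    return out;
}

void free_tree(Node *n) {
    if (n == NULL) return;
    free_tree(n->left);
    free_tree(n->right);
    free(n);
}
```

Because parse_expr delegates to parse_term for each operand, every run of multiplications is collected into a subtree before addition sees it. Precedence here is a consequence of which grammar rule calls which, not a separate lookup.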

Symbol Table Management

A compiler’s symbol table acts as a dynamic registry, storing identifiers with attributes like data type, scope, and memory location. This metadata enables semantic analysis to detect errors such as undeclared variables or type mismatches. Modern compilers often implement symbol tables using hash tables or hierarchical structures for efficient lookup.

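```c
struct SymbolEntry {
    char *name;        /* identifier as written in the source */
    int type;          /* code for the symbol's data type */
    int scope_level;   /* nesting depth of the declaring scope */
    /* Additional metadata */
};
```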
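As a rough sketch of the hash-table approach mentioned above, the entry structure can be extended with a chaining pointer and stored in a fixed array of buckets. The next field, the bucket count, the djb2-style hash, and the symtab_insert and symtab_lookup names are all assumptions made for this example, not part of any particular compiler.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define BUCKET_COUNT 64   /* arbitrary size chosen for the sketch */

typedef struct SymbolEntry {
    char *name;
    int type;
    int scope_level;
    struct SymbolEntry *next;   /* assumed field: chain of entries in one bucket */
} SymbolEntry;

typedef struct {
    SymbolEntry *buckets[BUCKET_COUNT];
} SymbolTable;

/* djb2-style string hash reduced to a bucket index. */
static unsigned hash_name(const char *name) {
    unsigned long h = 5381;
    while (*name) h = h * 33 + (unsigned char)*name++;
    return (unsigned)(h % BUCKET_COUNT);
}

/* Adds a symbol at the head of its bucket; returns NULL if allocation fails. */
SymbolEntry *symtab_insert(SymbolTable *t, const char *name, int type, int scope_level) {
    assert(t != NULL && name != NULL);
    SymbolEntry *e = malloc(sizeof *e);
    if (e == NULL) return NULL;
    e->name = malloc(strlen(name) + 1);
    if (e->name == NULL) { free(e); return NULL; }
    strcpy(e->name, name);
    e->type = type;
    e->scope_level = scope_level;
    unsigned i = hash_name(name);
    e->next = t->buckets[i];
    t->buckets[i] = e;
    return e;
}

/* Returns the most recently inserted entry with this name, or NULL. */
SymbolEntry *symtab_lookup(const SymbolTable *t, const char *name) {
    assert(t != NULL && name != NULL);
    for (SymbolEntry *e = t->buckets[hash_name(name)]; e != NULL; e = e->next)
        if (strcmp(e->name, name) == 0) return e;
    return NULL;
}
```

Semantic analysis could use symtab_lookup to flag an undeclared variable when it returns NULL. Freeing the table is omitted here for brevity.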

To handle nested scopes, compilers may employ stack-based symbol tables. For example, on entering a function block, a new scope layer is pushed onto the stack, so local variables shadow global ones of the same name until the block exits and the layer is popped.
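The stack discipline can be sketched with a single array in which each entry records the scope level that declared it. The ScopeStack type, its fixed capacity, and the enter_scope, exit_scope, declare, and resolve functions are hypothetical names for this illustration; a real compiler would likely combine this with a hash table for faster lookup.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_SYMBOLS 128   /* arbitrary capacity for the sketch */

typedef struct {
    const char *name;   /* not copied: the caller keeps the string alive */
    int type;
    int scope_level;
} ScopedSymbol;

typedef struct {
    ScopedSymbol entries[MAX_SYMBOLS];
    size_t count;
    int current_level;   /* 0 is the global scope */
} ScopeStack;

void enter_scope(ScopeStack *s) {
    s->current_level++;
}

/* Pops every symbol declared in the innermost scope, then leaves it. */
void exit_scope(ScopeStack *s) {
    assert(s->current_level > 0);
    while (s->count > 0 &&
           s->entries[s->count - 1].scope_level == s->current_level)
        s->count--;
    s->current_level--;
}

void declare(ScopeStack *s, const char *name, int type) {
    assert(s->count < MAX_SYMBOLS);
    s->entries[s->count++] = (ScopedSymbol){ name, type, s->current_level };
}

/* Searches from the top of the stack so inner declarations are found first. */
const ScopedSymbol *resolve(const ScopeStack *s, const char *name) {
    for (size_t i = s->count; i > 0; i--)
        if (strcmp(s->entries[i - 1].name, name) == 0)
            return &s->entries[i - 1];
    return NULL;
}
```

Declaring x globally, entering a scope, and declaring x again makes resolve return the inner entry. After exit_scope, the same call returns the global one.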

Intermediate Representation and Optimization

Symbols reappear in intermediate code (e.g., three-address code or LLVM IR), where complex expressions are decomposed into simpler operations. Optimizers then analyze these symbolic representations to eliminate redundancy. Constant propagation, for instance, substitutes a known constant for each use of a variable, and constant folding then evaluates the resulting constant expression at compile time:

```llvm
; If %a is known to be 5, the operation becomes add i32 5, 7,
; which folds to 12; later uses of %result are replaced by i32 12.
%result = add i32 %a, 7
```
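A single forward pass over straight-line code is enough to illustrate the idea. The sketch below assumes a hypothetical instruction set containing only additions, registers numbered from 0 to MAX_REGS - 1, and 32-bit values that wrap on overflow. The Operand, AddInstr, and ConstMap types are invented for this example and do not correspond to LLVM's data structures.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_REGS 16   /* hypothetical register-file size for the sketch */

typedef struct {
    bool is_const;    /* true: value holds a literal; false: reg names a register */
    int reg;
    uint32_t value;
} Operand;

/* dest = lhs + rhs */
typedef struct {
    int dest;
    Operand lhs, rhs;
} AddInstr;

/* Which registers currently hold a known constant, and what it is. */
typedef struct {
    bool known[MAX_REGS];
    uint32_t value[MAX_REGS];
} ConstMap;

/* Replaces a register operand with its constant if one is known. */
static void substitute(Operand *op, const ConstMap *m) {
    if (op->is_const) return;
    assert(op->reg >= 0 && op->reg < MAX_REGS);
    if (m->known[op->reg]) {
        op->is_const = true;
        op->value = m->value[op->reg];
    }
}

/* Propagates known constants into operands and folds constant additions. */
void propagate_constants(AddInstr *code, size_t count, ConstMap *m) {
    for (size_t i = 0; i < count; i++) {
        AddInstr *in = &code[i];
        assert(in->dest >= 0 && in->dest < MAX_REGS);
        substitute(&in->lhs, m);
        substitute(&in->rhs, m);
        if (in->lhs.is_const && in->rhs.is_const) {
            /* Both operands known: fold, and record the result as a constant. */
            m->known[in->dest] = true;
            m->value[in->dest] = in->lhs.value + in->rhs.value;  /* wraps mod 2^32 */
        } else {
            /* Result depends on an unknown value, so dest is no longer constant. */
            m->known[in->dest] = false;
        }
    }
}
```

With register 0 standing in for %a and marked as the constant 5, an instruction that adds 7 to it is folded to 12, and any later instruction reading the destination register sees that constant in turn. Code with branches or loops would need a proper dataflow analysis instead of this single pass.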

Challenges in Symbol Handling

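Real-world compilers face challenges like handling ambiguous symbols or managing large-scale symbol tables. The C++ "most vexing parse" exemplifies ambiguity: Type name(); looks as if it could either declare a function or default-initialize an object, and the language resolves it by rule, treating anything that can be parsed as a declaration as one, so it declares a function. More generally, C and C++ parsers must consult the symbol table while parsing: a statement such as a * b; declares a pointer if a names a type and is a multiplication otherwise.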
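A very small sketch shows the kind of symbol-dependent decision involved. In C-family languages a statement of the form a * b; is a pointer declaration when a names a type and a multiplication otherwise, so the parser has to ask the symbol table before it can choose a grammar rule. The function names and the flat list of type names below are hypothetical simplifications of what a real front end tracks.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

typedef enum { STMT_DECLARATION, STMT_EXPRESSION } StatementKind;

/* Returns true when name appears in the list of known type names. */
static bool is_type_name(const char *name,
                         const char *const *type_names, size_t count) {
    for (size_t i = 0; i < count; i++)
        if (strcmp(type_names[i], name) == 0) return true;
    return false;
}

/* Classifies a statement of the form "<first> * <second>;" by checking
   whether its first identifier is currently known to be a type. */
StatementKind classify_star_statement(const char *first,
                                      const char *const *type_names,
                                      size_t count) {
    assert(first != NULL);
    return is_type_name(first, type_names, count) ? STMT_DECLARATION
                                                  : STMT_EXPRESSION;
}
```

The same token sequence therefore parses differently depending on declarations seen earlier, which is why syntax analysis and symbol-table management cannot be fully separated in these languages.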

Debugging symbols add another layer of complexity. Debuggers such as GDB rely on DWARF data (Microsoft’s toolchain uses PDB files for the same purpose) to map machine code back to source-level symbols, which requires the compiler’s debug output to stay precisely aligned with the original program structure, even after optimization.

Case Study: Cross-Language Interoperability

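Modern systems increasingly require symbols to cross language boundaries. Python’s C extensions demonstrate this: an extension module registers its C functions in a method table that maps Python-visible names to C function pointers, and the interpreter reaches them through the CPython API. The extension author, rather than the compiler alone, must keep symbol names consistent (for example, the module initialization function is named PyInit_ followed by the module name) and must follow CPython’s reference-counting rules so that memory is managed correctly across the two type systems.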

Future Directions

Emerging tools apply machine learning to the symbolic structure of code. Facebook’s Aroma, for example, is a code recommendation tool that searches a codebase for snippets structurally similar to the code being written. Meanwhile, WebAssembly defines a portable binary format in which modules import and export functions by name, with the goal of near-native execution across platforms.

From lexical tokens to optimized machine code, symbols form the connective tissue of compilation. How well a compiler manages them determines both its correctness and the efficiency of the code it generates. As programming paradigms evolve, so will the strategies for symbol manipulation, keeping compilers adaptable as languages and hardware change.