Large language models and AI systems are revolutionizing industries, from healthcare to finance, driving an unprecedented demand for powerful computing servers. At the heart of these servers lies memory, a critical component that dictates performance and efficiency. Understanding how much memory is needed for large model computing servers isn't just a technical detail; it's essential for organizations aiming to deploy cutting-edge AI solutions without bottlenecks. In this article, we'll explore the factors influencing memory requirements, provide real-world examples, and discuss optimization strategies to help you make informed decisions. Whether you're a tech enthusiast or a business leader, this insight could save costs and boost innovation in your AI projects.

The memory a large-model server needs varies widely and depends mainly on the scale of the models being run. Training massive neural networks with billions of parameters, such as GPT-4 or similar architectures, often requires terabytes of memory in aggregate, usually spread across many accelerators rather than held in a single machine's RAM. During training, the system must store not only the model weights but also gradients, optimizer state, intermediate activations, and data batches. A common rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter for weights, gradients, and optimizer state, so a model with 100 billion parameters needs roughly 1-2 TB before activations are counted, if it is to avoid swapping to slower storage. This is a rough estimate; actual needs rise with longer sequences, larger batches, or multi-modal inputs. As models continue to grow, aggregate memory demands in specialized data centers could move toward the petabyte range.
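The 100-billion-parameter estimate above can be reproduced with a few lines of arithmetic. This is a minimal sketch, assuming mixed-precision training with Adam; the byte counts in `BYTES_PER_PARAM` and the function names are illustrative assumptions, not measurements from any particular system, and activations are deliberately left out.

```python
# Rule-of-thumb bytes per parameter for mixed-precision training with Adam.
# These values are assumptions for illustration, not measured figures.
BYTES_PER_PARAM = {
    "fp16_weights": 2,
    "fp16_gradients": 2,
    "fp32_master_weights": 4,
    "fp32_adam_momentum": 4,
    "fp32_adam_variance": 4,
}


def training_state_bytes(num_params: int) -> int:
    """Memory for weights, gradients, and optimizer state (no activations)."""
    return num_params * sum(BYTES_PER_PARAM.values())


def to_terabytes(num_bytes: int) -> float:
    """Convert bytes to decimal terabytes (1 TB = 10**12 bytes)."""
    return num_bytes / 1e12


params = 100_000_000_000  # 100 billion parameters
print(f"{to_terabytes(training_state_bytes(params)):.1f} TB")  # prints 1.6 TB
```

Activations come on top of this figure and depend on batch size and sequence length, so the result is better read as a lower bound than as a sizing target.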

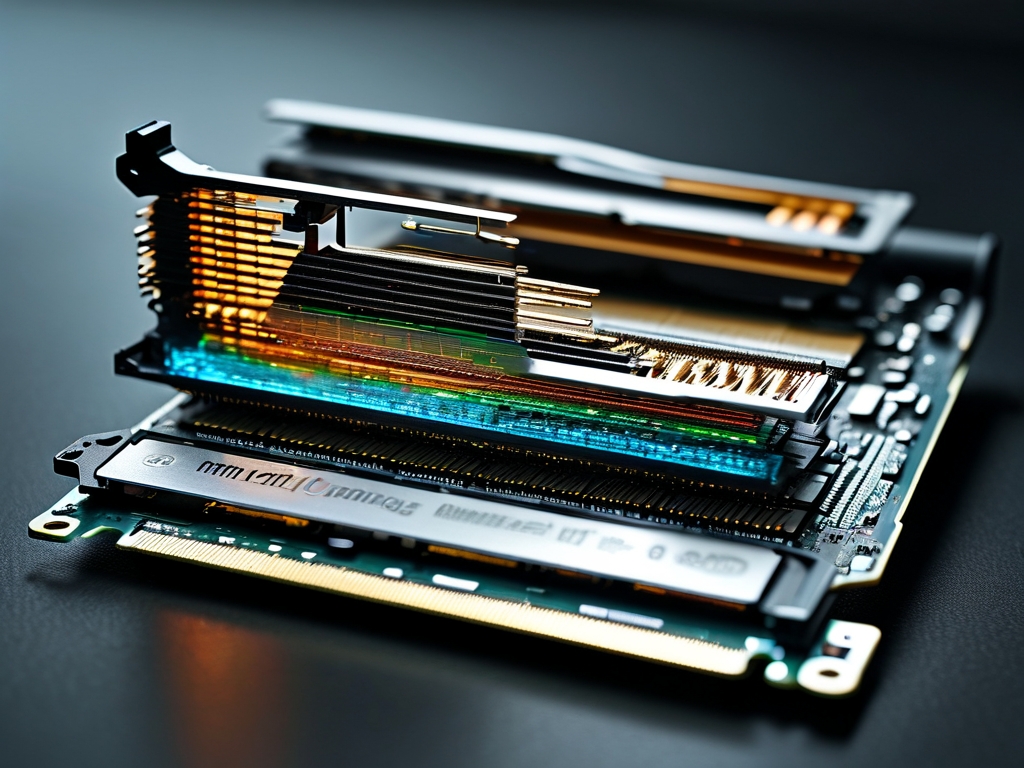

Several key factors influence the exact memory footprint. First, the model architecture plays a large role. Transformer-based models, popular in NLP, are memory-intensive partly because standard attention stores score matrices whose size grows with the square of the sequence length. A single transformer layer in a large model can hold gigabytes of weights and activations, and stacking dozens of layers multiplies this. Second, batch size during training or inference significantly affects memory usage. Larger batches improve throughput but need more memory to hold all activations at once: doubling the batch size roughly doubles activation memory, while the weights stay the same size. Third, hardware and software choices matter. GPUs with high-bandwidth memory (HBM), such as NVIDIA's A100 with 40 GB or 80 GB, offer very fast but limited on-device memory; HBM speeds up computation rather than shrinking the footprint, so a model that does not fit must be split across devices or partly offloaded to host RAM. Additionally, frameworks such as PyTorch or TensorFlow add overhead of their own (runtime context, allocator caching, fragmentation), and inefficient code can inflate memory usage further, by perhaps 10-20%. Consider this simple pseudocode snippet illustrating memory allocation in a training loop:

    // Pseudocode: where memory goes in one training step
    model = initialize_large_model(params)         // weights
    data_batch = load_data(batch_size)             // input batch
    activations = forward_pass(model, data_batch)  // intermediate values kept for backprop
    loss = compute_loss(activations)
    gradients = backward_pass(loss)                // one gradient per weight
    update_weights(model, gradients)               // optimizer state also needs memory

This snippet shows how multiple components compete for memory, which is why even small inefficiencies can escalate costs. Beyond this, real-world deployments must account for system-level factors such as operating system overhead, caching, and concurrent processes, which can add an estimated 10-30% on top. In a cloud setup, for instance, the virtualization layer reserves some resources for itself, so some over-provisioning is needed.
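To make the batch-size and attention effects concrete, here is a small sketch that sizes the attention score matrix of one transformer layer and then applies a system overhead margin. It assumes standard attention that materializes the full score matrix in 16-bit precision (fused kernels such as FlashAttention avoid storing it); the function names and the example shape are hypothetical.

```python
def attention_score_bytes(batch_size: int, num_heads: int, seq_len: int,
                          bytes_per_value: int = 2) -> int:
    """Bytes for one layer's score matrix: batch x heads x seq_len x seq_len."""
    return batch_size * num_heads * seq_len * seq_len * bytes_per_value


def with_overhead(base_bytes: float, overhead_fraction: float) -> float:
    """Add a system-level margin (OS, caching, other processes) to an estimate."""
    return base_bytes * (1 + overhead_fraction)


# Hypothetical shape: batch of 8, 32 heads, 2048-token sequences, fp16 values.
base = attention_score_bytes(batch_size=8, num_heads=32, seq_len=2048)
print(f"{base / 1024**3:.1f} GiB")  # prints 2.0 GiB

# Doubling the batch doubles this buffer; doubling the sequence quadruples it.
print(attention_score_bytes(16, 32, 2048) // base)  # prints 2
print(attention_score_bytes(8, 32, 4096) // base)   # prints 4

# Provision with a 30% margin for system overhead.
print(f"{with_overhead(base, 0.30) / 1024**3:.1f} GiB")  # prints 2.6 GiB
```

The sequence-length term is the one that tends to surprise people, since it grows quadratically while batch size grows only linearly.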

Optimizing memory usage is crucial for managing costs and performance. One effective strategy is model parallelism, where the model is split across multiple GPUs or servers so that each holds only part of it. Gradient checkpointing, which stores only selected activations and recomputes the rest during the backward pass, can cut activation memory by 50% or more for large models at the cost of extra computation. Another approach is quantization, which reduces numerical precision from 32-bit to 16-bit or 8-bit and shrinks weight memory by 2x or 4x, usually without major accuracy loss. Mixed-precision training roughly halves activation memory, although it typically keeps a 32-bit master copy of the weights, so the total saving is smaller than half. Distributed training frameworks help as well: Horovod scales data-parallel training across clusters, and DeepSpeed can additionally partition optimizer state, gradients, and weights across devices, which lowers the memory needed per node. In practice, large models are commonly run on multi-GPU servers that pair tens of gigabytes of HBM per GPU with 512 GB to 1 TB or more of host RAM per node. Trade-offs exist, however: aggressive optimization can increase computation time or require specialized hardware, so balancing these is key for sustainable AI deployment.
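As a rough illustration of the quantization and model-splitting ideas above, the sketch below computes weight memory at different precisions and the share each device holds when the weights are divided evenly. The names are hypothetical, and the even split is a simplifying assumption: real deployments also need room for activations, caches, and communication buffers.

```python
# Bytes used to store one value at each precision.
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_bytes(num_params: int, precision: str) -> int:
    """Memory needed to hold the model weights alone at a given precision."""
    return num_params * BYTES_PER_VALUE[precision]


def per_device_bytes(total_bytes: int, num_devices: int) -> int:
    """Share per device under an even split (rounded up to whole bytes)."""
    return -(-total_bytes // num_devices)  # ceiling division


params = 100_000_000_000  # 100 billion parameters
for precision in ("fp32", "fp16", "int8"):
    print(precision, weight_bytes(params, precision) / 1e9, "GB")
# prints:
# fp32 400.0 GB
# fp16 200.0 GB
# int8 100.0 GB

# fp16 weights split evenly across 8 GPUs: 25 GB each.
print(per_device_bytes(weight_bytes(params, "fp16"), 8) / 1e9, "GB")  # prints 25.0 GB
```

At 25 GB per device the fp16 weights alone would fit within a 40 GB GPU, which shows why sharding and reduced precision are usually combined rather than chosen between.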

Looking ahead, the trend toward even larger models suggests that memory demands will keep rising. With advances in generative AI and real-time inference, the largest clusters may eventually need petabytes of memory in aggregate, while edge computing and autonomous systems face the opposite pressure of fitting capable models into tightly limited memory. Innovations in memory technology, such as non-volatile RAM or advanced caching, could ease this, but for now planning for scalability is vital. Ultimately, determining the right memory size means assessing your specific use case: start with benchmarks, test iteratively, and monitor resources closely. Doing so helps future-proof your infrastructure and supports faster, more reliable AI deployments.
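For the "test iteratively and monitor" step, a quick way to start is to measure peak memory around a representative workload. The sketch below uses Python's standard `tracemalloc` module, which only sees allocations made through the Python allocator; it does not see GPU memory, for which framework tools such as PyTorch's `torch.cuda.max_memory_allocated` are the usual choice. The helper names and the 10 MB stand-in workload are hypothetical.

```python
import tracemalloc


def measure_peak_bytes(workload):
    """Run workload() and return (result, peak bytes traced during the call)."""
    tracemalloc.start()
    try:
        result = workload()
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


def allocate_buffer():
    # Stand-in for a real workload: allocate a 10 MB buffer.
    return bytearray(10_000_000)


buffer, peak = measure_peak_bytes(allocate_buffer)
print(f"peak traced memory: {peak / 1e6:.1f} MB")
```

Running the same measurement at two or three batch sizes gives a simple scaling curve to extrapolate from before committing to a server size.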