Driving robot technology, a term often used interchangeably with autonomous vehicles, promises safer and more efficient mobility. Rigorous testing is essential to ensure these systems behave reliably in real-world scenarios. Without comprehensive validation, accidents, system failures, and unresolved ethical dilemmas could undermine public trust and adoption. This article covers practical methods for testing driving robot technology, emphasizing a holistic approach that blends simulation, real-world trials, and iterative refinement to achieve reliability.

To begin, simulation-based testing forms the bedrock of evaluating driving robots. Engineers use simulators such as CARLA or Gazebo to create virtual environments that mimic diverse driving conditions, including urban traffic, adverse weather, and unexpected obstacles. For instance, Python scripts built on the client libraries of ROS (Robot Operating System) can feed simulated sensor inputs to the driving stack, allowing developers to assess how the robot processes data and makes decisions. A common approach involves scripting scenarios where the vehicle encounters pedestrians or sudden lane changes, enabling thousands of test runs in a controlled, risk-free setting. This not only accelerates development but also uncovers edge cases that would be dangerous or impractical to stage in physical tests. Yet simulations have limitations: they cannot fully replicate the variability of human behavior or unpredictable road events, which is why complementary real-world validation is needed.
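The idea of running thousands of scripted scenarios can be illustrated without any particular simulator. The sketch below is a minimal, simulator-free stand-in: it assumes a simple stopping-distance model (reaction distance plus v²/(2a)), and the `Scenario` fields, parameter ranges, and function names are all hypothetical choices made for illustration rather than part of CARLA, Gazebo, or ROS.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Scenario:
    """One randomized pedestrian-crossing scenario (all fields are illustrative)."""
    speed_mps: float         # vehicle speed when the pedestrian becomes visible
    pedestrian_gap_m: float  # distance to the pedestrian at that moment
    reaction_s: float        # assumed perception and planning latency
    decel_mps2: float        # available braking deceleration


def stopping_distance(s: Scenario) -> float:
    """Distance covered during the reaction time plus braking distance v^2 / (2a)."""
    return s.speed_mps * s.reaction_s + s.speed_mps ** 2 / (2 * s.decel_mps2)


def run_scenario(s: Scenario) -> bool:
    """Return True if the vehicle stops before reaching the pedestrian."""
    return stopping_distance(s) < s.pedestrian_gap_m


def sweep(n_runs: int, seed: int = 0) -> List[Scenario]:
    """Run n_runs randomized scenarios and return the ones that end in a collision."""
    rng = random.Random(seed)  # fixed seed so failing cases can be reproduced
    failures = []
    for _ in range(n_runs):
        s = Scenario(
            speed_mps=rng.uniform(5.0, 20.0),
            pedestrian_gap_m=rng.uniform(10.0, 60.0),
            reaction_s=rng.uniform(0.2, 1.0),
            decel_mps2=rng.uniform(3.0, 8.0),  # low values stand in for wet roads
        )
        if not run_scenario(s):
            failures.append(s)
    return failures


if __name__ == "__main__":
    failures = sweep(10_000)
    print(f"{len(failures)} of 10000 scenarios ended in a collision")
```

In a real pipeline the closed-form model would be replaced by a simulator run, but the structure (seeded scenario generation, a pass/fail criterion, and a list of failing cases to replay) would likely stay similar.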

On-road testing moves from the virtual to the tangible: driving robots undergo trials in actual environments. Teams deploy prototypes on closed tracks or public roads, equipped with sensors like LiDAR and cameras, and log their performance. Key metrics include reaction times, obstacle avoidance accuracy, and adherence to traffic rules. For example, a test might involve the robot navigating a busy intersection during rush hour, with human supervisors ready to intervene if anomalies arise. Safety protocols are crucial here, such as fail-safe mechanisms in code that trigger an emergency stop when sensors detect an imminent collision. Real-world testing exposes nuances like sensor degradation in rain or GPS signal loss, forcing engineers to refine algorithms. However, it is resource-intensive and raises ethical concerns about exposing other road users to risk; widely reported incidents involving Tesla's Autopilot underscore the importance of incremental deployment, starting in low-risk areas before scaling.
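A fail-safe of the kind described above might be sketched as follows. This is only an illustration under stated assumptions: the time-to-collision threshold, the sensor timeout, and the `RangeReading` structure are hypothetical values and names, not taken from any specific vehicle stack.

```python
from dataclasses import dataclass
from typing import Optional

TTC_THRESHOLD_S = 1.5    # assumed: brake if a collision is less than 1.5 s away
SENSOR_TIMEOUT_S = 0.2   # assumed: readings older than this count as stale


@dataclass
class RangeReading:
    """A fused obstacle measurement (hypothetical structure)."""
    distance_m: float         # gap to the nearest obstacle ahead
    closing_speed_mps: float  # positive when the gap is shrinking
    age_s: float              # time elapsed since the measurement was taken


def time_to_collision(reading: RangeReading) -> float:
    """Seconds until impact at the current closing speed; infinite if not closing."""
    if reading.closing_speed_mps <= 0:
        return float("inf")
    return reading.distance_m / reading.closing_speed_mps


def should_emergency_stop(reading: Optional[RangeReading]) -> bool:
    """Decide whether to trigger an emergency stop.

    Missing or stale data is treated as a hazard, so the system fails safe
    rather than continuing on information it cannot trust.
    """
    if reading is None or reading.age_s > SENSOR_TIMEOUT_S:
        return True
    return time_to_collision(reading) < TTC_THRESHOLD_S
```

Treating a missing reading the same as an imminent collision is a deliberate design choice here: it covers the sensor degradation and signal loss cases mentioned above, at the cost of occasional unnecessary stops.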

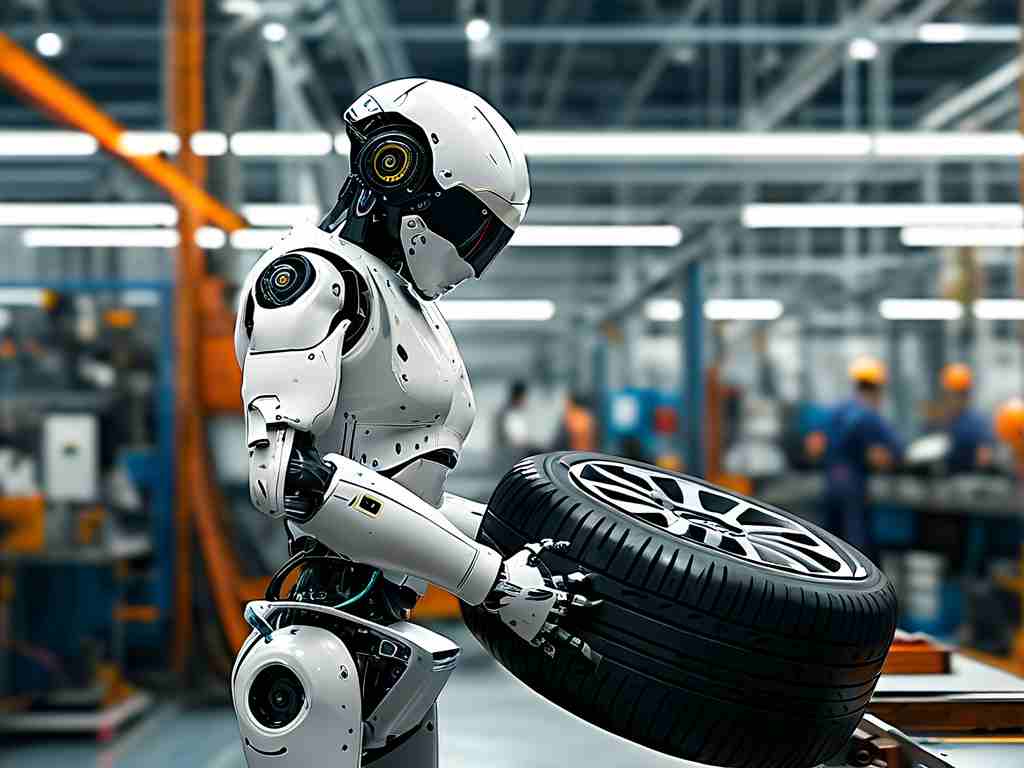

Beyond simulations and road trials, hardware-in-the-loop (HIL) testing bridges the gap by integrating physical components with virtual models. In this setup, actual vehicle parts, such as brake or steering systems, are driven by simulated inputs, allowing for dynamic stress tests. Engineers might run scripts that simulate sudden mechanical failures, such as a tire blowout, to evaluate the robot's recovery protocols. Test scripts written in C++ or MATLAB can automate these scenarios and capture real-time data on system resilience. This method is invaluable for validating safety-critical functions and confirming that redundancies engage as intended. Yet HIL testing demands sophisticated labs and expertise, and it must evolve alongside regulatory guidance and industry standards, such as those from NHTSA and ISO, including cybersecurity requirements, since attackers could exploit vulnerabilities in autonomous systems.
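The fault-injection loop behind such a test can be sketched in a few lines. The example below is written in Python for consistency with the rest of this article, although real rigs often use C++ or MATLAB as noted above. Everything in it is an assumption for illustration: `StubController` stands in for the physical device under test, and the blowout is modeled crudely as a sudden drop in one wheel's speed signal.

```python
from typing import List, Optional

WheelSpeeds = List[float]  # four wheel speeds in m/s


def nominal_speeds(step: int) -> WheelSpeeds:
    """Simulated plant output for a vehicle cruising at a steady 15 m/s."""
    return [15.0, 15.0, 15.0, 15.0]


def inject_blowout(speeds: WheelSpeeds, wheel: int, factor: float = 0.6) -> WheelSpeeds:
    """Return a copy of the signal with one wheel's speed scaled down (crude fault model)."""
    faulty = list(speeds)
    faulty[wheel] *= factor
    return faulty


class StubController:
    """Stand-in for the device under test; a real rig would wrap its bus interface."""

    def step(self, speeds: WheelSpeeds) -> bool:
        """Return True if the controller flags a wheel-speed fault."""
        mean = sum(speeds) / len(speeds)
        return any(abs(s - mean) > 0.15 * mean for s in speeds)


def run_fault_test(controller, fault_step: int, total_steps: int,
                   deadline_steps: int) -> Optional[int]:
    """Inject a blowout at fault_step and measure how quickly it is flagged.

    Returns the detection latency in steps, or None if the fault was not
    flagged within deadline_steps. Raises if a fault is flagged before injection.
    """
    for step in range(total_steps):
        speeds = nominal_speeds(step)
        if step >= fault_step:
            speeds = inject_blowout(speeds, wheel=0)
        if controller.step(speeds):
            if step < fault_step:
                raise RuntimeError(f"false positive at step {step}")
            latency = step - fault_step
            return latency if latency <= deadline_steps else None
    return None
```

Calling `run_fault_test(StubController(), fault_step=50, total_steps=100, deadline_steps=5)` exercises the loop end to end; swapping the stub for a wrapper around real hardware is where the actual HIL work would begin.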

The challenges in testing driving robot technology are multifaceted, spanning technical, ethical, and practical domains. Technically, achieving high-fidelity simulations requires immense computational power, while real-world tests face variability in traffic patterns and infrastructure. Ethically, questions arise about accountability in accidents and about how the system should decide when a collision is unavoidable: should the robot prioritize passenger safety over pedestrians? Practically, costs can soar, with extensive testing cycles delaying market entry. To overcome these, a best-practice framework involves cross-disciplinary collaboration, combining AI experts, ethicists, and policymakers to establish standardized test protocols. For instance, adopting the V-model of development pairs each design phase with a corresponding test phase, which helps catch flaws early.

In conclusion, testing driving robot technology demands a layered strategy that harnesses simulations for scalability, real-world trials for authenticity, and HIL setups for robustness. As this field advances, innovations like AI-driven anomaly detection will enhance efficiency, but human oversight remains irreplaceable. Ultimately, thorough testing not only mitigates risks but also accelerates the safe integration of autonomous systems into daily life, fostering a future where roads are smarter and accidents rarer. By embracing these methods, stakeholders can build trust and drive progress in this transformative technology.