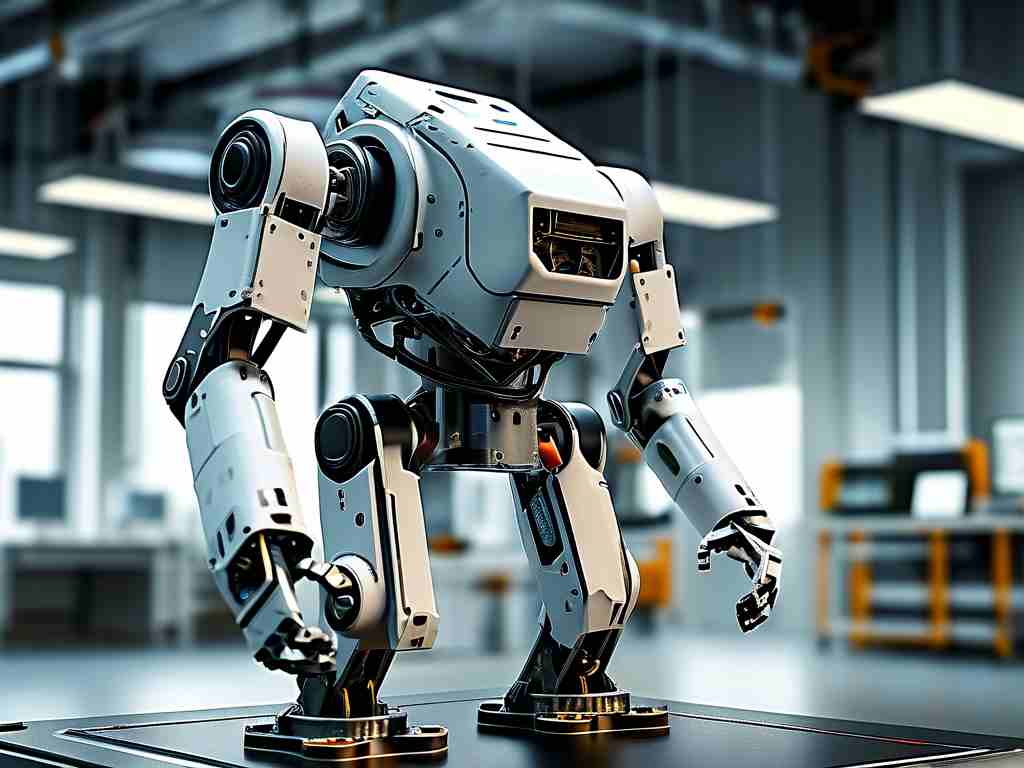

The allure of building your own robot – a unique blend of creativity, engineering, and programming – captivates countless hobbyists, students, and innovators. While accessible components and open-source software have significantly lowered the entry barrier, the journey from concept to a fully functional, autonomous machine is fraught with substantial technical challenges. Overcoming these hurdles requires not only passion but also a deep dive into multidisciplinary problem-solving. This article explores the core technical difficulties encountered in DIY robotics development.

1. Hardware Integration and Mechanical Design Complexity

The physical embodiment of a robot presents the first major hurdle. Unlike plug-and-play electronics, robots demand intricate synergy between diverse hardware components. Selecting compatible motors, motor controllers (drivers), power supplies, sensors, and structural materials requires careful consideration of voltage, current draw, torque, weight, and physical constraints. Designing the chassis and mechanisms involves principles of mechanical engineering, statics, and dynamics. Ensuring structural rigidity while minimizing weight is crucial, especially for mobile robots. Poorly designed gearboxes, linkages, or wheel mounts can lead to inefficiency, excessive wear, vibration, or catastrophic failure under load. Precise calibration of sensors (like wheel encoders for odometry) relative to the robot's center and movement axes is essential for accurate navigation and control, demanding meticulous mechanical assembly skills often underestimated by beginners.
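As a rough illustration of the sizing reasoning above, the sketch below estimates the torque each driven wheel must deliver for a given robot mass, wheel radius, target acceleration, and incline. The function name, the rolling-resistance coefficient of 0.02, and the safety factor of 2 are illustrative assumptions rather than datasheet values; a real selection should also be checked against the motor's torque-speed curve.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def required_wheel_torque_nm(mass_kg, wheel_radius_m, accel_ms2,
                             incline_deg, driven_wheels,
                             rolling_coeff=0.02, safety_factor=2.0):
    """Estimate the torque per driven wheel in newton-metres.

    rolling_coeff and safety_factor are assumed placeholder values.
    """
    theta = math.radians(incline_deg)
    # Total traction force: acceleration + gravity along the slope
    # + rolling resistance from the normal force.
    force_n = mass_kg * (accel_ms2
                         + G * math.sin(theta)
                         + rolling_coeff * G * math.cos(theta))
    # Torque = force * radius, shared across driven wheels, with margin.
    return safety_factor * force_n * wheel_radius_m / driven_wheels


if __name__ == "__main__":
    flat = required_wheel_torque_nm(2.0, 0.03, 0.5, 0.0, 2)
    ramp = required_wheel_torque_nm(2.0, 0.03, 0.5, 10.0, 2)
    print(f"flat: {flat:.4f} N*m, 10 degree ramp: {ramp:.4f} N*m")
```

Comparing the flat and inclined cases shows how quickly a modest ramp raises the torque requirement, which is one reason to size motors with margin rather than for level ground only.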

2. Sensor Fusion and Environmental Perception Ambiguity

For a robot to interact intelligently with its world, it must perceive it accurately. DIY robots commonly use sensors like ultrasonic rangefinders, infrared proximity sensors, inertial measurement units (IMUs, combining accelerometers and gyroscopes), cameras, and sometimes LiDAR. Each sensor has limitations: ultrasonic sensors suffer from specular reflections and wide beam cones that limit angular resolution; IR sensors are affected by ambient light and surface color; IMUs drift over time; cameras require significant processing power and struggle with varying lighting. The real challenge lies in sensor fusion: combining data from multiple, often noisy and conflicting sensors to build a coherent and reliable model of the environment. Implementing algorithms like Kalman or particle filters to fuse, say, IMU data with wheel odometry to estimate position (dead reckoning) is mathematically demanding, and particle filters in particular can be computationally heavy on small processors. Interpreting camera data for object recognition or navigation adds another layer of difficulty involving computer vision techniques.
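To make the fusion idea above concrete, here is a minimal sketch of a scalar (one-state) Kalman filter that tracks heading. It predicts by integrating the gyro rate and corrects with a heading derived from wheel odometry. The class name, the noise variances `q` and `r`, and the choice of odometry heading as the measurement are all assumptions for illustration; the sketch also ignores angle wrap-around, which a real implementation would need to handle.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    x: float = 0.0    # heading estimate, rad
    p: float = 1.0    # variance of the estimate
    q: float = 1e-4   # assumed process noise added per predict step
    r: float = 1e-2   # assumed variance of the odometry heading

    def predict(self, gyro_rate: float, dt: float) -> None:
        # Integrate the gyro rate; uncertainty grows with each step.
        self.x += gyro_rate * dt
        self.p += self.q

    def update(self, measured_heading: float) -> float:
        # Kalman gain: how much to trust the measurement vs. the prediction.
        k = self.p / (self.p + self.r)
        self.x += k * (measured_heading - self.x)
        self.p *= 1.0 - k
        return self.x


def fuse_headings(gyro_rates, odom_headings, dt):
    """Run predict/update over paired samples and return the estimates."""
    kf = ScalarKalman()
    estimates = []
    for rate, heading in zip(gyro_rates, odom_headings):
        kf.predict(rate, dt)
        estimates.append(kf.update(heading))
    return estimates


if __name__ == "__main__":
    # A stationary robot whose gyro has a constant 0.05 rad/s bias.
    est = fuse_headings([0.05] * 200, [0.0] * 200, dt=0.01)
    print(f"fused heading after 2 s: {est[-1]:.4f} rad")
```

In this toy case, integrating the biased gyro alone would accumulate 0.1 rad of error over two seconds, while the fused estimate stays close to zero because each odometry measurement pulls it back.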

3. Power Management and Endurance Constraints

Robots are power-hungry entities. Motors driving wheels or actuators consume significant current, especially under load or during acceleration. Sensors, microcontrollers (like Arduino), single-board computers (like Raspberry Pi), and more powerful onboard computers (like Jetson Nano) add to the demand. Designing an efficient power distribution system that minimizes voltage drops and avoids noise interference on sensitive sensor lines is critical. However, the paramount challenge is battery technology and management. Batteries add considerable weight and bulk. Balancing the need for sufficient voltage, high current delivery (C-rating), and long operational time (capacity in ampere-hours) within weight and space constraints is difficult. Estimating remaining battery life accurately and implementing safe shutdown procedures before critical voltage levels are reached requires battery management system (BMS) integration or custom monitoring circuits and software. Thermal management for batteries and high-power electronics during extended operation is another often-overlooked aspect.
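The battery trade-offs above reduce to some simple arithmetic, sketched below. The helper names, the 80% usable-capacity fraction, and the 3.3 V per-cell cutoff are illustrative assumptions (loosely typical of hobby LiPo packs); the correct limits come from the specific battery's datasheet.

```python
def max_continuous_current_a(capacity_ah, c_rating):
    """Continuous current a pack can supply: capacity (Ah) times C-rating."""
    return capacity_ah * c_rating


def estimated_runtime_h(capacity_ah, avg_current_a, usable_fraction=0.8):
    """Rough runtime in hours, assuming only part of the capacity is usable."""
    return capacity_ah * usable_fraction / avg_current_a


def should_shut_down(pack_voltage_v, cell_count, cutoff_per_cell_v=3.3):
    """True once the pack voltage reaches the assumed per-cell cutoff."""
    return pack_voltage_v <= cell_count * cutoff_per_cell_v


if __name__ == "__main__":
    # Hypothetical 3-cell, 2.2 Ah, 25C pack with a 4 A average draw.
    print(max_continuous_current_a(2.2, 25))   # amps available
    print(estimated_runtime_h(2.2, 4.0))       # hours of operation
    print(should_shut_down(9.8, 3))            # low-voltage check
```

A voltage threshold like this is only a coarse guard: pack voltage sags under load, so a monitor that samples during a motor surge may trip early unless readings are averaged or taken at low current.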

4. Real-Time Control and Software Architecture

The robot's "brain" – its software – orchestrates all hardware interactions and decision-making. This demands a robust software architecture. Key difficulties include:

- Real-time Responsiveness: Controlling motors based on sensor feedback (e.g., PID loops for speed or position control) requires precise timing. Delays and jitter can lead to instability (oscillation), poor performance, or collisions. Achieving hard real-time guarantees is challenging on general-purpose operating systems like Linux (used on Raspberry Pi), often necessitating a Real-Time Operating System (RTOS) or moving time-critical loops onto a dedicated microcontroller with carefully prioritized interrupts.

- Concurrency and Interrupt Handling: Robots must handle multiple simultaneous tasks: reading sensors, processing data, running control algorithms, making decisions, logging data. Managing these concurrent processes efficiently without conflicts (race conditions) or priority inversion requires careful programming, often involving multithreading, interrupts, and sophisticated synchronization mechanisms.

- Algorithm Implementation: Translating theoretical algorithms (like PID control, SLAM - Simultaneous Localization and Mapping, path planning with A* or D*) into efficient, bug-free code that runs reliably on resource-constrained hardware is non-trivial. Debugging complex robotic behaviors, where hardware and software faults intertwine, can be exceptionally time-consuming.
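The PID loop mentioned under real-time responsiveness can be sketched in a few lines. The version below is a minimal discrete PID with output clamping and a simple conditional-integration guard against windup, exercised against a simulated first-order motor model. The gains, the output limits of plus or minus 12, and the plant time constant are made-up values for illustration, and on real hardware `step` would need to be called at a fixed, measured interval.

```python
class PID:
    """Minimal discrete PID controller with clamped output."""

    def __init__(self, kp, ki, kd, out_min, out_max):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self._integral = 0.0
        self._prev_error = None

    def step(self, setpoint, measurement, dt):
        error = setpoint - measurement
        # No derivative on the very first call (no previous error yet).
        if self._prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self._prev_error) / dt
        self._prev_error = error

        candidate = self._integral + error * dt
        output = self.kp * error + self.ki * candidate + self.kd * derivative
        # Anti-windup: only keep the new integral if the output is unsaturated.
        if self.out_min <= output <= self.out_max:
            self._integral = candidate
        return min(max(output, self.out_min), self.out_max)


def simulate_speed_loop(steps=1000, dt=0.01, tau=0.5, setpoint=1.0):
    """Drive an assumed first-order motor model toward the setpoint."""
    pid = PID(kp=2.0, ki=5.0, kd=0.0, out_min=-12.0, out_max=12.0)
    speed = 0.0
    for _ in range(steps):
        command = pid.step(setpoint, speed, dt)
        speed += (command - speed) / tau * dt  # Euler step of the plant
    return speed


if __name__ == "__main__":
    print(f"speed after 10 s: {simulate_speed_loop():.3f}")
```

Running the loop in simulation first is a cheap way to catch sign errors and grossly unstable gains before they reach a motor driver, although the gains will still need retuning on the physical robot.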

5. Autonomy and Decision-Making Under Uncertainty

Moving beyond remote control to true autonomy is the ultimate goal for many builders, but it's also the most challenging. This involves:

- Localization: Determining the robot's precise position and orientation within an environment, especially one that changes or lacks distinct landmarks, using imperfect sensor data (the core of SLAM).

- Mapping: Building an accurate representation of the environment while simultaneously localizing within it.

- Path Planning and Obstacle Avoidance: Calculating an optimal or feasible path from point A to B, dynamically replanning when encountering unforeseen obstacles, using sensor data that might be incomplete or noisy. Algorithms like potential fields or dynamic window approaches need careful tuning.

- Task Planning and Execution: Sequencing actions to achieve higher-level goals, handling failures gracefully (e.g., what to do if a path is blocked or an object isn't found). Implementing robust state machines or behavior trees is complex.

- Machine Learning Integration: While powerful, deploying ML models (for vision, navigation policy) on embedded hardware involves optimization for size and speed, managing training data collection specific to the robot's context, and ensuring reliable performance outside the training environment.
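As one concrete instance of the path-planning item in the list above, the sketch below runs A* search on a small occupancy grid. Note that this is a global grid planner, not the potential-field or dynamic-window approaches named in that item; it is used here only because it is compact. The grid encoding (0 for free, 1 for blocked), 4-connected movement, and unit step cost are assumptions for illustration.

```python
import heapq


def astar(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance: admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was found already
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(
                        open_heap,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None  # goal unreachable


if __name__ == "__main__":
    grid = [
        [0, 0, 0, 0],
        [1, 1, 1, 0],
        [0, 0, 0, 0],
    ]
    print(astar(grid, (0, 0), (2, 0)))
```

Replanning when a new obstacle appears can be approximated by marking the newly blocked cells in the grid and calling `astar` again from the robot's current cell, which is simple but may be too slow on large maps.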

The technical challenges in DIY robotics are significant and interconnected. Success hinges on a systems engineering approach, recognizing that a weakness in one area (e.g., noisy sensors, sluggish control loops, or an unstable power supply) cascades into failures elsewhere. While demanding, tackling these difficulties is highly rewarding. The field benefits greatly from vibrant open-source communities (e.g., ROS, the Robot Operating System), detailed project logs, affordable development boards, and accessible simulation tools (like Gazebo). By understanding and systematically addressing these core technical hurdles (hardware integration, sensor fusion, power management, real-time software, and autonomy algorithms), DIY roboticists transform ambitious ideas into tangible, intelligent machines, pushing the boundaries of what's possible in the garage workshop. The journey is arduous, but each obstacle overcome represents a real gain in technical mastery.