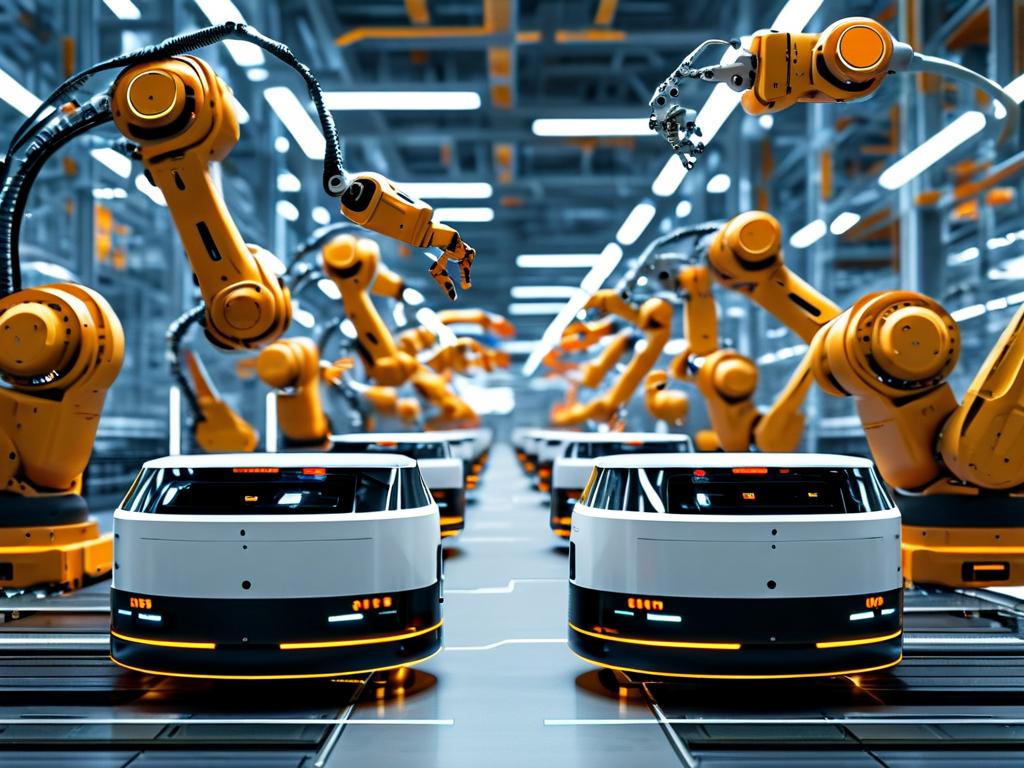

The rapid evolution of autonomous mobile robots (AMRs) has transformed industries ranging from logistics to healthcare. Unlike traditional automated guided vehicles (AGVs), AMRs operate without predefined paths, relying on advanced technologies to navigate dynamic environments. This article explores the technical foundations of AMRs, focusing on their navigation systems, sensor integration, and decision-making algorithms.

Navigation and Localization

At the heart of AMR functionality is simultaneous localization and mapping (SLAM). SLAM enables robots to create real-time maps of unfamiliar spaces while tracking their position within those maps. Using lidar, cameras, or ultrasonic sensors, AMRs collect range data, typically as point clouds from lidar or depth cameras, to identify obstacles and open pathways. For instance, a robot in a warehouse might combine lidar scans with inertial measurement unit (IMU) data to correct positional drift, which helps it hold centimeter-level accuracy even during rapid movements.
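As a rough illustration of drift correction, the sketch below blends a gyro-integrated heading with an absolute heading from lidar scan matching, using a simple complementary filter. The function name `fuse_heading`, the blend weight `alpha`, and the assumption that a scan matcher supplies a heading estimate are all choices made for this example rather than details of any particular AMR stack. A production SLAM system would more likely use a Kalman-style or pose-graph estimator.

```python
from typing import List, Optional, Sequence


def fuse_heading(
    gyro_rates: Sequence[float],               # rad/s, one sample per step
    lidar_headings: Sequence[Optional[float]], # rad, None when no scan match
    dt: float,                                 # step length in seconds
    alpha: float = 0.98,                       # weight given to the gyro prediction
    heading0: float = 0.0,
) -> List[float]:
    """Complementary filter: trust the gyro short-term, the lidar long-term.

    Assumes small angles, so wrap-around at +/- pi is not handled.
    """
    heading = heading0
    fused = []
    for rate, lidar in zip(gyro_rates, lidar_headings):
        predicted = heading + rate * dt        # dead-reckoning step (drifts)
        if lidar is None:
            heading = predicted                # no correction available
        else:
            # Pull the prediction toward the drift-free lidar heading.
            heading = alpha * predicted + (1.0 - alpha) * lidar
        fused.append(heading)
    return fused


# A stationary robot whose gyro has a constant 0.01 rad/s bias.
steps, dt, bias = 1000, 0.01, 0.01
raw_drift = bias * dt * steps                  # heading error from the gyro alone
corrected = fuse_heading([bias] * steps, [0.0] * steps, dt)
```

With the gyro alone the heading error grows without bound, whereas the fused estimate settles at a small constant offset because every lidar heading pulls it back toward the true value.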

Sensor Fusion and Environmental Awareness

Modern AMRs integrate multiple sensor modalities to enhance reliability. A typical setup includes 3D depth cameras for object recognition, torque sensors for collision detection, and infrared arrays for edge detection. Sensor fusion algorithms, such as Kalman filters, merge these inputs into a cohesive environmental model. Consider a hospital delivery AMR: it uses thermal sensors to avoid human contact while leveraging RFID tags to locate specific rooms, demonstrating how layered sensing adapts to diverse scenarios.
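To make the idea of sensor fusion concrete, here is a minimal one-dimensional Kalman filter that merges distance readings from two sensors with different noise levels. The class `Kalman1D`, the helper `fuse`, and the example variances are illustrative assumptions. Real AMR estimators track multi-dimensional state (pose, velocity) and use a motion model rather than the static-state model assumed here.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Kalman1D:
    x: float          # state estimate, e.g. distance to an obstacle in metres
    p: float          # variance of the estimate
    q: float = 0.0    # process noise variance added at each predict step

    def predict(self) -> None:
        # Static-state model: the estimate is unchanged, uncertainty grows by q.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        # z is a measurement with variance r.
        k = self.p / (self.p + r)      # Kalman gain: how much to trust z
        self.x += k * (z - self.x)     # move the estimate toward the measurement
        self.p *= (1.0 - k)            # uncertainty shrinks after every update


def fuse(readings: Iterable[Tuple[float, float]],
         x0: float = 0.0, p0: float = 1e6) -> Tuple[float, float]:
    """Fuse (measurement, variance) pairs; a large p0 means 'no prior knowledge'."""
    kf = Kalman1D(x=x0, p=p0)
    for z, r in readings:
        kf.predict()
        kf.update(z, r)
    return kf.x, kf.p


# Depth camera reads 2.0 m (variance 0.04); infrared reads 2.2 m (variance 0.01).
estimate, variance = fuse([(2.0, 0.04), (2.2, 0.01)])
```

The fused estimate lands closer to the lower-variance reading, and its variance is smaller than that of either sensor alone, which is the practical payoff of combining modalities.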

Path Planning and Dynamic Adaptation

Pathfinding in AMRs relies on algorithms like A* or Dijkstra’s for global route optimization, complemented by local planners like the dynamic window approach (DWA) for real-time obstacle avoidance. When an unexpected barrier appears, say a fallen box in a factory aisle, the robot recalculates its path within milliseconds. Some systems even employ machine learning to predict traffic patterns, reducing computational load during peak operational hours.
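The global-planning step can be sketched with a small A* search on an occupancy grid. The grid encoding (`#` for blocked cells), the four-connected moves, and the function name `astar` are assumptions for this example. Real planners usually work on costmaps with robot footprints and pass the result to a local planner such as DWA.

```python
import heapq
from typing import List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column)


def astar(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid where '#' marks a blocked cell."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: Cell) -> int:
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]   # entries are (f, g, cell)
    best_g = {start: 0}
    came_from = {}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:             # walk back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g[cell]:
            continue                             # stale heap entry, skip it
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + heuristic(nxt), ng, nxt))
    return None                                  # goal unreachable


# Two aisles joined at both ends by cross-aisles.
aisle = [".....", ".###.", "....."]
route = astar(aisle, (0, 2), (2, 2))

# A box falls into the right-hand cross-aisle: mark it blocked and replan.
blocked = [".....", ".####", "....."]
detour = astar(blocked, (0, 2), (2, 2))
```

Replanning here simply means rerunning the search on the updated grid. Incremental planners avoid that full recomputation, but the repeated search is enough to show the idea.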

Control Systems and Actuation

Precision movement in AMRs is governed by closed-loop control systems. Brushless DC motors paired with optical encoders let the controller adjust torque and speed at update rates of around 100 Hz, enabling smooth acceleration curves. Omnidirectional wheels, such as Mecanum designs, allow lateral movement, which is critical in tight spaces. For example, an e-commerce fulfillment robot might favor straight-line, differential-style driving for efficiency on long runs but switch to holonomic motion when maneuvering around narrow inventory racks.
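A closed-loop speed controller of this kind can be sketched as a proportional-integral (PI) loop running at 100 Hz. Everything specific here is an assumption for illustration: the function name `simulate_speed_loop`, the gains, and the first-order motor model standing in for a real motor and encoder.

```python
from typing import List


def simulate_speed_loop(
    target: float,        # desired wheel speed, rad/s
    kp: float = 2.0,      # proportional gain (illustrative value)
    ki: float = 10.0,     # integral gain (illustrative value)
    dt: float = 0.01,     # 100 Hz control period
    steps: int = 300,     # 3 seconds of simulated time
    gain: float = 1.0,    # motor steady-state gain (assumed model)
    tau: float = 0.1,     # motor time constant in seconds (assumed model)
) -> List[float]:
    """Run a PI speed loop against a simulated first-order motor."""
    speed = 0.0
    integral = 0.0
    history = []
    for _ in range(steps):
        error = target - speed                 # encoder feedback closes the loop
        integral += error * dt                 # accumulated error removes offset
        command = kp * error + ki * integral   # PI control law
        # First-order motor response, integrated with forward Euler.
        speed += dt * (gain * command - speed) / tau
        history.append(speed)
    return history


speeds = simulate_speed_loop(target=1.5)
```

The integral term is what drives the steady-state error toward zero. On real hardware the gains would be tuned against the actual motor, and the command would be clamped to the driver's limits.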

Communication and Fleet Coordination

Industrial AMR fleets operate via centralized or decentralized architectures. In a decentralized system, robots negotiate pathways over mesh networks, using middleware such as the DDS layer that underpins ROS 2. This setup avoids single points of failure: if one robot malfunctions, the others autonomously reroute. A case study from an automotive plant showed a 40% efficiency gain when AMRs shared real-time throughput data via 5G private networks, minimizing assembly line bottlenecks.

Energy Management and Sustainability

AMRs prioritize energy efficiency through adaptive power systems. Lithium iron phosphate (LiFePO4) batteries offer high cycle counts, while wireless charging pads enable opportunistic top-ups during idle periods. Advanced models use predictive analytics to schedule charging based on task queues. A logistics company reported a 30% reduction in energy costs after deploying AMRs with regenerative braking, which converts kinetic energy during deceleration into reusable power.
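Queue-based charge scheduling can be illustrated with a very small planner that walks the task queue and reports where the battery would dip below a safety reserve. The function name `first_charge_point`, the watt-hour figures, and the 20% reserve are hypothetical. A real system would estimate task energy from route length, payload, and battery health.

```python
from typing import Optional, Sequence


def first_charge_point(
    soc_wh: float,                  # energy currently in the battery, Wh
    capacity_wh: float,             # usable battery capacity, Wh
    task_costs_wh: Sequence[float], # estimated energy per queued task, Wh
    reserve_frac: float = 0.2,      # never plan to go below this fraction
) -> Optional[int]:
    """Index of the first task the robot should charge before, or None."""
    reserve = reserve_frac * capacity_wh
    remaining = soc_wh
    for index, cost in enumerate(task_costs_wh):
        if remaining - cost < reserve:
            return index            # this task would breach the reserve
        remaining -= cost
    return None                     # the whole queue fits within the budget


# 500 Wh left in a 1000 Wh pack, three tasks queued.
charge_before = first_charge_point(500.0, 1000.0, [100.0, 150.0, 120.0])
```

In this example the first two tasks leave 250 Wh, and the third would drop the pack to 130 Wh, below the 200 Wh reserve, so the planner schedules a top-up before the third task.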

Challenges and Future Directions

Despite advancements, AMRs face hurdles like handling reflective surfaces or low-light conditions. Emerging solutions include terahertz imaging for material penetration and neuromorphic processors for low-power AI inference. Researchers are also exploring swarm intelligence paradigms, where AMRs collaboratively solve tasks without central oversight—a concept tested in disaster response simulations with promising results.

In conclusion, AMR technology represents a synergy of robotics, AI, and IoT, enabling machines to perceive, decide, and act autonomously. As compute power grows and sensor costs decline, these systems will increasingly permeate sectors demanding flexibility, safety, and efficiency. Understanding their technical underpinnings is essential for leveraging their full potential in tomorrow’s automated landscapes.