The rapid evolution of artificial intelligence (AI) has transformed industries, enabling businesses to apply predictive analytics, natural language processing, and machine learning at unprecedented scale. However, as organizations adopt AI more widely, challenges related to scalability, security, and operational efficiency have emerged. One approach gaining traction is local AI deployment combined with automated deployment pipelines. This article explores how automating the deployment of AI models on local infrastructure addresses these challenges and opens new opportunities for enterprises.

The Rise of Local AI Deployment

Local deployment refers to hosting AI models and workflows on an organization's own servers or edge devices rather than relying solely on cloud-based solutions. This approach offers distinct advantages:

- Data Privacy and Compliance: Industries like healthcare, finance, and government require strict control over sensitive data. Local deployment keeps that data on the organization's own infrastructure, which simplifies compliance with regulations like GDPR and HIPAA.

- Reduced Latency: Real-time applications, such as autonomous systems or industrial IoT, must make decisions within tight time budgets. Local deployment reduces latency by processing data close to its source, avoiding a network round trip to a remote data center.

- Cost Efficiency: While cloud services offer flexibility, recurring usage costs can escalate. For high-volume, predictable workloads, local deployment can deliver long-term savings once the upfront hardware investment is recovered.
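The cost argument comes down to a simple break-even calculation. The sketch below assumes a one-time hardware cost plus a flat monthly operating cost for local infrastructure, compared against a flat monthly cloud bill. The function name and all figures are hypothetical, and a real comparison would also need to account for depreciation, staffing, and utilization.

```python
from typing import Optional


def break_even_months(hardware_cost: float,
                      local_monthly_opex: float,
                      cloud_monthly_cost: float) -> Optional[float]:
    """Return the month at which cumulative local cost equals cumulative cloud cost.

    Local cost after m months:  hardware_cost + local_monthly_opex * m
    Cloud cost after m months:  cloud_monthly_cost * m
    Setting them equal gives    m = hardware_cost / (cloud_monthly_cost - local_monthly_opex)

    Returns None when local operating costs are not lower than the cloud bill,
    because the upfront investment is then never recovered.
    """
    monthly_savings = cloud_monthly_cost - local_monthly_opex
    if monthly_savings <= 0:
        return None
    return hardware_cost / monthly_savings


# Hypothetical figures for illustration only.
months = break_even_months(hardware_cost=120_000,
                           local_monthly_opex=4_000,
                           cloud_monthly_cost=14_000)
print(f"Break-even after {months:.1f} months")  # Break-even after 12.0 months
```

A steady, high-volume workload keeps the monthly cloud bill high and predictable, which is why the break-even point arrives sooner for the workloads described above.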

Despite these benefits, manual deployment processes often hinder scalability. Configuring servers, managing dependencies, and updating models across distributed systems are time-consuming and error-prone. This is where automation becomes indispensable.

Automating Deployment: From Concept to Reality

Automated deployment streamlines the process of delivering AI models from development to production. Key components include:

- Containerization: Tools like Docker package AI models, libraries, and environments into portable containers, ensuring consistency across development, testing, and production stages.

- Orchestration: Platforms like Kubernetes automate the scaling, load balancing, and failover of containerized AI applications, even in hybrid cloud-local environments.

- CI/CD Pipelines: Continuous integration and continuous deployment (CI/CD) frameworks, such as Jenkins or GitLab CI, automate the testing and release of every model update. For example, a retrained model can be automatically validated and rolled out to edge devices without downtime.
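The "validate, then roll out without downtime" step can be sketched in plain Python. This is not how Jenkins or GitLab CI implement it. It is a minimal illustration that assumes a hypothetical `Device` record, an accuracy-based validation gate, and a caller-supplied health check. Devices are updated in small batches so the rest keep serving, and a failed health check rolls every touched device back.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Device:
    """A hypothetical edge device serving one model version."""
    name: str
    model_version: str


def passes_validation(candidate_acc: float, baseline_acc: float,
                      tolerance: float = 0.0) -> bool:
    """Validation gate: promote the retrained model only if its holdout
    accuracy is no worse than production's, minus an allowed tolerance."""
    return candidate_acc >= baseline_acc - tolerance


def rolling_update(devices: List[Device], new_version: str, batch_size: int,
                   health_check: Callable[[Device], bool]) -> bool:
    """Update devices a few at a time so the rest keep serving traffic.

    If any device in a batch fails its health check, every device updated so
    far is reverted to its previous version and the rollout stops.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    touched: List[Tuple[Device, str]] = []  # (device, version before update)
    for start in range(0, len(devices), batch_size):
        batch = devices[start:start + batch_size]
        for device in batch:
            touched.append((device, device.model_version))
            device.model_version = new_version
        if not all(health_check(device) for device in batch):
            for device, previous in touched:  # roll back everything changed
                device.model_version = previous
            return False
    return True


fleet = [Device(f"edge-{i}", "v1") for i in range(5)]
if passes_validation(candidate_acc=0.93, baseline_acc=0.91):
    ok = rolling_update(fleet, "v2", batch_size=2, health_check=lambda d: True)
    print(ok, [d.model_version for d in fleet])  # True ['v2', 'v2', 'v2', 'v2', 'v2']
```

In a Kubernetes-based setup, the batching would normally be declared rather than coded: a Deployment's `RollingUpdate` strategy, tuned with `maxUnavailable` and `maxSurge` and combined with readiness probes, gives comparable behavior.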

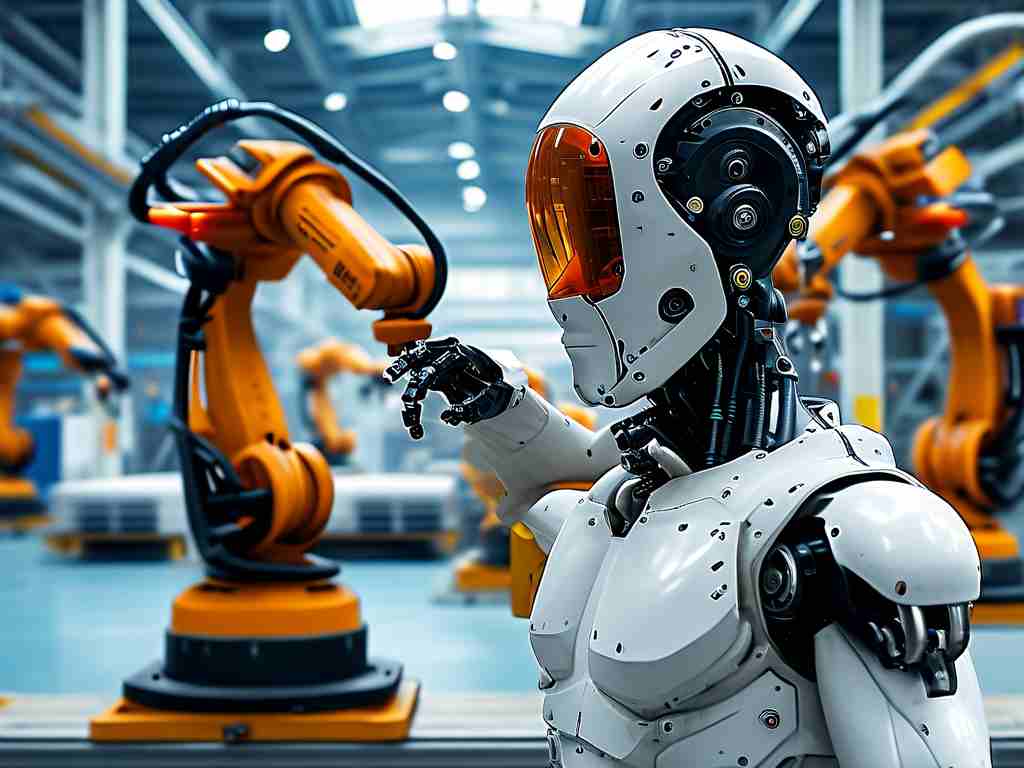

A case study from the manufacturing sector illustrates this synergy. An automotive company deployed computer vision models locally on factory robots to detect defects in real time. By automating deployment via Kubernetes, it reduced setup time by 70% and achieved zero-downtime updates during production hours.

Overcoming Challenges in Automated Local Deployment

While automation offers transformative potential, organizations must address several hurdles:

- Infrastructure Complexity: Hybrid environments (combining local and cloud resources) require robust networking and monitoring. Solutions like Terraform for infrastructure-as-code (IaC) help standardize configurations.

- Security Risks: Automated pipelines can become attack vectors. Signed container images, role-based access control (RBAC), and vulnerability scanners like Clair reduce these risks.

- Skill Gaps: Teams need expertise in DevOps, MLOps, and cybersecurity. Partnerships with AI platform providers or upskilling programs are critical.
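Two of the security controls above can be illustrated together: an RBAC check on pipeline actions, and a digest check confirming that the artifact being deployed is byte-for-byte the one the build stage produced. This is a simplified sketch with hypothetical roles and actions. A digest proves integrity but not origin. Real image signing relies on asymmetric keys through tools such as Sigstore's cosign, and in practice RBAC would be enforced by Kubernetes or the CI platform, not by application code.

```python
import hashlib
import hmac
from typing import Dict, Set

# Hypothetical roles and pipeline actions, for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "data-scientist": {"submit_model"},
    "ml-engineer": {"submit_model", "deploy_staging"},
    "release-manager": {"deploy_staging", "deploy_production"},
}


def is_allowed(role: str, action: str) -> bool:
    """RBAC check: unknown roles get no permissions (deny by default)."""
    return action in ROLE_PERMISSIONS.get(role, set())


def sha256_digest(artifact: bytes) -> str:
    """Content digest in the 'sha256:<hex>' form used for container image digests."""
    return "sha256:" + hashlib.sha256(artifact).hexdigest()


def authorize_deploy(role: str, action: str, artifact: bytes,
                     pinned_digest: str) -> bool:
    """Allow a deployment only if the role permits the action AND the
    artifact's digest matches the one recorded when it was built."""
    if not is_allowed(role, action):
        return False
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(sha256_digest(artifact), pinned_digest)


model_blob = b"model-weights-v2"    # stand-in for a real image or model file
pinned = sha256_digest(model_blob)  # recorded by the build stage
print(authorize_deploy("release-manager", "deploy_production", model_blob, pinned))  # True
print(authorize_deploy("data-scientist", "deploy_production", model_blob, pinned))   # False
print(authorize_deploy("release-manager", "deploy_production", b"tampered", pinned)) # False
```

Denying by default and checking both conditions before deployment means a compromised low-privilege account and a tampered artifact are each stopped on their own.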

The Road Ahead: Intelligent Automation and Edge AI

The future of local AI deployment lies in smarter automation. Emerging trends include:

- AI-Driven Orchestration: Systems that dynamically allocate resources based on workload predictions.

- Federated Learning: Automating model training across decentralized edge devices, where only model updates are shared and raw data stays on each device.

- Self-Healing Systems: Deployments that automatically detect and resolve issues, such as model drift or hardware failures.
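The privacy property of federated learning comes from the aggregation step: devices send trained parameters, not data. The sketch below shows that step in the style of Federated Averaging (FedAvg), where each device's parameters are weighted by how many local examples it trained on. Plain Python lists stand in for model tensors. Local training, communication, and secure aggregation are omitted, and the function name is hypothetical.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """FedAvg-style aggregation of flattened model parameters.

    Each client's parameter vector is weighted by the number of local training
    examples it used. Only these parameters reach the server; the raw
    training data never leaves the devices.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("client sizes must sum to a positive number")
    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, n in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same parameter shape")
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total
    return global_weights


# Two hypothetical edge devices after one round of local training.
print(federated_average([[1.0, 2.0], [3.0, 4.0]], [100, 300]))  # [2.5, 3.5]
```

The device with three times as much data pulls the global model three times as strongly. An automated pipeline would repeat this round on a schedule and then redistribute the averaged parameters to the fleet.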

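Companies like NVIDIA and Intel are building edge AI tooling that supports this kind of automation. NVIDIA's Fleet Command is a managed platform for deploying and orchestrating AI applications at the edge, while Intel's OpenVINO toolkit optimizes models for efficient inference, primarily on Intel hardware. These platforms simplify managing distributed AI workloads while maintaining security.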

Automating local AI deployment is no longer optional for enterprises seeking competitive agility. By combining containerization, orchestration, and CI/CD, organizations can deploy robust, scalable, and secure AI solutions faster and more reliably than manual processes allow. As edge computing and intelligent automation evolve, businesses that invest in these technologies today will lead tomorrow's data-driven economy. The key lies in balancing innovation with risk management, so that automation serves as a bridge to the future rather than a bottleneck.