In the digital age, computers have become indispensable tools, and their efficiency hinges on how they manage resources. Among these resources, memory space and address management form the bedrock of computational operations. This article explores the intricacies of computer memory architecture, the role of memory addresses, and how modern systems optimize these components to deliver seamless performance.

1. The Concept of Memory Space

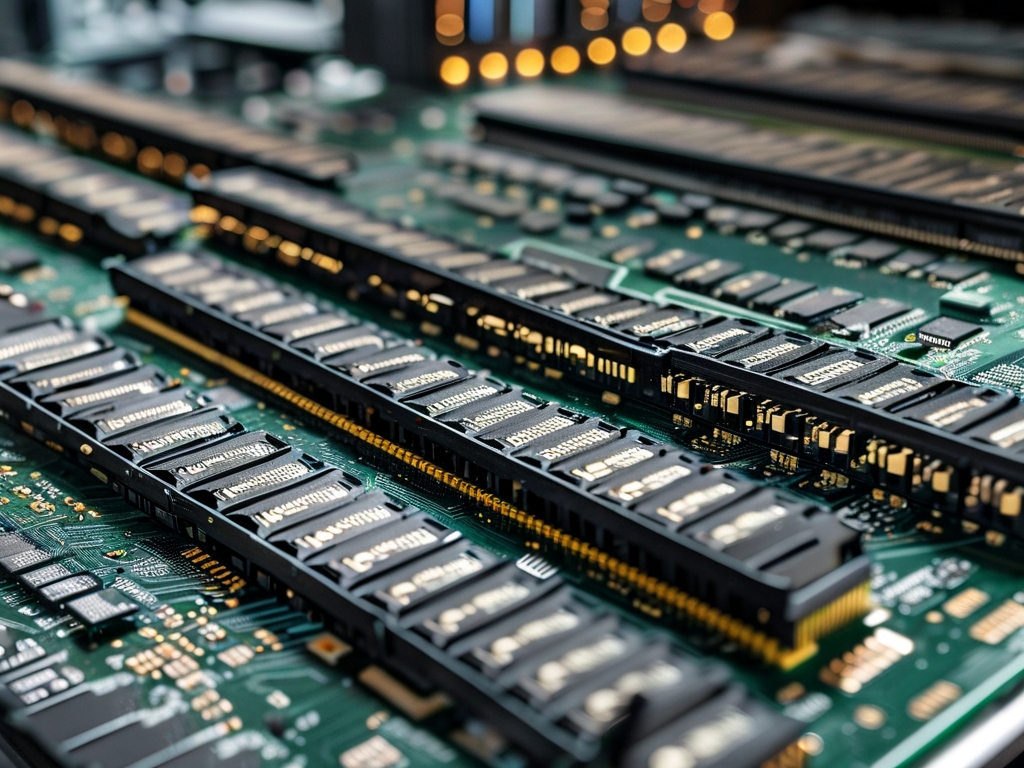

Computer memory refers to the physical or virtual storage that holds data and instructions, either temporarily or permanently. It falls into two primary types: volatile memory (e.g., RAM), which loses its contents when power is removed, and non-volatile memory (e.g., ROM, SSDs), which retains them.

Modern computers use a hierarchical memory structure to balance speed, cost, and capacity:

- Registers: The fastest and smallest memory units embedded in CPUs.

- Cache: L1, L2, and L3 caches act as buffers between registers and RAM.

- RAM (Random Access Memory): Primary workspace for active programs.

- Secondary Storage: Hard drives or SSDs for long-term data retention.

This hierarchy ensures frequently accessed data stays in faster memory layers, reducing latency and improving efficiency.
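The payoff of the hierarchy can be quantified with the standard average memory access time (AMAT) formula: each level's miss penalty is the average time to reach everything behind it. The Python sketch below is a minimal illustration; the function name is hypothetical, and the latencies and miss rates in the demo are placeholders, not figures for any real processor.

```python
# Average memory access time (AMAT) for a multi-level hierarchy:
#   AMAT = hit_L1 + miss_rate_L1 * (hit_L2 + miss_rate_L2 * (... + memory_time))
# Numbers used below are illustrative placeholders, not measurements.

def amat(levels: list[tuple[float, float]], memory_time: float) -> float:
    """levels: (hit_time, miss_rate) for each cache level, fastest first.
    memory_time: main-memory access time, paid when every cache level misses."""
    time = memory_time
    # Fold from the slowest cache outward: a level's miss penalty is the
    # average access time of all the levels behind it.
    for hit_time, miss_rate in reversed(levels):
        if not 0.0 <= miss_rate <= 1.0:
            raise ValueError("miss_rate must be between 0 and 1")
        time = hit_time + miss_rate * time
    return time

if __name__ == "__main__":
    hypothetical = [(1.0, 0.10), (10.0, 0.20)]  # (ns, miss fraction) for L1, L2
    print(amat(hypothetical, memory_time=100.0))  # 1 + 0.1 * (10 + 0.2 * 100)
    print(amat([], memory_time=100.0))            # no cache: every access goes to RAM
```

Even with a modest L1 hit rate, most accesses never pay the full main-memory latency, which is why keeping frequently used data in the upper layers matters so much.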

2. Memory Addresses: The Navigation System

In a byte-addressable system, every byte of memory has a unique identifier called a memory address. Addresses work like postal codes, letting the CPU locate and retrieve data precisely. Two kinds of address matter:

- Physical Addresses: Direct references to hardware memory locations.

- Logical Addresses: Addresses used by a running program (also called virtual addresses), which the Memory Management Unit (MMU) translates into physical addresses.

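For example, a 32-bit address ranges from 0x00000000 to 0xFFFFFFFF, so it can reach 2^32 bytes, or 4 GB (strictly, 4 GiB). A 64-bit address could in principle reach 2^64 bytes (16 EiB), but practical implementations use only part of that range: current x86-64 processors implement 48-bit virtual addresses (256 TiB), or 57-bit addresses when five-level paging is enabled.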
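The arithmetic behind these limits is simply 2^n bytes for an n-bit byte address. The short Python sketch below (function names are hypothetical) computes the addressable size for 32-bit addresses, the 48- and 57-bit virtual address widths used by current x86-64 processors, and the full 64-bit range, expressed in binary units.

```python
# Number of bytes addressable with an n-bit byte address, in binary units.

UNITS = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]

def addressable_bytes(address_bits: int) -> int:
    """Each distinct address names one byte, so n bits cover 2**n bytes."""
    return 2 ** address_bits

def human(size: int) -> str:
    """Express a power-of-two byte count in the largest whole binary unit."""
    unit = 0
    while size >= 1024 and size % 1024 == 0 and unit < len(UNITS) - 1:
        size //= 1024
        unit += 1
    return f"{size} {UNITS[unit]}"

if __name__ == "__main__":
    for bits in (32, 48, 57, 64):
        top = addressable_bytes(bits) - 1  # highest valid address
        print(f"{bits}-bit: 0x0 .. 0x{top:X} -> {human(addressable_bytes(bits))}")
```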

3. Address Management Mechanisms

Efficient address management prevents conflicts and optimizes resource allocation. Key techniques include:

a. Virtual Memory

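Virtual memory gives each process its own private address space and creates the illusion of more memory than is physically installed by using secondary storage as an extension of RAM. When RAM fills up, the operating system moves less recently used pages out to swap space on disk (called a page file on Windows). The MMU maps virtual addresses to physical ones, which isolates processes from one another and lets many programs run concurrently even when their combined memory needs exceed physical RAM.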
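Which pages get swapped out is decided by a page-replacement policy. The Python sketch below assumes least-recently-used (LRU) replacement, one common textbook policy, and counts page faults for a sequence of page references; the function name and parameters are hypothetical.

```python
from collections import OrderedDict

def simulate_lru(references: list[int], num_frames: int) -> tuple[int, list[int]]:
    """Simulate demand paging with LRU page replacement.

    references: virtual page numbers touched by a program, in order.
    num_frames: number of physical page frames available in RAM.
    Returns (page_fault_count, pages_evicted_to_swap_in_order).
    """
    if num_frames < 1:
        raise ValueError("need at least one frame")
    resident = OrderedDict()  # page -> None, least recently used first
    faults = 0
    evicted: list[int] = []
    for page in references:
        if page in resident:
            resident.move_to_end(page)  # hit: mark as most recently used
            continue
        faults += 1  # page fault: the page must be loaded from disk
        if len(resident) == num_frames:
            victim, _ = resident.popitem(last=False)  # evict the LRU page
            evicted.append(victim)  # a real OS writes it out only if modified
        resident[page] = None
    return faults, evicted

if __name__ == "__main__":
    print(simulate_lru([1, 2, 1, 3, 1, 2], num_frames=2))
```

Exact LRU is costly to track in hardware, so real kernels typically use cheaper approximations such as the clock (second-chance) algorithm.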

b. Memory Paging and Segmentation

- Paging: Divides memory into fixed-size blocks (pages) and maps them to physical frames of the same size. This eliminates external fragmentation, at the cost of some internal fragmentation in partially filled pages.

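- Segmentation: Splits memory into variable-sized segments based on logical units (e.g., code, stack, heap), each described by a base address and a length limit. Because segments vary in size, this scheme is prone to external fragmentation.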

Some architectures, notably 32-bit x86, combine the two. Modern 64-bit operating systems rely almost entirely on paging and use a flat segmentation model.
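Both translations can be sketched in a few lines of Python. The sketch assumes 4 KiB pages and represents the page table and segment table as plain dictionaries; every name in it is hypothetical, and real MMUs walk multi-level tables in hardware rather than consulting a flat map.

```python
PAGE_SIZE = 4096  # assumed 4 KiB pages, a common default
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 12 offset bits within a page

class PageFault(Exception):
    """Raised when a virtual page has no mapping in the page table."""

class SegmentationFault(Exception):
    """Raised when an offset falls outside its segment's limit."""

def translate_paged(vaddr: int, page_table: dict[int, int]) -> int:
    """Split vaddr into (virtual page number, offset) and map the page to a frame."""
    vpn = vaddr >> OFFSET_BITS
    offset = vaddr & (PAGE_SIZE - 1)
    if vpn not in page_table:
        raise PageFault(f"no mapping for virtual page {vpn}")
    frame = page_table[vpn]
    return (frame << OFFSET_BITS) | offset  # offset is unchanged by translation

def translate_segmented(segment: str, offset: int,
                        segment_table: dict[str, tuple[int, int]]) -> int:
    """Look up (base, limit) for the segment, bounds-check, and add the base."""
    base, limit = segment_table[segment]
    if not 0 <= offset < limit:
        raise SegmentationFault(f"offset {offset:#x} outside segment {segment!r}")
    return base + offset

if __name__ == "__main__":
    page_table = {0: 5, 1: 2}  # virtual page -> physical frame (hypothetical)
    print(hex(translate_paged(0x1234, page_table)))
    segments = {"code": (0x10000, 0x4000)}  # name -> (base, limit) (hypothetical)
    print(hex(translate_segmented("code", 0x100, segments)))
```

On real hardware the MMU caches recent page translations in a translation lookaside buffer (TLB), so most lookups avoid walking the page table at all.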

c. Dynamic Memory Allocation

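Programs request memory at runtime through library routines such as C's malloc or the C++ new operator. These are not system calls themselves: a user-space allocator manages the heap, asks the OS for larger regions when needed (for example via brk or mmap on Unix-like systems), and tracks free space with placement strategies such as first-fit or best-fit. A poor placement strategy fragments the heap, and failing to release memory that is no longer needed causes memory leaks; both degrade performance.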
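The two placement strategies can be compared on a toy free list. The Python sketch below assumes the free list is a list of (start, size) holes and that the function name is hypothetical; it omits block headers, alignment, and coalescing of adjacent holes on free, all of which real allocators handle.

```python
from typing import Optional

Hole = tuple[int, int]  # (start address, size in bytes) of a free block

def allocate(holes: list[Hole], size: int, strategy: str = "first") -> Optional[int]:
    """Carve `size` bytes out of the free list `holes` (modified in place).

    strategy="first": take the first hole that is large enough.
    strategy="best":  take the smallest hole that is large enough.
    Returns the start address, or None if no single hole fits.
    """
    if size <= 0:
        raise ValueError("size must be positive")
    if strategy == "first":
        # Stop scanning at the first hole that fits.
        index = next((i for i, (_, s) in enumerate(holes) if s >= size), None)
    elif strategy == "best":
        # Must examine every hole to find the tightest fit.
        fits = [i for i, (_, s) in enumerate(holes) if s >= size]
        index = min(fits, key=lambda i: holes[i][1]) if fits else None
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    if index is None:
        return None  # can happen even when total free space >= size
    start, hole_size = holes[index]
    if hole_size == size:
        del holes[index]  # exact fit: the hole disappears
    else:
        holes[index] = (start + size, hole_size - size)  # shrink the hole
    return start

if __name__ == "__main__":
    for strategy in ("first", "best"):
        free = [(0, 100), (200, 30), (300, 60)]
        print(strategy, allocate(free, 50, strategy), free)
```

Best-fit tends to leave small leftover slivers, while first-fit is cheaper per request. A None result when the holes together would suffice but none is individually large enough is external fragmentation in miniature.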

4. Challenges in Memory Management

Despite advancements, memory systems face persistent challenges:

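- Fragmentation: Free memory split into noncontiguous pieces too small to satisfy requests (external fragmentation), or unused space inside allocated blocks (internal fragmentation).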

- Security Vulnerabilities: Buffer overflows and dangling pointers can leak sensitive data or let attackers hijack program execution.

- Scalability: Keeping up with rapidly growing data sets in AI and big data applications.

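Mitigations include garbage collection (automated memory reclamation, which eliminates dangling pointers and most leaks), address space layout randomization (ASLR, which makes memory-corruption exploits harder by randomizing where code and data are placed), and non-uniform memory access (NUMA) architectures, which scale memory bandwidth in multi-processor systems.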
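Garbage collection can be illustrated with the classic mark-and-sweep algorithm, one of several tracing techniques. The Python sketch below assumes a toy heap represented as a dictionary from object ids to the ids they reference; the function name is hypothetical, and production collectors (generational, concurrent, compacting) are far more elaborate.

```python
def mark_and_sweep(heap: dict[str, list[str]], roots: list[str]) -> set[str]:
    """Tracing garbage collection over a toy object graph.

    heap:  object id -> ids of the objects it references.
    roots: ids reachable directly (globals, stack variables, registers).
    Removes unreachable objects from `heap` in place and returns their ids.
    """
    # Mark phase: depth-first traversal from the roots.
    marked: set[str] = set()
    stack = list(roots)
    while stack:
        obj = stack.pop()
        if obj in marked:
            continue
        marked.add(obj)
        stack.extend(heap.get(obj, []))
    # Sweep phase: every unmarked object is garbage and is reclaimed.
    garbage = {obj for obj in heap if obj not in marked}
    for obj in garbage:
        del heap[obj]
    return garbage

if __name__ == "__main__":
    heap = {"a": ["b"], "b": [], "c": ["d"], "d": ["c"]}  # c and d form a cycle
    print(sorted(mark_and_sweep(heap, roots=["a"])))
```

Because reachability is traced from the roots, unreachable reference cycles (objects that point only at each other) are reclaimed, which simple reference counting cannot do on its own.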

5. The Future of Memory Systems

Emerging technologies aim to revolutionize memory design:

- Persistent Memory: Blurs the line between RAM and storage by offering byte-addressable, non-volatile memory (e.g., Intel Optane, since discontinued).

- Quantum Memory: Stores quantum states, a building block for quantum communication networks and quantum computers.

- Neuromorphic Computing: Co-locates memory and computation in circuits loosely modeled on the brain's neurons and synapses, aiming for the brain's energy efficiency.

These innovations promise to address bottlenecks in speed, energy consumption, and capacity.

6. Conclusion

Memory space and address management are pivotal to computing performance. From the basic principles of addressing to advanced virtual memory systems, these concepts enable everything from simple calculators to AI-driven supercomputers. As technology evolves, so will memory architectures, ensuring they remain the unsung heroes of the digital revolution. Understanding these fundamentals empowers developers to write efficient code and engineers to design next-generation systems.