With the exponential growth of data-intensive applications in artificial intelligence, climate modeling, and real-time analytics, effective management of ultra-large memory systems has become the backbone of modern computing infrastructure. This article explores strategies and architectural innovations that are reshaping how organizations handle memory resources at petabyte scale and beyond.

The evolution of non-uniform memory access (NUMA) architectures has laid the foundation for contemporary massive memory management. Unlike traditional symmetric multiprocessing systems, where every processor sees the same memory latency, NUMA systems give each processor fast access to its local memory and slower access to memory attached to other nodes. High-speed interconnects such as Compute Express Link (CXL) extend this model by letting servers reach shared memory pools. Recent implementations reportedly demonstrate a 40% latency reduction for memory footprints exceeding 512GB, using adaptive page migration algorithms that predict workload patterns with lightweight machine learning models.
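The page migration idea can be illustrated with a much simpler heuristic than the machine learning models mentioned above. The following is a minimal sketch, not any vendor's algorithm: it counts accesses per node during a sampling window and moves a page only when a remote node clearly dominates. The type `page_stats`, the functions, and the `ratio` and `min_samples` thresholds are all hypothetical names chosen for illustration; a real system would act on the decision through kernel facilities rather than by updating a field.

```c
#include <stddef.h>
#include <stdint.h>

#define MAX_NODES 4  /* assumed node count for this sketch */

/* Hypothetical per-page bookkeeping: where the page currently lives and
 * how often each node touched it during the current sampling window. */
typedef struct {
    int home_node;
    uint32_t accesses[MAX_NODES];
} page_stats;

/* Record one access to the page from the given node. */
static void record_access(page_stats *p, int node) {
    if (node >= 0 && node < MAX_NODES) p->accesses[node]++;
}

/* Return the node the page should live on. The page moves only if some
 * remote node accessed it at least `min_samples` times and at least
 * `ratio` times as often as the home node; otherwise it stays put. */
static int pick_target_node(const page_stats *p, uint32_t ratio,
                            uint32_t min_samples) {
    int best = p->home_node;
    uint32_t home_hits = p->accesses[p->home_node];
    uint32_t best_hits = home_hits;
    for (int n = 0; n < MAX_NODES; n++) {
        if (n == p->home_node) continue;
        uint32_t hits = p->accesses[n];
        if (hits >= min_samples &&
            (uint64_t)hits >= (uint64_t)home_hits * ratio &&
            hits > best_hits) {
            best = n;
            best_hits = hits;
        }
    }
    return best;
}

/* Apply the decision and reset the counters for the next window.
 * Returns 1 if the page was (logically) migrated, 0 otherwise. */
static int rebalance(page_stats *p, uint32_t ratio, uint32_t min_samples) {
    int target = pick_target_node(p, ratio, min_samples);
    int moved = (target != p->home_node);
    p->home_node = target;
    for (int n = 0; n < MAX_NODES; n++) p->accesses[n] = 0;
    return moved;
}
```

The `ratio` threshold adds hysteresis, so a page shared roughly evenly between two nodes does not bounce back and forth; a predictive model would replace the fixed thresholds with a forecast of the next window's access pattern.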

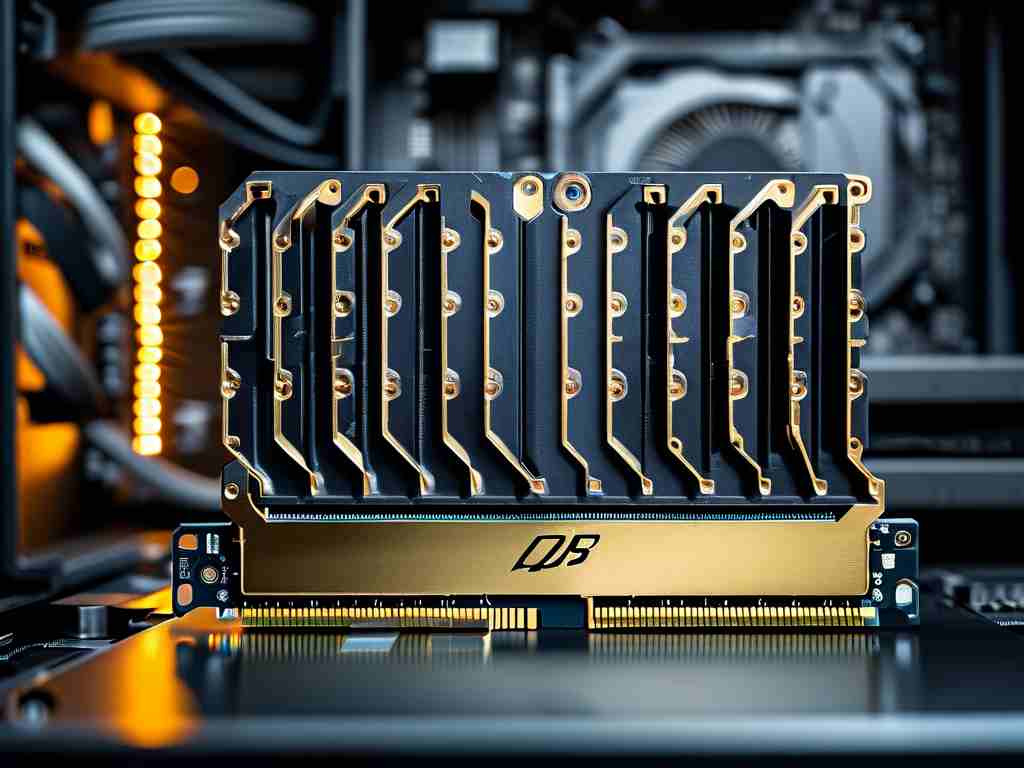

A critical breakthrough comes from hybrid memory stacking techniques combining DRAM with persistent memory modules. Intel's Optane DC Persistent Memory deployments in supercomputing clusters show 28% better throughput when managing 16TB+ memory configurations through byte-addressable access layers. This approach enables in-memory databases like SAP HANA to maintain sub-millisecond response times while processing 50 billion records without disk swapping.
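To make "byte-addressable access" concrete, the sketch below maps a file into the address space and updates a record with ordinary memory writes instead of `read`/`write` calls. It is an illustration under assumptions: it uses a regular file and `msync`, whereas on real persistent memory the file would typically sit on a DAX-mounted filesystem and a persistent memory library would handle durability. The helper names `map_region`, `store_record`, and `unmap_region` are hypothetical.

```c
#include <fcntl.h>
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Map `len` bytes of the file at `path` for load/store access, creating
 * and sizing the file if needed. Returns NULL on failure. */
static void *map_region(const char *path, size_t len) {
    int fd = open(path, O_RDWR | O_CREAT, 0600);
    if (fd < 0) return NULL;
    if (ftruncate(fd, (off_t)len) != 0) { close(fd); return NULL; }
    void *base = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    close(fd);  /* the mapping stays valid after the descriptor is closed */
    return base == MAP_FAILED ? NULL : base;
}

/* Store a record with a plain memory copy, then ask the OS to make the
 * mapping durable. Returns 0 on success, -1 on a bounds or sync error. */
static int store_record(void *base, size_t len, size_t offset,
                        const void *rec, size_t rec_len) {
    if (offset > len || rec_len > len - offset) return -1;
    memcpy((char *)base + offset, rec, rec_len);
    /* Sync the whole mapping for simplicity; msync needs a page-aligned
     * start address, and `base` from mmap satisfies that. */
    return msync(base, len, MS_SYNC);
}

/* Release the mapping created by map_region. */
static int unmap_region(void *base, size_t len) {
    return munmap(base, len);
}
```

Because the record is reached through a pointer, an update touches only the bytes it needs, which is the property that lets in-memory databases avoid block-sized I/O on this tier.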

Software-defined memory virtualization has emerged as a game-changer for cloud-native environments. VMware's Project Capitola demonstrates how disaggregated memory resources can be dynamically allocated across GPU clusters and CPU nodes through a unified management layer. Early adopters report 63% improvement in memory utilization rates when handling mixed workloads of AI training and real-time inference tasks.

The rise of photonic memory interconnects introduces new possibilities. Experimental systems using silicon photonics achieve 8Tbps memory bandwidth between processing units and 128TB memory banks, substantially easing the traditional von Neumann bottleneck. CERN's prototype for particle collision analysis leverages this technology to maintain 99.8% memory availability during petabyte-scale data processing tasks.

Memory error correction at extreme scales presents unique challenges. Advanced error-correcting code (ECC) architectures now employ three-dimensional parity checking with real-time fault mapping. Fujitsu's A64FX processors implement this across their 32GB of on-package HBM2 memory, achieving error rates below 1×10^-18 errors/bit-hour during exascale computations.
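The principle behind multi-dimensional parity can be shown with a simplified two-dimensional version: keep one parity bit per row and one per column of a bit matrix, and the intersection of the failing row and failing column pinpoints a single flipped bit. The sketch below is an illustration only, not the scheme used by any particular processor; the 8×8 block size and the names `parity2d`, `compute_parity`, and `check_and_correct` are assumptions made for the example, and it considers errors in the data bits only.

```c
#include <stdint.h>

#define ROWS 8  /* the block is 8 rows of 8 bits, one byte per row */

/* Parity stored alongside the data block. */
typedef struct {
    uint8_t row_parity;  /* bit r = parity of data row r */
    uint8_t col_parity;  /* bit c = parity of data column c */
} parity2d;

/* Parity (XOR of all bits) of a single byte. */
static unsigned parity8(uint8_t v) {
    v ^= (uint8_t)(v >> 4);
    v ^= (uint8_t)(v >> 2);
    v ^= (uint8_t)(v >> 1);
    return v & 1u;
}

/* Compute row and column parity for a data block. */
static parity2d compute_parity(const uint8_t data[ROWS]) {
    parity2d p = {0, 0};
    for (int r = 0; r < ROWS; r++) {
        p.row_parity |= (uint8_t)(parity8(data[r]) << r);
        p.col_parity ^= data[r];  /* XOR of rows gives per-column parity */
    }
    return p;
}

/* Compare the block against its stored parity.
 * Returns 0 if clean, 1 if a single-bit error was found and corrected,
 * -1 if the mismatch pattern cannot be attributed to one flipped bit. */
static int check_and_correct(uint8_t data[ROWS], parity2d stored) {
    parity2d now = compute_parity(data);
    uint8_t row_diff = now.row_parity ^ stored.row_parity;
    uint8_t col_diff = now.col_parity ^ stored.col_parity;
    if (row_diff == 0 && col_diff == 0) return 0;
    /* Correctable only if exactly one row and one column disagree. */
    if (row_diff != 0 && col_diff != 0 &&
        (row_diff & (row_diff - 1)) == 0 &&
        (col_diff & (col_diff - 1)) == 0) {
        int r = 0;
        while (((row_diff >> r) & 1u) == 0) r++;
        data[r] ^= col_diff;  /* flip the bit at (failing row, failing column) */
        return 1;
    }
    return -1;
}
```

Adding a third parity dimension follows the same pattern and lets more multi-bit error patterns be distinguished from one another, at the cost of extra check bits.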

Energy efficiency remains paramount in large-memory systems. Novel power-gating techniques using application-aware voltage regulation can reduce memory subsystem power consumption by 35% in idle states. Google's TPU v4 deployments utilize spatial memory partitioning that dynamically activates only required memory banks during tensor computations.
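Activating only the banks a computation needs comes down to mapping an address range onto a set of banks. The sketch below is a hypothetical illustration and not a description of any production design: it assumes 16 equally sized, contiguously addressed 1 GiB banks, and the names `banks_for_range` and `count_active` are invented for the example. A power controller could keep the banks in the returned mask awake and gate the rest.

```c
#include <stdint.h>

#define NUM_BANKS 16u            /* assumed bank count */
#define BANK_BYTES (1ull << 30)  /* assumed bank size: 1 GiB */

/* Return a bitmask of the banks overlapped by [start, start + len).
 * Bit b is set when bank b must stay powered. Ranges that run past the
 * end of memory are clipped; an empty or out-of-range request yields 0. */
static uint32_t banks_for_range(uint64_t start, uint64_t len) {
    uint64_t total = (uint64_t)NUM_BANKS * BANK_BYTES;
    if (len == 0 || start >= total) return 0;
    if (len > total - start) len = total - start;
    uint64_t first = start / BANK_BYTES;
    uint64_t last = (start + len - 1) / BANK_BYTES;
    uint32_t mask = 0;
    for (uint64_t b = first; b <= last; b++) mask |= (uint32_t)1 << b;
    return mask;
}

/* Count how many banks a mask keeps active. */
static unsigned count_active(uint32_t mask) {
    unsigned n = 0;
    while (mask != 0) {
        mask &= mask - 1;  /* clear the lowest set bit */
        n++;
    }
    return n;
}
```

For a tensor occupying 2 GiB starting at the 3 GiB mark, `banks_for_range` selects banks 3 and 4 only, so 14 of the 16 banks are candidates for gating during that computation.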

Looking ahead, neuromorphic memory architectures promise to revolutionize pattern recognition workloads. IBM's TrueNorth chip demonstrates the idea with 256 million programmable synapses: its digital, event-driven cores keep synaptic state next to the logic that uses it, so computation happens where the data lives and much of the traditional data movement overhead disappears.

For developers working with massive memory systems, one practical optimization strategy is NUMA-aware allocation:

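```c
// Sample code for NUMA-aware memory allocation in Linux (link with -lnuma)
#include <stddef.h>
#include <numa.h>  // numa_alloc_onnode is declared here, not in <numaif.h>

// Allocate `size` bytes on NUMA node `node`. Returns NULL on failure or
// when the system has no NUMA support. Free with numa_free(ptr, size).
void *numa_alloc_mem(size_t size, int node) {
    if (numa_available() < 0) return NULL;
    return numa_alloc_onnode(size, node);
}
```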

This code snippet illustrates node-specific memory allocation with libnuma on multi-node systems, which is crucial for minimizing cross-node memory access penalties. Memory obtained this way should be released with `numa_free` rather than `free`.

Industry benchmarks reveal that optimized memory management can deliver 22-47% performance improvements across various workloads. As the interfaces between quantum computing and classical systems evolve, hybrid memory architectures will play a pivotal role in bridging classical and quantum processing units.

The future of ultra-large memory management lies in intelligent, self-optimizing systems that integrate hardware telemetry with predictive analytics. With memory densities projected to reach 1TB per DIMM by 2026, continuous innovation in management paradigms will remain critical for unlocking the full potential of next-generation computing platforms.