Independent memory, more often called dedicated memory, plays a central role in the performance of modern computing systems. Unlike shared or integrated memory, where a device such as an integrated GPU borrows part of the system RAM, independent memory is a separate pool attached directly to a device and distinct from the RAM that the central processing unit (CPU) uses. This architecture is commonly found in graphics cards (GPUs), specialized hardware accelerators, and high-performance computing systems. But how exactly is independent memory used, and what advantages does it offer?

To begin with, independent memory is designed to serve a specific device’s workload without relying on the system’s primary RAM. For instance, a GPU with its own dedicated GDDR6 memory keeps graphical data local and processes it there, freeing the CPU and system memory for other operations. This separation reduces bottlenecks in data-intensive applications like gaming, video rendering, or machine learning. Because the memory sits next to the hardware that uses it, the device avoids repeated trips across the system bus and can draw on far more memory bandwidth than that bus provides.
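The effect of that separation can be estimated with simple arithmetic. The sketch below compares reading a working set from local device memory with pulling it across a host link. The bus width, per-pin data rate, link speed, and working-set size are illustrative assumptions, not figures for any particular product, and the result is a theoretical peak rather than a measured number.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


def transfer_time_ms(size_gb: float, bandwidth_gb_s: float) -> float:
    """Time in milliseconds to move size_gb at the given bandwidth, ignoring overheads."""
    return size_gb / bandwidth_gb_s * 1000


# Assumed example figures (hypothetical device, chosen for illustration only).
GDDR6_BUS_BITS = 256      # memory bus width in bits
GDDR6_RATE_GBPS = 14.0    # data rate per pin in Gbit/s
HOST_LINK_GB_S = 32.0     # roughly a PCIe 4.0 x16 link in one direction
WORKING_SET_GB = 2.0      # data the device needs to read

local_bw = peak_bandwidth_gb_s(GDDR6_BUS_BITS, GDDR6_RATE_GBPS)
print(f"local memory: {local_bw:.0f} GB/s, "
      f"{transfer_time_ms(WORKING_SET_GB, local_bw):.2f} ms")
print(f"host link:    {HOST_LINK_GB_S:.0f} GB/s, "
      f"{transfer_time_ms(WORKING_SET_GB, HOST_LINK_GB_S):.2f} ms")
```

Under these assumptions the local pool delivers 448 GB/s, so the 2 GB working set takes about 4.5 ms to read locally versus 62.5 ms over the host link.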

One practical example of independent memory usage is in gaming. Modern games require rapid access to high-resolution textures and 3D models. A GPU with independent memory stores these assets locally, allowing for faster rendering compared to fetching data from the system’s RAM. This setup not only improves frame rates but also enhances visual fidelity. Similarly, in scientific computing, accelerators like FPGAs or TPUs use independent memory to manage large datasets for simulations or neural network training, ensuring efficient parallel processing.
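To get a feel for why texture storage drives memory requirements, the following sketch estimates the footprint of a single texture together with its mipmap chain. It assumes an uncompressed format with 4 bytes per pixel (RGBA8); games typically use compressed formats, so real footprints are usually smaller. The function name is hypothetical.

```python
def mip_chain_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Bytes for a texture plus its full mip chain, halving each level down to 1x1."""
    total = 0
    while True:
        total += width * height * bytes_per_pixel
        if width == 1 and height == 1:
            break
        # Each mip level halves both dimensions, never going below one pixel.
        width = max(1, width // 2)
        height = max(1, height // 2)
    return total


MIB = 1024 ** 2
base = 4096 * 4096 * 4                 # top level only, assumed RGBA8
with_mips = mip_chain_bytes(4096, 4096)
print(f"base level: {base / MIB:.1f} MiB, with mips: {with_mips / MIB:.1f} MiB")
```

A 4096×4096 texture in this format occupies 64 MiB at its top level and roughly a third more with mipmaps, so a scene with a few hundred such textures would already need tens of gigabytes without compression.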

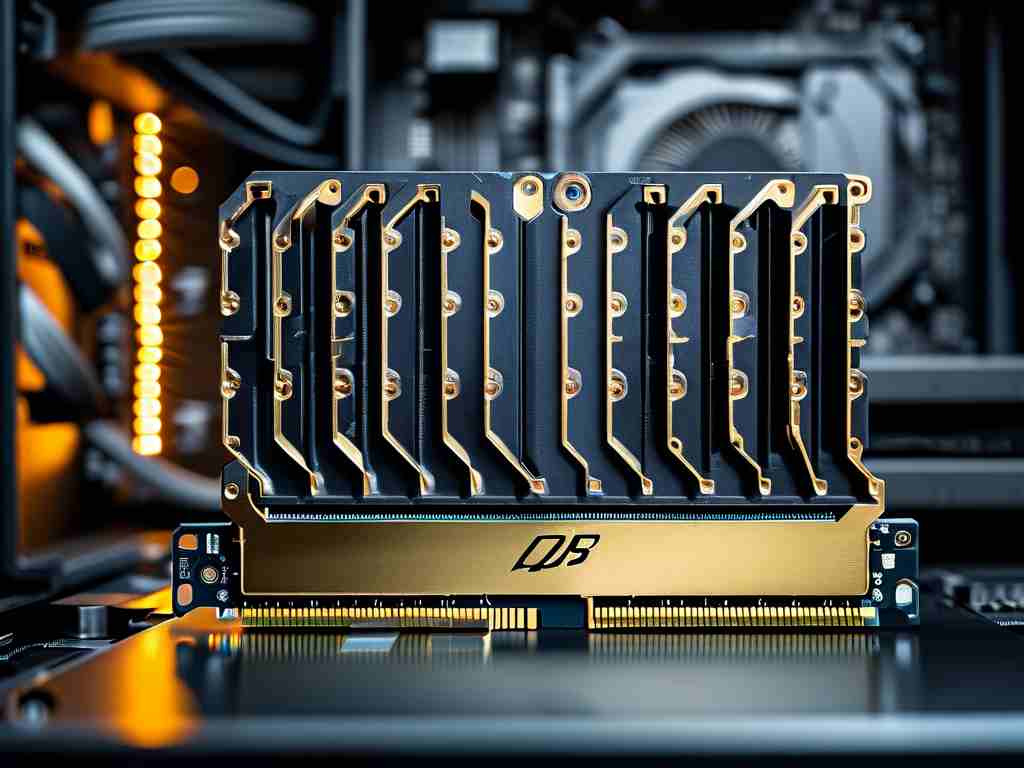

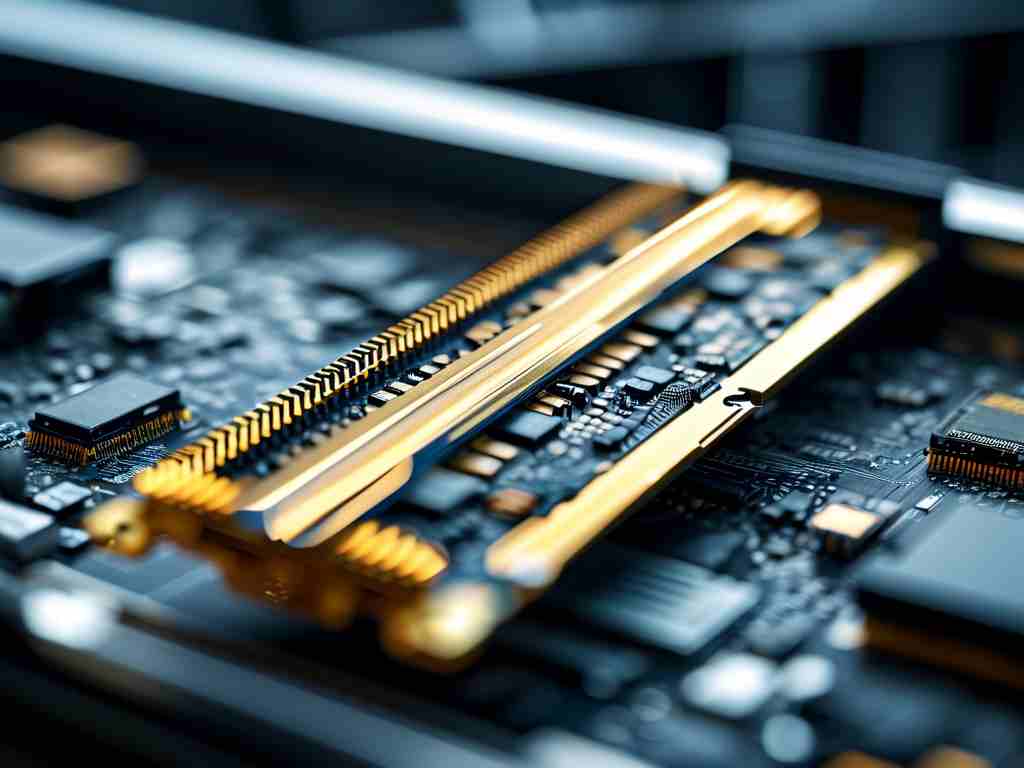

Implementing independent memory involves both hardware and software considerations. On the hardware side, devices must be equipped with suitable memory, such as GDDR-based VRAM for GPUs or HBM for AI chips. This memory is typically soldered onto the device’s circuit board or, in the case of HBM, packaged alongside the processor, so it is generally not user-replaceable. Software drivers then handle communication between the device and the operating system, managing memory allocation and synchronization. For developers, optimizing code to take advantage of independent memory often involves APIs such as CUDA for NVIDIA GPUs or DirectML for machine learning on DirectX 12 hardware.
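The workflow that such APIs expose usually follows the same pattern: allocate a buffer in device memory, copy input from the host, run the computation on the device, copy the result back, and free the buffer. The sketch below is a pure-Python toy model of that pattern. `DeviceMemory` and all of its methods are hypothetical names invented for illustration; they are not part of CUDA, DirectML, or any real driver API.

```python
class DeviceMemory:
    """Toy model of a device's dedicated memory: a fixed-capacity pool (hypothetical)."""

    def __init__(self, capacity_bytes):
        self.capacity = capacity_bytes
        self.used = 0
        self._buffers = {}       # handle -> bytearray standing in for device storage
        self._next_handle = 1

    def alloc(self, size):
        """Reserve size bytes on the device; fail if the pool is exhausted."""
        if self.used + size > self.capacity:
            raise MemoryError(
                f"out of device memory: need {size}, free {self.capacity - self.used}")
        handle = self._next_handle
        self._next_handle += 1
        self._buffers[handle] = bytearray(size)
        self.used += size
        return handle

    def copy_to_device(self, handle, data):
        """Copy host bytes into a device buffer."""
        buf = self._buffers[handle]
        if len(data) > len(buf):
            raise ValueError("data larger than device buffer")
        buf[:len(data)] = data

    def launch(self, handle, kernel):
        """Apply kernel to every byte in place, standing in for a device computation."""
        buf = self._buffers[handle]
        for i, value in enumerate(buf):
            buf[i] = kernel(value) & 0xFF

    def copy_to_host(self, handle):
        """Copy a device buffer back to host memory."""
        return bytes(self._buffers[handle])

    def free(self, handle):
        """Release a device buffer and return its bytes to the pool."""
        self.used -= len(self._buffers.pop(handle))


device = DeviceMemory(capacity_bytes=1024)
h = device.alloc(4)                           # 1. allocate on the device
device.copy_to_device(h, bytes([1, 2, 3, 4])) # 2. host -> device
device.launch(h, lambda v: v * 2)             # 3. compute in device memory
result = device.copy_to_host(h)               # 4. device -> host
device.free(h)                                # 5. release
print(list(result), device.used)
```

The point of the model is that capacity is finite and every transfer is explicit, which is why real code tends to keep data resident on the device across as many operations as possible.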

A key advantage of independent memory is scalability: capacity can be chosen to match the workload. Because the memory is usually fixed to the device, scaling up generally means moving to higher-capacity hardware or adding more devices rather than adding modules. For example, moving from a GPU with 8GB of VRAM to one with 16GB allows it to handle larger textures or higher-resolution outputs. This flexibility is crucial for industries like film production or data analytics, where requirements evolve rapidly.
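Whether a given capacity is enough comes down to comparing the working set against the usable pool. A minimal sketch, assuming a hypothetical 10 GiB working set and 1 GiB reserved for the driver and display (both numbers are made up for illustration):

```python
GIB = 1024 ** 3


def fits_in_device_memory(working_set_bytes, capacity_bytes, reserved_bytes=0):
    """True if the working set fits in the capacity left after reserved memory."""
    return working_set_bytes <= capacity_bytes - reserved_bytes


working_set = 10 * GIB   # assumed size of assets and buffers
reserved = 1 * GIB       # assumed overhead outside the application's control

for capacity_gib in (8, 16):
    ok = fits_in_device_memory(working_set, capacity_gib * GIB, reserved)
    print(f"{capacity_gib} GiB device: {'fits' if ok else 'does not fit'}")
```

When the working set does not fit, the excess has to be streamed from system memory on demand, which reintroduces the bus transfers that dedicated memory is meant to avoid.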

However, independent memory is not without challenges. Cost is a significant factor, as dedicated memory hardware tends to be more expensive than shared alternatives. Additionally, thermal management becomes critical, as high-speed memory modules generate substantial heat. Engineers must balance performance gains with power consumption and cooling solutions.

Looking ahead, advancements in memory technology are pushing the boundaries of what independent memory can achieve. These include newer GDDR and HBM generations for dedicated devices, DDR5 and LPDDR5X on the system side, and emerging non-volatile solutions. Such innovations promise faster data transfer rates, lower latency, and greater energy efficiency, further cementing independent memory’s role in next-generation computing.

In summary, independent memory is a cornerstone of high-performance computing, enabling specialized hardware to operate efficiently and effectively. By understanding its applications and optimizing its use, developers and users alike can unlock new levels of speed and capability in their systems.