Memory classification plays a central role in optimizing system performance and resource management. Modern computing architectures organize memory hierarchically, with each type balancing speed, capacity, and cost for a specific function. This article covers the principles of memory classification, its impact on computational efficiency, and the emerging trends that are reshaping how systems store and retrieve data.

At its core, memory classification refers to the systematic categorization of storage components based on their operational characteristics. The primary divisions include volatile and non-volatile memory. Volatile memory, such as Random Access Memory (RAM), temporarily holds data during active computations but loses its contents when power is interrupted. In contrast, non-volatile memory—like Read-Only Memory (ROM) or flash storage—retains information even without electrical supply. This dichotomy ensures that critical system instructions remain intact while enabling rapid data manipulation during runtime.

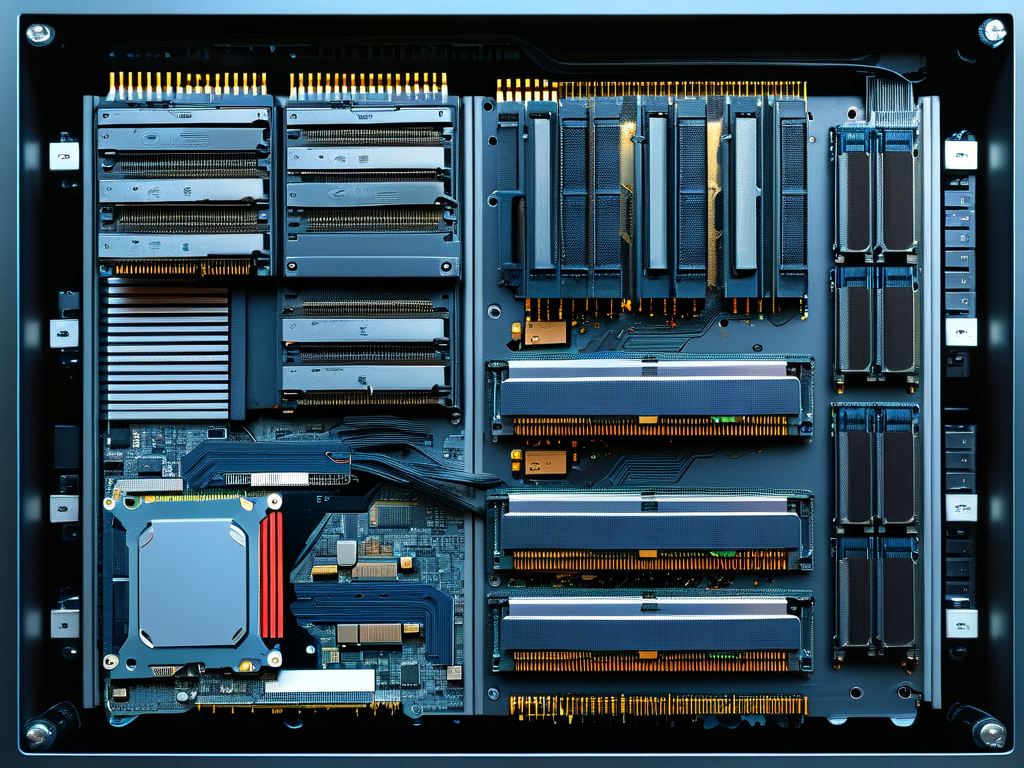

A deeper layer of classification distinguishes cache memory, primary memory, and secondary storage. Cache memory, built into the processor, operates at near-CPU speeds to minimize latency for frequently accessed data. Primary memory (e.g., RAM) acts as the workspace for active applications, while secondary storage (hard drives, SSDs) provides long-term data persistence. Modern systems use prefetching to predict which data will be needed next and load it into faster tiers ahead of time. Large shared caches, such as Intel’s Smart Cache or AMD’s Infinity Cache, raise the chance that a request is served without reaching slower memory.
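The benefit of a tiered hierarchy can be estimated with the average memory access time. The sketch below is a minimal illustration, not a model of any real processor: the `MemoryLevel` struct, the function name, and every latency or hit rate passed to it are assumptions chosen for the example.

```cpp
#include <cassert>
#include <vector>

// One level of a memory hierarchy. Values supplied by callers are
// illustrative assumptions, not measurements of real hardware.
struct MemoryLevel {
    double latencyNs;  // time to access this level
    double hitRate;    // fraction of requests reaching this level that it satisfies
};

// Average memory access time: every request that reaches a level pays that
// level's latency, and the fraction that misses continues to the next level.
double averageAccessTimeNs(const std::vector<MemoryLevel>& levels) {
    double total = 0.0;
    double reachProbability = 1.0;  // chance that a request gets this far
    for (const MemoryLevel& level : levels) {
        assert(level.hitRate >= 0.0 && level.hitRate <= 1.0);
        total += reachProbability * level.latencyNs;
        reachProbability *= (1.0 - level.hitRate);
    }
    return total;
}
```

With an assumed 1 ns cache that hits 90% of the time in front of 100 ns RAM, `averageAccessTimeNs({{1.0, 0.9}, {100.0, 1.0}})` comes to roughly 11 ns, far closer to the cache latency than to the RAM latency.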

The evolution of memory technologies has introduced hybrid models. For instance, Non-Volatile RAM (NVRAM) blends the speed of traditional RAM with the permanence of storage devices. Emerging standards like Compute Express Link (CXL) further blur these boundaries by enabling coherent memory sharing across CPUs, GPUs, and accelerators. Such advancements challenge conventional classification frameworks, necessitating adaptive system designs.

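Memory hierarchy also influences software development. Programmers must consider memory locality, the tendency of applications to access the same or neighboring data repeatedly, to optimize performance. Techniques like buffer pooling or memory-mapped files build on these principles to reduce I/O bottlenecks. Below is a simplified C++ snippet that keeps the data for a critical task in a small contiguous buffer, which lets it stay resident in cache:

```cpp
#include <iostream>
#include <vector>

void processHighPriorityData() {
    // std::vector allocates from the ordinary heap; it cannot request a faster
    // memory tier. A small contiguous buffer (1024 ints, about 4 KiB) fits in
    // a typical L1 data cache, so repeated accesses stay fast.
    std::vector<int> buffer(1024, 0);

    // ... processing logic ...
    std::cout << "Buffer holds " << buffer.size() << " elements\n";
}

int main() {
    processHighPriorityData();
}
```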
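Memory locality can be made concrete by comparing two traversal orders over the same data. The following is a minimal sketch under stated assumptions: the `Matrix` type and both function names are hypothetical, and the matrix is assumed to be stored row by row in one contiguous vector. Both functions compute the same sum; only the order of memory accesses differs.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A rows x cols matrix stored row by row in one contiguous vector.
struct Matrix {
    std::size_t rows;
    std::size_t cols;
    std::vector<int> data;

    Matrix(std::size_t r, std::size_t c, int fill)
        : rows(r), cols(c), data(r * c, fill) {}

    int at(std::size_t r, std::size_t c) const {
        assert(r < rows && c < cols);
        return data[r * cols + c];
    }
};

// Visits elements in the order they sit in memory (stride of one int),
// so each fetched cache line is fully used before moving on.
long long sumRowMajor(const Matrix& m) {
    long long total = 0;
    for (std::size_t r = 0; r < m.rows; ++r)
        for (std::size_t c = 0; c < m.cols; ++c)
            total += m.at(r, c);
    return total;
}

// Visits the same elements but jumps `cols` ints between consecutive
// accesses, which tends to waste most of each fetched cache line.
long long sumColumnMajor(const Matrix& m) {
    long long total = 0;
    for (std::size_t c = 0; c < m.cols; ++c)
        for (std::size_t r = 0; r < m.rows; ++r)
            total += m.at(r, c);
    return total;
}
```

On large matrices the row-major version is usually noticeably faster, although the size of the gap depends on the cache sizes and the compiler, so it is worth measuring on the target machine.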

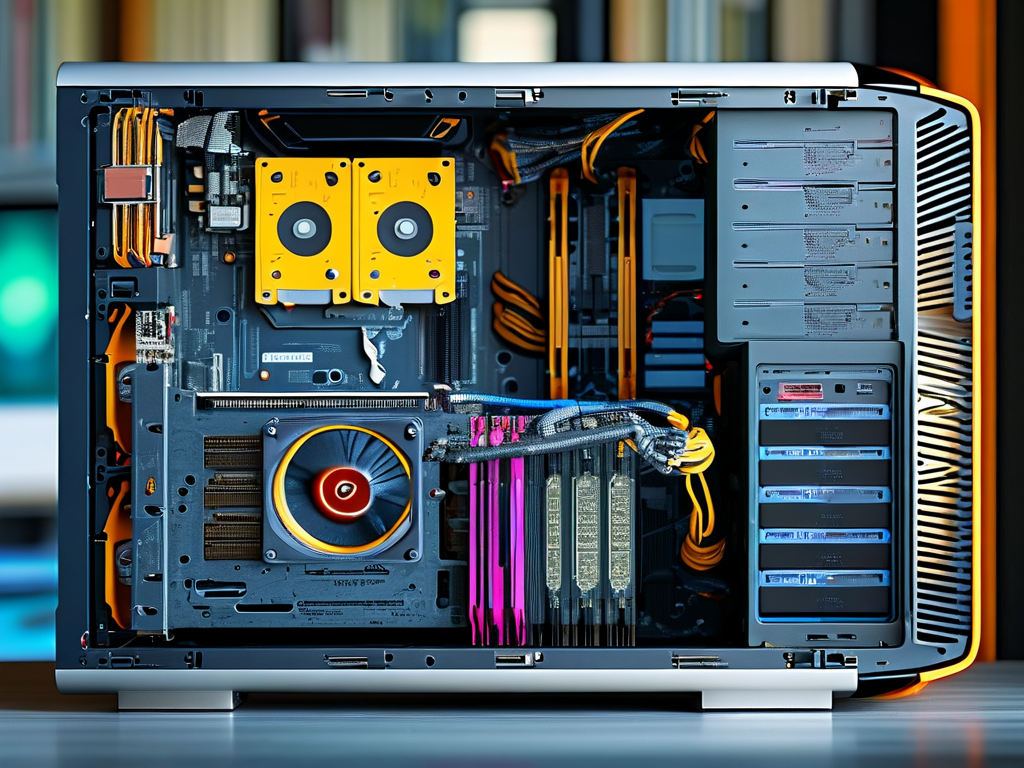

Industry applications show why these distinctions matter. In high-frequency trading systems, nanosecond-level access to cache memory can determine profit margins. Meanwhile, embedded systems in IoT devices prioritize non-volatile memory so that state survives power fluctuations. Recent research focuses on processing-in-memory (PIM) architectures, where computation occurs inside or next to the memory arrays to reduce data transfer delays. Samsung’s HBM-PIM chips are one example of this shift.

Despite these innovations, challenges persist. The von Neumann bottleneck, the limit on data throughput between processor and memory, remains a critical concern. Solutions like 3D-stacked memory (e.g., HBM2E) stack DRAM dies vertically and place them in the same package as the processor, which shortens the data path, widens the interface, and significantly boosts bandwidth. Additionally, quantum computing introduces radical memory concepts, such as qubit-based storage, though practical implementations remain years away.
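A rough calculation shows why a wider interface eases the bottleneck. The sketch below uses hypothetical helper names, and the figures mentioned afterwards are assumed nominal values rather than measurements, since real products vary.

```cpp
#include <cassert>

// Peak bandwidth in GB/s for an interface that is `busWidthBits` wide and
// transfers `gigabitsPerSecondPerPin` on each data pin.
double peakBandwidthGBps(int busWidthBits, double gigabitsPerSecondPerPin) {
    assert(busWidthBits > 0 && gigabitsPerSecondPerPin > 0.0);
    return busWidthBits * gigabitsPerSecondPerPin / 8.0;  // 8 bits per byte
}

// Lower bound on the time spent just moving `gigabytes` of data,
// ignoring latency, contention, and protocol overhead.
double minTransferSeconds(double gigabytes, double bandwidthGBps) {
    assert(bandwidthGBps > 0.0);
    return gigabytes / bandwidthGBps;
}
```

Assuming 3.2 Gb/s per pin in both cases, a conventional 64-bit memory channel peaks at about 25.6 GB/s, while a 1024-bit stacked interface peaks at about 409.6 GB/s. The per-pin rate is the same; the gain comes from the sixteen-fold wider interface that stacking makes practical.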

Environmental factors also shape memory classification strategies. Energy-efficient designs now favor low-power DDR (LPDDR) memory in mobile devices, while data centers adopt storage-class memory (SCM) to balance performance with energy consumption. These trends underscore the interplay between technological capability and sustainability imperatives.

In conclusion, memory classification serves as the backbone of modern computing, enabling systems to balance speed, capacity, and reliability. As architectures evolve toward heterogeneous and distributed models, understanding these classifications becomes essential for developers, engineers, and policymakers alike. Future advancements will likely redefine existing categories, but the fundamental goal of efficient data management will endure as a cornerstone of computational progress.