Memory is central to every computation: it lets a device store and retrieve data quickly enough to keep the processor busy. But how exactly do computer systems define and manage memory internally? This article covers the technical and conceptual frameworks behind memory allocation, addressing mechanisms, and the role of memory in modern computing architectures.

The Concept of Memory in Computing

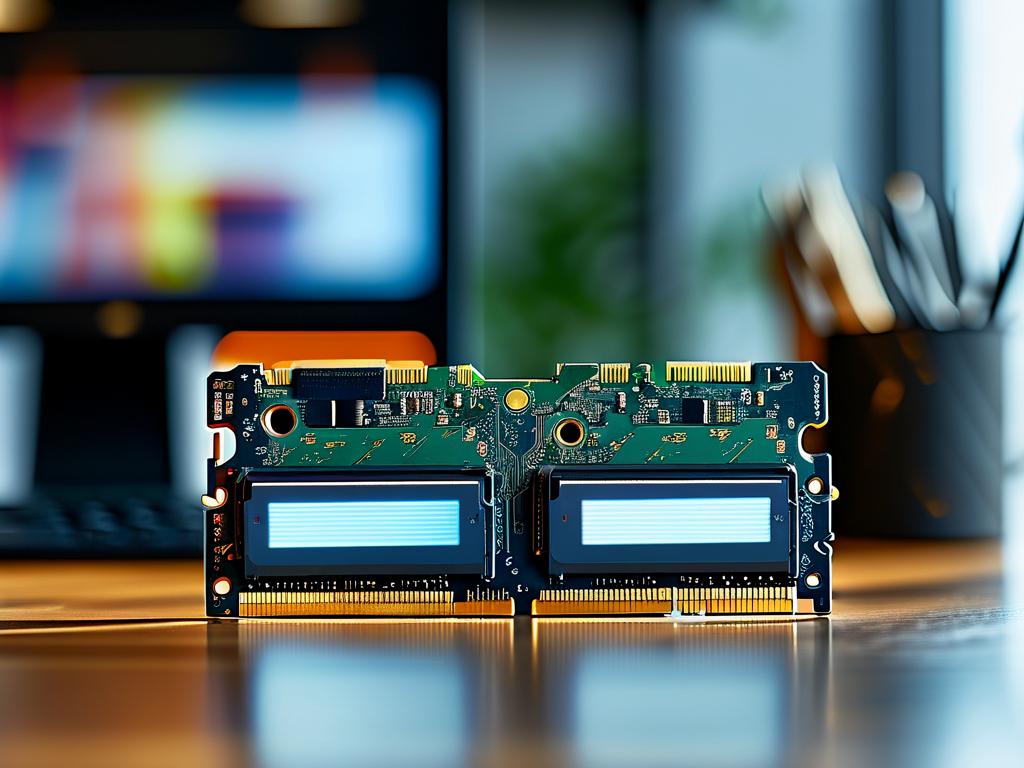

Memory, in its simplest form, refers to the electronic components within a computer that retain digital information. Unlike storage devices such as hard drives or SSDs, which hold data long-term, main memory operates at high speeds so the processor can work on data directly. Internal memory is typically categorized into two types: volatile (e.g., RAM) and non-volatile (e.g., ROM). Volatile memory loses its data when power is disconnected, while non-volatile memory retains its contents without power.

Physical vs. Virtual Memory

A critical distinction lies between physical and virtual memory. Physical memory consists of actual hardware components like RAM chips, which interact directly with the CPU. Virtual memory, on the other hand, is an abstraction maintained by the operating system with hardware support. It gives each process its own address space and allows the system to use secondary storage (e.g., a hard drive) as an extension of physical memory. This enables computers to run applications whose combined memory needs exceed the physical RAM. For instance, when RAM becomes full, the operating system moves less recently used pages to a designated space on the storage drive (a swap partition or page file), a process known as paging or swapping.
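The eviction step can be illustrated with a small simulation. This is only a sketch: it assumes a least-recently-used (LRU) replacement policy, which real operating systems approximate rather than implement exactly, and the function name `count_page_faults` and the sample reference string are made up for illustration.

```python
from collections import OrderedDict


def count_page_faults(reference_string, num_frames):
    """Simulate demand paging with LRU replacement and count page faults."""
    # Keys are the page numbers currently resident in RAM, ordered from
    # least recently used (front) to most recently used (back).
    frames = OrderedDict()
    faults = 0
    for page in reference_string:
        if page in frames:
            frames.move_to_end(page)        # hit: mark as most recently used
        else:
            faults += 1                     # miss: page must be brought in
            if len(frames) >= num_frames:
                frames.popitem(last=False)  # evict the least recently used page
            frames[page] = None
    return faults


refs = [1, 2, 3, 1, 4, 2, 5, 1]
print(count_page_faults(refs, num_frames=3))  # 7
print(count_page_faults(refs, num_frames=5))  # 5
```

With only three frames, the same sequence of accesses causes more faults than with five, which is the cost of running a working set larger than physical memory.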

Memory Addressing and Allocation

To access data, computers rely on memory addresses: unique identifiers that, on byte-addressable machines, are assigned to each byte in memory. These addresses are managed through a combination of hardware and software mechanisms. The memory management unit (MMU), which in modern processors is typically integrated into the CPU, translates logical addresses (used by software) into physical addresses (used by hardware). This translation ensures that applications operate within their allocated memory spaces without interfering with other processes.
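A minimal sketch of that translation, assuming 4 KiB pages and a single-level page table, looks like the following. The table contents and the `translate` helper are hypothetical; a real MMU does this in hardware, usually with multi-level tables and a translation cache.

```python
PAGE_SIZE = 4096   # assumed page size: 4 KiB
OFFSET_BITS = 12   # log2(PAGE_SIZE)

# Hypothetical page table: virtual page number -> physical frame number.
page_table = {0: 5, 1: 2, 2: 7}


def translate(virtual_address, table):
    """Split a virtual address into page number and offset, then map the page."""
    vpn = virtual_address >> OFFSET_BITS         # high bits select the page
    offset = virtual_address & (PAGE_SIZE - 1)   # low bits are kept unchanged
    if vpn not in table:
        # On real hardware this would trap to the operating system.
        raise LookupError(f"page fault: virtual page {vpn} is not mapped")
    return (table[vpn] << OFFSET_BITS) | offset


print(hex(translate(0x1ABC, page_table)))  # 0x2abc
```

Only the page number is remapped; the offset within the page passes through untouched, which is why translation can be done per page rather than per byte.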

Memory allocation strategies vary depending on the system’s requirements. Static allocation fixes the size and location of memory blocks at compile time, and the storage exists for the entire run of the program; it suits data whose size and lifetime are known in advance. Dynamic allocation, performed through functions like malloc() in C or the new operator in C++, assigns memory at runtime, offering flexibility but requiring careful oversight to prevent leaks or fragmentation.
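To make the fragmentation risk concrete, here is a toy first-fit allocator over a simulated 64-byte heap. It is an illustrative sketch, not how any particular malloc() is implemented; the `FirstFitHeap` class and its method names are invented for this example.

```python
class FirstFitHeap:
    """Toy heap: a sorted list of (start, size) free blocks, allocated first-fit."""

    def __init__(self, size):
        self.free_blocks = [(0, size)]
        self.allocated = {}  # start address -> size

    def malloc(self, size):
        """Return the start address of a new block, or None if nothing fits."""
        for i, (start, block_size) in enumerate(self.free_blocks):
            if block_size >= size:
                remainder = block_size - size
                if remainder:
                    self.free_blocks[i] = (start + size, remainder)
                else:
                    del self.free_blocks[i]
                self.allocated[start] = size
                return start
        return None  # no single free block is large enough

    def free(self, address):
        """Return a block to the free list and merge it with adjacent free blocks."""
        size = self.allocated.pop(address)
        self.free_blocks.append((address, size))
        self.free_blocks.sort()
        merged = []
        for start, block_size in self.free_blocks:
            if merged and merged[-1][0] + merged[-1][1] == start:
                merged[-1] = (merged[-1][0], merged[-1][1] + block_size)
            else:
                merged.append((start, block_size))
        self.free_blocks = merged


heap = FirstFitHeap(64)
a, b, c, d = (heap.malloc(16) for _ in range(4))
heap.free(a)
heap.free(c)
print(heap.free_blocks)  # [(0, 16), (32, 16)]
print(heap.malloc(32))   # None: 32 bytes are free, but not contiguously
heap.free(b)
print(heap.malloc(32))   # 0: freeing b merged the holes into one 48-byte block
```

The second-to-last request fails even though enough total memory is free, which is exactly the external fragmentation that dynamic allocation has to guard against.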

The Role of Memory Hierarchies

Modern systems employ a memory hierarchy to balance speed, capacity, and cost. At the top are small, ultra-fast registers within the CPU, followed by cache memory (L1, L2, L3), RAM, and finally secondary storage. Each layer trades speed for capacity, ensuring that frequently accessed data remains readily available. For example, cache memory stores copies of frequently used RAM locations, reducing latency when the CPU retrieves data.
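The effect of caching on access patterns can be sketched with a simulated direct-mapped cache. The geometry here (4 lines of 16 bytes) is an arbitrary assumption chosen to keep the numbers small, and `cache_hit_rate` is a hypothetical helper; real caches are larger and usually set-associative.

```python
def cache_hit_rate(addresses, num_lines=4, line_size=16):
    """Simulate a direct-mapped cache and return the fraction of accesses that hit."""
    tags = [None] * num_lines  # tag of the memory block held by each cache line
    hits = 0
    for address in addresses:
        block = address // line_size   # which memory block the byte belongs to
        index = block % num_lines      # the single line this block may occupy
        tag = block // num_lines       # distinguishes blocks sharing that line
        if tags[index] == tag:
            hits += 1
        else:
            tags[index] = tag          # miss: load the block, replacing the old one
    return hits / len(addresses)


sequential = list(range(64))                 # bytes 0..63 in order
conflicting = [0, 64, 0, 64, 0, 64, 0, 64]   # two blocks that map to the same line
print(cache_hit_rate(sequential))   # 0.9375
print(cache_hit_rate(conflicting))  # 0.0
```

Sequential access misses once per line and then hits on the remaining 15 bytes, while the alternating pattern evicts its own data on every access, so the same cache can look excellent or useless depending on how memory is touched.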

Challenges in Memory Management

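Efficient memory management is fraught with challenges. Fragmentation, where free memory becomes divided into small, non-contiguous blocks, can degrade performance over time and cause large allocations to fail even when enough total memory is free. Memory leaks, caused by programs failing to release memory they no longer need, gradually consume available resources. To mitigate these issues, garbage collectors (e.g., in Java or Python) automatically reclaim memory that is no longer reachable, while compacting collectors and allocator designs such as size-segregated free lists reduce fragmentation. Disk defragmentation tools address the analogous problem for files on secondary storage, not for RAM.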
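As a small illustration of automatic reclamation, the sketch below builds a reference cycle in Python and shows that it is freed only once the cycle collector runs. The behavior described is that of CPython, which combines reference counting with a cycle detector; the `Node` class and `cycle_survives_until_collected` function are hypothetical names for this example.

```python
import gc
import weakref


class Node:
    def __init__(self):
        self.partner = None


def cycle_survives_until_collected():
    """Return (alive_before_collect, alive_after_collect) for a reference cycle."""
    was_enabled = gc.isenabled()
    gc.disable()                      # keep the automatic collector out of the demo
    try:
        a, b = Node(), Node()
        a.partner, b.partner = b, a   # reference cycle: a <-> b
        probe = weakref.ref(a)        # observes a without keeping it alive
        del a, b                      # the cycle is now unreachable from the program
        alive_before = probe() is not None
        gc.collect()                  # cycle detector frees the unreachable pair
        alive_after = probe() is not None
        return alive_before, alive_after
    finally:
        if was_enabled:
            gc.enable()


print(cycle_survives_until_collected())  # (True, False)
```

Without the collector, the two objects would keep each other alive indefinitely, which is a leak in the sense described above.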

Case Study: Memory in Embedded Systems

In embedded systems, where resources are constrained, memory has to be planned far more carefully. Microcontrollers often combine RAM, ROM, and flash memory on a single chip, each with only kilobytes to a few megabytes of capacity. Developers must optimize memory usage to avoid stack or buffer overflows, which could crash the system. Techniques like memory pooling, which pre-allocates a set of fixed-size blocks, help ensure predictable behavior in real-time applications.
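The idea behind memory pooling can be sketched as follows. Embedded code would normally do this in C over a static array; the version here is a Python model of the same bookkeeping, and `BlockPool`, `acquire`, and `release` are names invented for illustration.

```python
class BlockPool:
    """Fixed-size block pool carved out of one buffer that is reserved up front."""

    def __init__(self, block_size, block_count):
        self.block_size = block_size
        self.buffer = bytearray(block_size * block_count)  # allocated exactly once
        # Stack of free block indices; block 0 is handed out first.
        self.free_indices = list(range(block_count - 1, -1, -1))

    def acquire(self):
        """Return (index, writable view) of a free block, or None if exhausted."""
        if not self.free_indices:
            return None
        index = self.free_indices.pop()
        start = index * self.block_size
        return index, memoryview(self.buffer)[start:start + self.block_size]

    def release(self, index):
        """Hand a block back to the pool so it can be reused."""
        self.free_indices.append(index)


pool = BlockPool(block_size=32, block_count=2)
first = pool.acquire()
second = pool.acquire()
print(pool.acquire())     # None: the pool never grows, so exhaustion is explicit
pool.release(first[0])
print(pool.acquire()[0])  # 0: the released block is reused
```

Because every block has the same size, acquiring and releasing are constant-time stack operations and the pool cannot fragment, which is what makes its timing predictable.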

The Future of Memory Technology

Emerging technologies promise to reshape how internal systems define and utilize memory. Non-volatile RAM (NVRAM), such as Intel’s Optane persistent memory, blurs the line between memory and storage by offering persistence at near-RAM speeds, although Intel announced in 2022 that it was winding down its Optane business. Quantum computing introduces entirely new paradigms, where qubits replace binary bits, though practical large-scale implementations remain years away.

Memory is not merely a storage space but a dynamic resource that dictates a system’s capabilities. From addressing schemes to hierarchical designs, its definition evolves alongside advancements in hardware and software. Understanding these principles is essential for developers, engineers, and enthusiasts aiming to optimize performance or innovate within the computing landscape.