The complexity of distributed database architecture remains a pivotal topic in modern computing infrastructure. As organizations scale their data operations, understanding the intricate balance between performance optimization and architectural manageability becomes critical. This article examines core challenges while providing practical insights for technical decision-makers.

At their foundation, distributed database systems partition data across multiple nodes to achieve horizontal scalability. This partitioning introduces inherent complexity through mechanisms like sharding, replication protocols, and consensus algorithms. A typical implementation might coordinate read/write operations across geographically dispersed servers while maintaining ACID properties, a task that requires sophisticated synchronization techniques.

Consider this simplified code representation of a sharding strategy:

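    class ShardManager:
        """Routes each request to the node that owns the key's shard."""

        def __init__(self, nodes):
            # consistent_hash is assumed to build a hash ring over the nodes;
            # it is not defined in this snippet.
            self.node_ring = consistent_hash(nodes)

        def route_request(self, key):
            # Look up the owning node on the ring, then delegate to it.
            target_node = self.node_ring.get_node(key)
            return target_node.process(key)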

While this pseudocode illustrates the basic routing logic, real-world implementations must also handle node failures, network partitions, and dynamic cluster reconfiguration, all of which substantially increase system complexity.
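As a rough illustration of what the ring behind a helper like `consistent_hash` might look like, here is a minimal sketch of a consistent-hash ring with virtual nodes. The names `HashRing`, `_ring_position`, and the `vnodes` parameter are assumptions made for this example and do not come from any particular database. Real systems layer replication, membership protocols, and data migration on top of this.

```python
import bisect
import hashlib


def _ring_position(value: str) -> int:
    """Map a string to a stable 64-bit position on the ring."""
    digest = hashlib.sha256(value.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class HashRing:
    """Illustrative consistent-hash ring (hypothetical, not a library API)."""

    def __init__(self, nodes, vnodes=64):
        self.vnodes = vnodes      # virtual nodes per physical node
        self._positions = []      # sorted ring positions
        self._owners = {}         # ring position -> node name
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        # Each physical node is placed on the ring at several positions
        # so that keys spread more evenly.
        for i in range(self.vnodes):
            pos = _ring_position(f"{node}#{i}")
            self._owners[pos] = node
            bisect.insort(self._positions, pos)

    def remove_node(self, node):
        # Drop every ring position owned by the departing node.
        self._positions = [p for p in self._positions if self._owners[p] != node]
        self._owners = {p: n for p, n in self._owners.items() if n != node}

    def get_node(self, key):
        if not self._positions:
            raise LookupError("ring has no nodes")
        # First position clockwise from the key's hash, wrapping around.
        idx = bisect.bisect_right(self._positions, _ring_position(key))
        return self._owners[self._positions[idx % len(self._positions)]]


ring = HashRing(["node-a", "node-b", "node-c"])
owner = ring.get_node("user:42")  # one of the three node names
```

The property that matters for cluster reconfiguration is that removing a node only remaps the keys that node owned; keys on the surviving nodes stay where they were.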

Three primary dimensions contribute to architectural intricacy:

- Consistency Models: The CAP theorem states that when a network partition occurs, a system must give up either consistency or availability. Systems that prioritize strong consistency (like Google Spanner) rely on complex timestamp-based synchronization, while eventually consistent systems (as seen in Cassandra) shift complexity to application-layer conflict resolution.

- Failure Handling: Distributed systems require robust failure detection and recovery mechanisms. Consensus protocols such as Raft and Paxos show how coordination cost grows with cluster size, since each committed decision needs acknowledgment from a majority of nodes. A 2023 study by the Distributed Systems Research Group revealed that error handling code constitutes 38% of typical distributed database codebases.

- Query Optimization: Cross-node query execution demands advanced cost-based optimizers. Distributed joins and aggregations require query plans that minimize data transfer between nodes. PostgreSQL's foreign data wrapper extension (postgres_fdw) illustrates this challenge: its planner must decide which parts of a query can be pushed down to remote servers.
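The consistency tradeoff can be made concrete with quorum replication, a technique used by Dynamo-style stores and exposed in Cassandra through tunable consistency levels. With N replicas, a read quorum of size R and a write quorum of size W are guaranteed to share at least one replica exactly when R + W > N. The sketch below checks that rule against brute-force enumeration; the function names are chosen for this example only, and quorum overlap is one ingredient of strong consistency rather than a complete guarantee.

```python
from itertools import combinations


def quorums_overlap(n: int, r: int, w: int) -> bool:
    """Arithmetic rule: every read quorum meets every write quorum."""
    return r + w > n


def overlap_by_enumeration(n: int, r: int, w: int) -> bool:
    """Check the same property by testing every pair of quorums."""
    replicas = range(n)
    return all(
        set(reads) & set(writes)  # non-empty intersection is truthy
        for reads in combinations(replicas, r)
        for writes in combinations(replicas, w)
    )


# With 3 replicas, reading 2 and writing 2 always overlaps;
# reading 1 and writing 1 can miss the latest write.
strong = quorums_overlap(3, 2, 2)
weak = quorums_overlap(3, 1, 1)
```

Lowering R or W reduces latency and improves availability, at the cost of reads that may not see the most recent write.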

The operational complexity manifests differently across deployment models. Cloud-managed services (Amazon Aurora, Azure Cosmos DB) abstract underlying complexities through automated scaling and maintenance, while self-hosted deployments (for example, CockroachDB or YugabyteDB clusters) demand deeper infrastructure expertise. A financial institution's migration case study showed a 72% reduction in operational overhead after adopting managed services in place of self-hosted clusters.

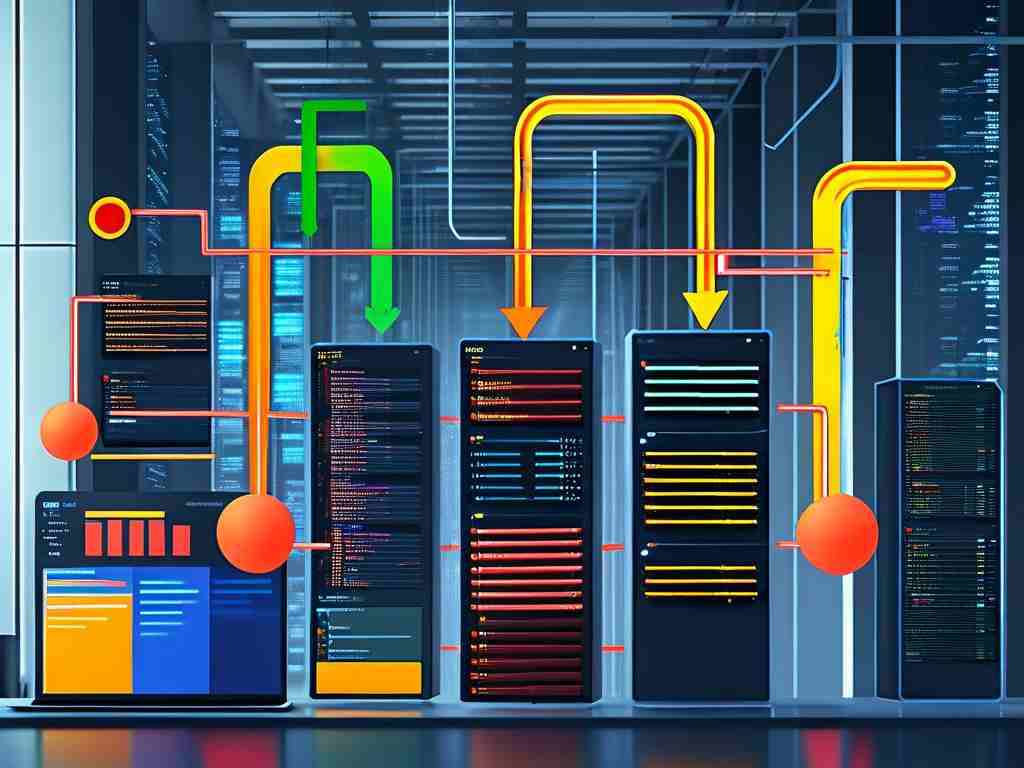

Performance optimization introduces additional layers of complexity. Techniques like vectorized query execution and columnar storage must be adapted for distributed environments. The diagram below illustrates how distributed query processing amplifies traditional database optimization challenges:

    [Client] --> [Query Planner] --> [Node 1: Data Shard A]
                                 |-> [Node 2: Data Shard B]
                                 |-> [Node 3: Data Shard C]

Each stage introduces potential bottlenecks requiring careful resource allocation and monitoring.
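The fan-out pattern in the diagram is often called scatter-gather. A minimal sketch, assuming three hypothetical in-memory shards in place of real nodes, shows how a planner can push an aggregation down to each shard and combine small partial results instead of shipping every row. The shard contents and function names are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical shard contents standing in for Node 1..3.
SHARDS = {
    "shard_a": [12.0, 7.5, 3.0],
    "shard_b": [20.0],
    "shard_c": [1.5, 6.0],
}


def partial_aggregate(rows):
    """Runs on a shard: reduce local rows to a (sum, count) pair."""
    return sum(rows), len(rows)


def distributed_average(shards):
    """Scatter the aggregate to every shard, then gather and merge."""
    with ThreadPoolExecutor(max_workers=max(1, len(shards))) as pool:
        partials = list(pool.map(partial_aggregate, shards.values()))
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count if count else None


average = distributed_average(SHARDS)
```

Each shard returns a sum and a count rather than its own average, because averaging per-shard averages gives the wrong answer when shards hold different numbers of rows.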

Security considerations further compound system complexity. Implementing consistent encryption across nodes, managing distributed authentication, and maintaining audit trails in fragmented environments present unique challenges. The recent OWASP Distributed Systems Security Guidelines emphasize the need for defense-in-depth strategies spanning multiple architectural layers.

While the complexity appears daunting, modern tooling significantly mitigates implementation challenges. Orchestration frameworks like Kubernetes facilitate containerized database deployments, while observability platforms (Prometheus, Grafana) provide crucial visibility into cluster health and performance. The key lies in aligning architectural choices with specific workload requirements: not all applications need extreme scalability, and some benefit more from simpler architectures.

Emerging trends continue reshaping the complexity landscape. Serverless database architectures and intelligent auto-sharding mechanisms promise to reduce operational burdens. However, as noted at the 2024 Database Engineering Symposium, these innovations shift complexity rather than eliminate it, moving it from infrastructure management to vendor lock-in considerations and advanced cost optimization strategies.

Ultimately, distributed database complexity proves both inevitable and manageable. Through careful technology selection, phased implementation, and continuous performance tuning, organizations can harness distributed architectures' power while maintaining operational stability. The complexity becomes not a barrier, but a spectrum of technical tradeoffs requiring informed navigation.