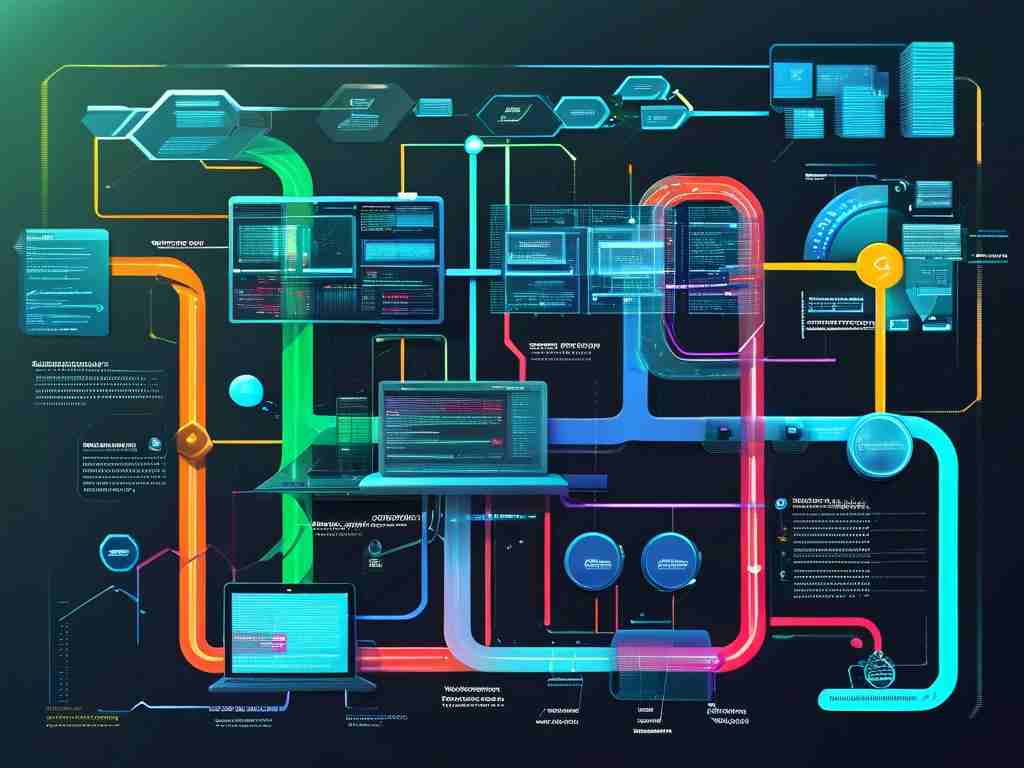

Load balancing is a critical component of modern computing systems. It distributes network traffic across multiple servers so that no single server becomes overwhelmed, which improves availability and reliability. A line diagram is a common way to visualize it, mapping how requests flow from clients to backend resources. In this article, we'll explore the fundamentals of load balancing, break down a typical line diagram, and provide practical insights for implementation.

At its core, a load balancer acts as a traffic cop, sitting between users and a group of servers. The line diagram for this setup usually starts with clients sending requests over the internet. These requests hit the load balancer first, which then routes them to available servers based on a predefined algorithm. A common algorithm is round-robin, which assigns requests to servers in a fixed rotation so that each server receives an equal share of traffic over time. Another is least connections, which sends each new request to the server with the fewest active connections. The diagram often includes arrows showing the path: clients → load balancer → server pool. It's a simple yet powerful visualization that highlights redundancy and scalability, since adding more servers can be depicted as new branches in the diagram.
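To make the two algorithms concrete, here is a minimal in-process sketch in Python. The class names `RoundRobinBalancer` and `LeastConnectionsBalancer` and the server names are hypothetical. Real load balancers such as Nginx and HAProxy implement these methods internally, with extras like weights and health checks that this sketch omits.

```python
import itertools


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order (hypothetical helper)."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Picks the server with the fewest in-flight requests (hypothetical helper)."""

    def __init__(self, servers):
        # Active connection count per server, all starting at zero.
        self.active = {s: 0 for s in servers}

    def acquire(self):
        # min() returns the first server among ties, so ties fall back to list order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when the request sent to `server` has finished.
        self.active[server] -= 1


if __name__ == "__main__":
    servers = ["backend1", "backend2", "backend3"]

    rr = RoundRobinBalancer(servers)
    print([rr.pick() for _ in range(5)])
    # prints ['backend1', 'backend2', 'backend3', 'backend1', 'backend2']

    lc = LeastConnectionsBalancer(servers)
    first = lc.acquire()   # backend1
    second = lc.acquire()  # backend2
    lc.release(first)      # backend1 is idle again
    print(lc.acquire())    # prints backend1
```

With least connections, the balancer has to be told when a request finishes (`release`). That bookkeeping is what lets it favor servers handling fewer or shorter requests, whereas round-robin ignores current load entirely.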

There are two main types of load balancers: hardware-based and software-based. Hardware load balancers are dedicated physical appliances optimized for high performance, often used in large enterprises to handle massive traffic volumes with minimal latency. Software load balancers, on the other hand, run on standard servers or virtual machines, which makes them cost-effective and flexible. Popular examples include Nginx and HAProxy, both of which are configured through text configuration files. Here's a basic Nginx configuration for load balancing:

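```nginx
# Minimal nginx.conf: round-robin load balancing across two backends.
events {}  # required top-level block when this is the whole nginx.conf

http {
    # Pool of backend servers; with no method specified, Nginx uses round-robin.
    upstream backend {
        server backend1.example.com;
        server backend2.example.com;
    }

    server {
        listen 80;

        location / {
            # Forward every request to the "backend" upstream pool.
            proxy_pass http://backend;
        }
    }
}
```

This configuration distributes requests across the two backend servers in round-robin order, Nginx's default balancing method, and shows how the line diagram translates into a real-world setup. In diagram terms, Nginx is the load balancer node: it intercepts each client request and forwards it to one server in the pool, so no single backend has to handle all of the traffic.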

Understanding this line diagram has practical benefits: it helps in optimizing resource usage, reducing downtime, and improving user experience. For example, if one server fails, the load balancer stops sending it traffic and redirects requests to the remaining servers, shown in the diagram as failover paths. This only works if the load balancer can detect the failure, which is typically done with health checks. Failover is crucial for e-commerce sites or cloud services where uptime is vital. The diagram can also include security elements such as firewalls or SSL/TLS termination at the load balancer, adding layers of protection.
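Failover can be sketched by extending round-robin to skip servers that are marked as down. This is a hypothetical illustration: `FailoverBalancer`, `mark_down`, and `mark_up` are invented names, and the sketch assumes some separate health-check process calls them. In open-source Nginx, a comparable effect comes from passive health checks configured with the `max_fails` and `fail_timeout` parameters on `server` lines in an `upstream` block.

```python
class FailoverBalancer:
    """Round-robin over servers, skipping any currently marked unhealthy.

    Health state is assumed to be set by an external (hypothetical)
    health checker via mark_down() and mark_up().
    """

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0  # index where the next search starts

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        # Try each server at most once, starting just after the last one used.
        n = len(self.servers)
        for offset in range(n):
            i = (self._next + offset) % n
            server = self.servers[i]
            if server in self.healthy:
                self._next = i + 1
                return server
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    lb = FailoverBalancer(["backend1", "backend2", "backend3"])
    lb.mark_down("backend2")  # simulate a failed health check
    print([lb.pick() for _ in range(4)])
    # prints ['backend1', 'backend3', 'backend1', 'backend3']
```

Because each search starts just past the last server used, the healthy servers still share traffic in rotation, and a recovered server rejoins the rotation as soon as `mark_up` is called.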

In practice, implementing load balancing involves choosing the right algorithm and setting up monitoring. Prometheus can collect metrics such as response times and error rates, and Grafana can chart them; these measurements show where the design captured in the diagram needs adjustment, for example adding servers or switching algorithms. As architectures evolve, the same diagram extends to microservices and containerized environments such as Kubernetes clusters, where traffic is distributed across pods.
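The two metrics mentioned above are simple to define. The sketch below, a hypothetical helper named `summarize`, aggregates request records of the assumed form `(backend, latency_ms, status_code)` into a per-backend request count, average latency, and error rate, counting HTTP 5xx responses as errors (also an assumption). In production you would typically export such measurements as Prometheus metrics rather than compute them by hand; this only shows what the numbers mean.

```python
from collections import defaultdict
from statistics import mean


def summarize(requests):
    """Aggregate (backend, latency_ms, status_code) records per backend.

    Returns {backend: {"requests": n, "avg_latency_ms": x, "error_rate": r}},
    treating 5xx status codes as errors (an assumption; adjust as needed).
    """
    by_backend = defaultdict(list)
    for backend, latency_ms, status in requests:
        by_backend[backend].append((latency_ms, status))

    summary = {}
    for backend, rows in by_backend.items():
        errors = sum(1 for _, status in rows if 500 <= status <= 599)
        summary[backend] = {
            "requests": len(rows),
            "avg_latency_ms": mean(lat for lat, _ in rows),
            "error_rate": errors / len(rows),
        }
    return summary


if __name__ == "__main__":
    records = [
        ("backend1", 100, 200),
        ("backend1", 300, 503),
        ("backend2", 50, 200),
        ("backend2", 70, 200),
    ]
    for backend, stats in summarize(records).items():
        print(backend, stats)
```

A backend whose error rate or latency stands out from the rest of the pool is a candidate for being taken out of rotation, which ties monitoring back to the failover paths in the diagram.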

To sum up, understanding the load balancing line diagram helps IT professionals build resilient systems. It works best as a living blueprint rather than a static image, updated as servers, algorithms, and monitoring change. By studying and applying these concepts, businesses can achieve scalability and fault tolerance in their distributed systems.