The rapid evolution of artificial intelligence has propelled robotics into a new era of innovation, with Lingxi Robotics emerging as a frontrunner in intelligent automation. At its core, Lingxi Robotics integrates advanced algorithms, sensory fusion, and adaptive learning frameworks to achieve human-like decision-making in dynamic environments. This article examines the technical foundations of these machines, covering their operational architecture and real-world applications.

Multimodal Perception Systems

A defining feature of Lingxi Robotics is its multimodal perception. Unlike traditional robots that rely on a single data source, Lingxi devices employ a hybrid sensor network combining LiDAR, high-resolution cameras, and tactile feedback modules. These sensors operate in parallel, and their spatial, visual, and haptic data are processed by a centralized neural processor. For instance, when navigating a cluttered warehouse, the robot simultaneously maps obstacles with LiDAR, identifies fragile items through computer vision, and adjusts grip strength based on tactile feedback from the material, all within milliseconds. This layered approach to input mimics biological sensory integration, enabling nuanced interactions with the environment.
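The article does not describe how the three channels are combined. As a minimal sketch, assuming hypothetical reading types and tuning constants (none of these names or numbers come from Lingxi), a simple rule-based fusion step can turn a LiDAR distance, a vision fragility flag, and a tactile slip signal into a single motion command. A learned neural fusion model would replace these hand-written rules in a real system.

```python
from dataclasses import dataclass

# Hypothetical tuning constants for illustration only; not Lingxi parameters.
MAX_SPEED_MPS = 1.5         # top travel speed in open space
SLOW_ZONE_M = 2.0           # start slowing when an obstacle is closer than this
BASE_GRIP_N = 20.0          # default grip force for sturdy items
FRAGILE_GRIP_N = 8.0        # gentler default grip for fragile items
SLIP_STEP_N = 2.0           # extra force added when slip is detected
MAX_GRIP_N = 30.0           # hard ceiling for sturdy items
FRAGILE_MAX_GRIP_N = 10.0   # hard ceiling for fragile items


@dataclass
class LidarReading:
    nearest_obstacle_m: float  # distance to the closest obstacle


@dataclass
class VisionReading:
    label: str
    fragile: bool  # classifier output: is the item fragile?


@dataclass
class TactileReading:
    slip_detected: bool  # fingertip sensors report the item slipping


@dataclass
class MotionCommand:
    speed_mps: float
    grip_force_n: float


def fuse_readings(lidar: LidarReading, vision: VisionReading,
                  tactile: TactileReading) -> MotionCommand:
    """Combine spatial, visual and haptic readings into one command."""
    # Spatial channel: scale speed linearly down to zero inside the slow zone.
    distance = max(lidar.nearest_obstacle_m, 0.0)
    speed = MAX_SPEED_MPS * min(distance / SLOW_ZONE_M, 1.0)

    # Visual channel: pick the default grip and its ceiling from fragility.
    if vision.fragile:
        grip, ceiling = FRAGILE_GRIP_N, FRAGILE_MAX_GRIP_N
    else:
        grip, ceiling = BASE_GRIP_N, MAX_GRIP_N

    # Haptic channel: tighten on slip, but never beyond the ceiling.
    if tactile.slip_detected:
        grip = min(grip + SLIP_STEP_N, ceiling)

    return MotionCommand(speed_mps=speed, grip_force_n=grip)
```

For example, `fuse_readings(LidarReading(1.0), VisionReading("glass", True), TactileReading(True))` halves the speed to 0.75 m/s and raises the grip to the fragile-item ceiling of 10 N. Keeping each channel's contribution in its own block makes it easy to trace which sensor drove which part of the command.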

Self-Optimizing Decision Engines

The decision-making framework of Lingxi Robotics combines reinforcement learning and symbolic reasoning in a proprietary blend. At startup, the system loads a baseline knowledge graph of more than 50,000 predefined scenarios, ranging from object-manipulation protocols to collision-avoidance strategies. During operation, a dual-loop architecture refines these models: a fast-reaction loop handles immediate tasks using precompiled rules, while a deep-learning loop analyzes historical performance data overnight to optimize future responses. The fast loop provides real-time responsiveness, and the slow loop provides long-term adaptability. In field tests at manufacturing plants, task efficiency improved by 34% after just two weeks of autonomous refinement.
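The dual-loop idea can be illustrated with a small sketch built around a hypothetical `DualLoopPolicy` class. The fast loop is a dictionary lookup from scenario to precompiled action; the slow loop is an offline pass over logged outcomes that switches each scenario to the action with the highest mean reward. This averaging step is a simple stand-in for the deep-learning loop described above, not Lingxi's method, and it assumes some exploration mechanism (not shown) occasionally tries alternative actions so that they appear in the log.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


class DualLoopPolicy:
    """Hypothetical two-speed policy: table lookup now, refinement later."""

    def __init__(self, rules: Dict[str, str], default_action: str = "stop"):
        # Fast-loop state: scenario -> precompiled action.
        self.rules: Dict[str, str] = dict(rules)
        self.default_action = default_action
        # Experience consumed by the slow loop: (scenario, action, reward).
        self.log: List[Tuple[str, str, float]] = []

    def act(self, scenario: str) -> str:
        # Fast loop: a constant-time lookup with no learning on the hot path.
        return self.rules.get(scenario, self.default_action)

    def record(self, scenario: str, action: str, reward: float) -> None:
        self.log.append((scenario, action, reward))

    def refine(self) -> None:
        # Slow loop (e.g. scheduled overnight): group rewards per
        # (scenario, action) pair and keep the best-scoring action.
        rewards: Dict[Tuple[str, str], List[float]] = defaultdict(list)
        for scenario, action, reward in self.log:
            rewards[(scenario, action)].append(reward)

        best: Dict[str, Tuple[str, float]] = {}
        for (scenario, action), values in rewards.items():
            avg = mean(values)
            if scenario not in best or avg > best[scenario][1]:
                best[scenario] = (action, avg)

        # Only actions that appear in the log compete for a scenario.
        for scenario, (action, _) in best.items():
            self.rules[scenario] = action
        self.log.clear()
```

The design keeps learning out of the time-critical path: `act` never reads the log, so its cost does not grow as experience accumulates, and all refinement happens in `refine`, which can run during idle periods.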

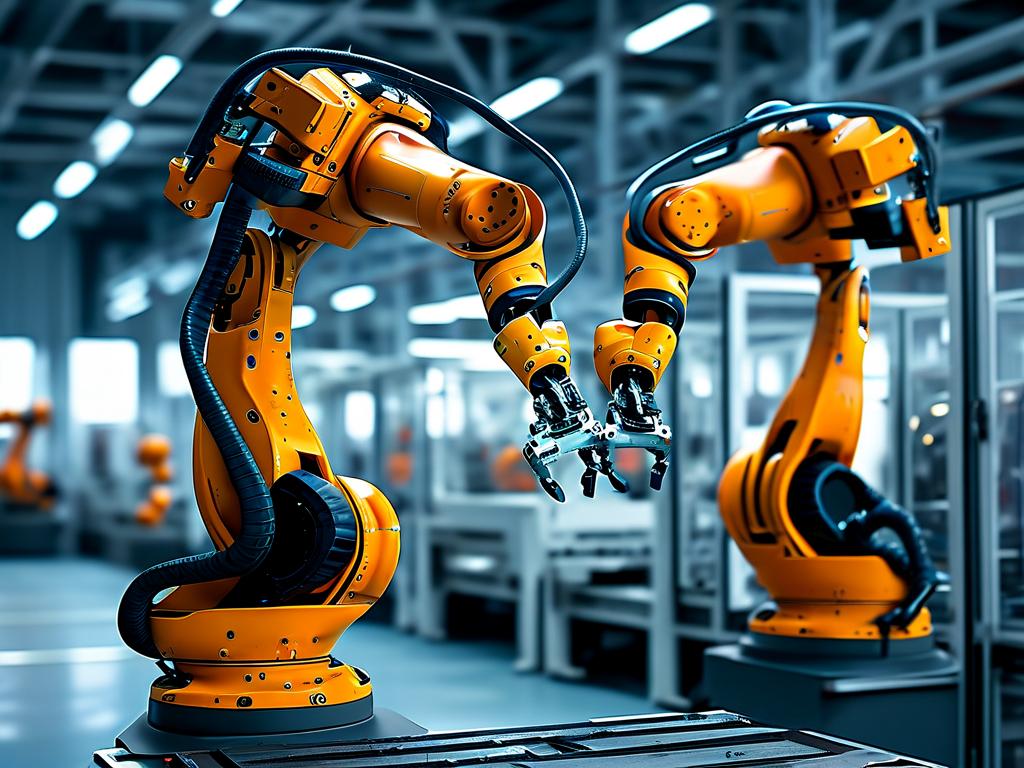

Distributed Edge Computing

To avoid the latency of cloud-dependent systems, Lingxi Robotics adopts an edge-centric computing model. Each robot functions as an independent node equipped with a dedicated tensor processing unit (TPU) capable of 15 tera-operations per second (TOPS). Latency-critical processes such as path planning and anomaly detection run locally, while cloud synchronization is reserved for non-time-sensitive work such as software patches and fleet-wide pattern recognition. This architecture improves operational reliability in low-connectivity environments and also mitigates cybersecurity risks by limiting how much data leaves the robot.
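A minimal sketch of the local-versus-cloud split, assuming a hypothetical routing rule: any task whose deadline is shorter than an assumed cloud round-trip time (150 ms here, an illustrative figure rather than a measured one) runs on the robot's own accelerator, and everything else is queued until a network link is available. The `EdgeNode` class and the task names are illustrative only.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional

# Hypothetical worst-case cloud round trip; not a Lingxi specification.
CLOUD_RTT_MS = 150.0


@dataclass
class Task:
    name: str
    deadline_ms: Optional[float]  # None means the task is not time-sensitive


class EdgeNode:
    def __init__(self, cloud_rtt_ms: float = CLOUD_RTT_MS):
        self.cloud_rtt_ms = cloud_rtt_ms
        self.local_log: List[str] = []           # tasks run on the local accelerator
        self.sync_queue: Deque[Task] = deque()   # tasks waiting for the next cloud sync

    def dispatch(self, task: Task) -> str:
        # A task that must finish faster than a cloud round trip has to stay local.
        if task.deadline_ms is not None and task.deadline_ms < self.cloud_rtt_ms:
            self.local_log.append(task.name)
            return "local"
        self.sync_queue.append(task)
        return "deferred"

    def sync(self, connected: bool) -> List[str]:
        # Flush deferred work only when a link exists; otherwise keep it queued.
        if not connected:
            return []
        sent = [task.name for task in self.sync_queue]
        self.sync_queue.clear()
        return sent
```

Because deferred work simply waits in the queue while `connected` is false, the robot keeps planning paths and detecting anomalies during an outage, which is the reliability property the paragraph describes.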

Cross-Domain Adaptability

The platform's modular design allows rapid deployment across industries without hardware overhauls. Through a patent-pending "skill container" system, domain-specific capabilities can be uploaded via encrypted SD cards. A medical variant might load surgical-assistance modules with sub-millimeter motion precision, while an agricultural version could activate crop-analysis algorithms and weather-resistant actuators. This plug-and-play functionality has been instrumental in Lingxi's expansion into 12 vertical markets, from precision farming to semiconductor assembly.
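The article does not document the skill-container format. The sketch below assumes a hypothetical JSON manifest protected by an HMAC-SHA256 tag and a shared device key, using only Python's standard `hmac`, `hashlib`, and `json` modules; `package_skill` and `SkillRegistry` are illustrative names. It shows integrity checking and plug-in registration only. It does not encrypt anything, so it covers just part of what an encrypted SD card would need to provide.

```python
import hashlib
import hmac
import json
from typing import Dict, Tuple

# Hypothetical shared key for illustration; a real device would keep keys
# in secure hardware rather than in source code.
DEVICE_KEY = b"example-device-key"


def package_skill(manifest: dict, key: bytes = DEVICE_KEY) -> Tuple[bytes, str]:
    """Serialize a skill manifest and attach an HMAC-SHA256 tag."""
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return payload, tag


class SkillRegistry:
    def __init__(self, key: bytes = DEVICE_KEY):
        self._key = key
        self.skills: Dict[str, dict] = {}  # skill name -> manifest

    def load(self, payload: bytes, tag: str) -> str:
        expected = hmac.new(self._key, payload, hashlib.sha256).hexdigest()
        # Constant-time comparison avoids leaking how much of the tag matched.
        if not hmac.compare_digest(expected, tag):
            raise ValueError("skill container failed integrity check")
        manifest = json.loads(payload.decode("utf-8"))
        self.skills[manifest["name"]] = manifest
        return manifest["name"]

    def unload(self, name: str) -> None:
        # Removing a skill is a registry operation; no hardware changes.
        self.skills.pop(name, None)
```

A production design would more likely use asymmetric signatures, so that each robot holds only a public verification key; the shared key here keeps the example short.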

Ethical Safeguards and Human Collaboration

To address concerns about autonomous systems, Lingxi embeds ethical constraints at the firmware level. A three-tier validation layer continuously checks actions against predefined ethical parameters, such as force limits during human-robot collaboration and emergency shutdown protocols for unauthorized operations. On automotive assembly lines, these safeguards let human workers and robots work side by side, with the robots automatically reducing speed when their sensors detect nearby personnel.
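The article does not say what the three tiers are. Purely as an illustration, the sketch assumes they are an authorization check, a force-limit check, and a speed limit that shrinks as a person gets closer, loosely in the spirit of speed-and-separation monitoring. The `validate` function, the `Action` type, and all thresholds are hypothetical placeholders, not certified safety values.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical thresholds for illustration; real limits come from a risk assessment.
MAX_FORCE_N = 100.0         # collaborative force limit
FULL_SPEED_MPS = 1.0        # speed allowed when nobody is nearby
STOP_DISTANCE_M = 0.5       # at or inside this distance the robot must stop
SLOWDOWN_DISTANCE_M = 2.0   # beyond this distance full speed is allowed


@dataclass
class Action:
    operator_authorized: bool
    commanded_force_n: float
    commanded_speed_mps: float


def validate(action: Action, nearest_person_m: float) -> Tuple[bool, float, str]:
    """Return (allowed, permitted_speed_mps, reason) after three checks."""
    # Tier 1: unauthorized operations trigger an emergency stop.
    if not action.operator_authorized:
        return False, 0.0, "unauthorized operation: emergency stop"

    # Tier 2: reject any action that exceeds the force limit.
    if action.commanded_force_n > MAX_FORCE_N:
        return False, 0.0, "force limit exceeded"

    # Tier 3: scale the permitted speed linearly with distance to the nearest person.
    if nearest_person_m <= STOP_DISTANCE_M:
        limit = 0.0
    elif nearest_person_m >= SLOWDOWN_DISTANCE_M:
        limit = FULL_SPEED_MPS
    else:
        fraction = (nearest_person_m - STOP_DISTANCE_M) / (
            SLOWDOWN_DISTANCE_M - STOP_DISTANCE_M
        )
        limit = FULL_SPEED_MPS * fraction

    return True, min(action.commanded_speed_mps, limit), "ok"
```

For example, with a person 1.25 m away the permitted speed is half of full speed, so a commanded 0.8 m/s is clamped to 0.5 m/s. Ordering the tiers from cheapest to most context-dependent means an unauthorized command is rejected before any sensor data is consulted.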

As Lingxi Robotics continues to refine its hybrid AI models, the boundaries between programmed machines and contextual intelligence grow increasingly blurred. With ongoing research in quantum-accelerated pattern matching and bio-inspired neural architectures, the next generation of these systems promises to redefine industrial automation while maintaining the delicate balance between machine autonomy and human oversight.