When working with computer hardware, understanding how memory is organized and how its capacity is calculated is critical for system optimization. A frequently asked question concerns "group count" (a term used loosely for bank groups, and sometimes for ranks or channels, although these are distinct levels of the memory hierarchy) and its role in memory capacity and performance. This article explores the arithmetic behind memory group calculations while offering practical insights for developers and enthusiasts.

Fundamentals of Memory Architecture

Modern memory modules, such as DDR4 or DDR5 DIMMs, organize data hierarchically. The DRAM devices on each module contain multiple "banks," which are further divided into rows and columns. A "group" is a logical subdivision of these banks that enables parallel data access. Total capacity depends on three factors: the number of groups, the number of banks per group, and the size of each bank (rows × columns × bits per cell).

Group Count Calculation Formula

The formula to calculate total memory capacity is:

Capacity = Groups × Banks per Group × Rows × Columns × Cell Size

For example, a hypothetical configuration with 8 groups, 4 banks per group, 16,384 rows, 1,024 columns, and an 8-bit cell size would have:

8 × 4 × 16,384 × 1,024 × 8 bits = 4,294,967,296 bits = 512 MiB

(Real DDR4 devices have at most 4 bank groups; 8 bank groups appear in DDR5.) This modular approach allows manufacturers to scale capacity efficiently by adjusting group configurations.
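The capacity formula above can be checked with a few lines of Python. This is a minimal sketch; the function names `capacity_bits` and `to_mib` are illustrative, not part of any library, and the inputs are the example values from the text.

```python
def capacity_bits(groups: int, banks_per_group: int, rows: int,
                  columns: int, cell_bits: int) -> int:
    """Total capacity in bits: the product of all five factors."""
    return groups * banks_per_group * rows * columns * cell_bits


def to_mib(bits: int) -> float:
    """Convert bits to mebibytes (1 MiB = 2**20 bytes)."""
    return bits / 8 / 2**20


total_bits = capacity_bits(groups=8, banks_per_group=4,
                           rows=16_384, columns=1_024, cell_bits=8)
print(total_bits)          # 4294967296
print(to_mib(total_bits))  # 512.0
```

Because every factor multiplies the result, doubling any one of them (for instance, going from 4 to 8 groups) doubles the capacity.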

Impact on Performance

Group count directly affects latency and bandwidth. More groups enable simultaneous access to different memory sections, reducing contention. However, increasing groups without balancing bus width and clock speed may lead to diminishing returns. For instance, servers handling massive datasets often prioritize higher group counts for parallelism, while gaming PCs might optimize for faster clock rates with fewer groups.

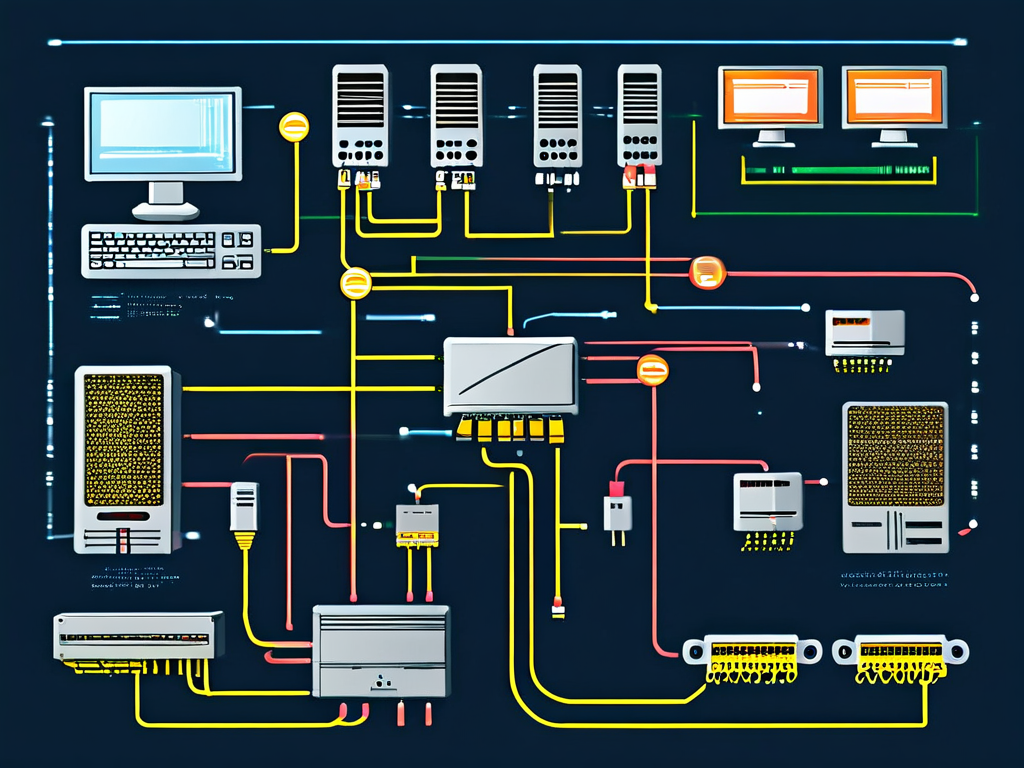

Hardware Implementation

Memory controllers use group addressing schemes to manage data flow. In a simplified picture, a controller might split a 64-bit request into two 32-bit operations across two groups, although real controllers more commonly interleave whole bursts or cache lines. Many BIOS/UEFI interfaces expose settings for adjusting group interleaving, a technique that distributes data across groups for load balancing. Overclockers often tweak these parameters to push hardware limits, though improper configurations can cause instability.
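To make the idea of interleaving concrete, here is a toy address-mapping sketch. It assumes a 64-byte access granularity and 4 groups, and it maps consecutive lines to groups round-robin. The constants and the function `group_for_address` are hypothetical; real memory controllers use vendor-specific mappings, often with address hashing.

```python
LINE_BYTES = 64   # assumed access granularity (one cache line)
NUM_GROUPS = 4    # assumed group count for this illustration


def group_for_address(addr: int) -> int:
    """Map a byte address to a group by round-robin over line indices."""
    line_index = addr // LINE_BYTES
    return line_index % NUM_GROUPS


# Eight consecutive lines land on groups 0..3 in rotation.
addresses = [i * LINE_BYTES for i in range(8)]
print([group_for_address(a) for a in addresses])  # [0, 1, 2, 3, 0, 1, 2, 3]
```

With this mapping, a sequential read never touches the same group twice in a row, which is the load-balancing effect interleaving aims for.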

Case Study: Dual-Channel vs. Single-Channel

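A related example of parallelism is dual-channel memory mode. Strictly speaking, a channel is an independent 64-bit path to the memory controller rather than a bank group, but the principle is similar: with two channels active, the effective bus width doubles from 64 bits to 128 bits. This doesn't alter total capacity but significantly boosts peak data transfer rates. Calculations show:

Bandwidth = Transfer Rate × Bus Width × Channels

At 3,200 MT/s (DDR4-3200, whose I/O clock runs at 1,600 MHz with two transfers per cycle), a dual-channel setup delivers a theoretical peak of:

3.2 GT/s × 64 bits × 2 = 409.6 Gb/s (51.2 GB/s)

compared to 204.8 Gb/s (25.6 GB/s) in single-channel mode.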
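The bandwidth arithmetic can be reproduced as follows. This sketch treats the 3,200 figure as a transfer rate in MT/s, which is an assumption about what the text intends, and it computes theoretical peak values only. The function name `peak_bandwidth_gbit_s` is illustrative.

```python
def peak_bandwidth_gbit_s(transfer_rate_mt_s: int, bus_width_bits: int,
                          channels: int) -> float:
    """Theoretical peak bandwidth in gigabits per second."""
    mbit_per_s = transfer_rate_mt_s * bus_width_bits * channels
    return mbit_per_s / 1000


single = peak_bandwidth_gbit_s(3200, 64, channels=1)
dual = peak_bandwidth_gbit_s(3200, 64, channels=2)

print(single, single / 8)  # 204.8 Gb/s, 25.6 GB/s
print(dual, dual / 8)      # 409.6 Gb/s, 51.2 GB/s
```

Sustained throughput in practice is lower than these peaks because of refresh cycles, row activations, and read/write turnarounds.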

Troubleshooting Group Conflicts

System crashes or BSOD errors sometimes stem from group configuration mismatches. When mixing memory modules with different group counts, the memory controller defaults to the lowest common denominator. For optimal performance, use identical modules and verify group settings via tools like CPU-Z or HWiNFO.
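The "lowest common denominator" behavior can be modeled with a short check. This is a simplified sketch: the `ModuleSpec` class and its fields are hypothetical, and taking the minimum of each field is an assumption standing in for the controller's actual negotiation, which relies on each module's SPD data and may behave differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleSpec:
    """Hypothetical summary of one installed module."""
    slot: str
    groups: int
    speed_mt_s: int


def is_matched(modules: list[ModuleSpec]) -> bool:
    """True when every module reports the same group count and speed."""
    return len({(m.groups, m.speed_mt_s) for m in modules}) <= 1


def common_settings(modules: list[ModuleSpec]) -> dict[str, int]:
    """Model the fallback as the minimum of each field (an assumption)."""
    if not modules:
        raise ValueError("no modules installed")
    return {
        "groups": min(m.groups for m in modules),
        "speed_mt_s": min(m.speed_mt_s for m in modules),
    }


installed = [
    ModuleSpec("DIMM_A1", groups=4, speed_mt_s=3200),
    ModuleSpec("DIMM_B1", groups=2, speed_mt_s=2666),
]
print(is_matched(installed))       # False
print(common_settings(installed))  # {'groups': 2, 'speed_mt_s': 2666}
```

In practice the values would be read from a tool such as CPU-Z or HWiNFO rather than typed in by hand.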

Future Trends

Emerging technologies like 3D-stacked memory and HBM (High Bandwidth Memory) are redefining group architectures. These designs stack memory dies vertically and connect them through very wide interfaces, greatly increasing bandwidth and density. As AI workloads demand faster memory access, adaptive group allocation algorithms are becoming a key research area.

In summary, mastering group count calculations empowers users to make informed decisions when upgrading or troubleshooting systems. Whether optimizing a workstation for 4K video editing or configuring a server farm, recognizing the interplay between groups, banks, and bus protocols ensures efficient memory utilization.