At the heart of every software application lies a hidden translator – the compiler. This unsung hero bridges the gap between human-readable programming languages and the binary instructions computers understand. Let’s break down compiler principles using everyday analogies while exploring its core phases through practical code snippets.

Imagine writing a letter in French for a friend who only reads English. A compiler acts like a meticulous translator who not only converts words but also ensures the translated message preserves its original meaning. In programming terms, this process transforms high-level code into machine code (or, for languages like Python and Java, into bytecode for a virtual machine) through six phases: lexical analysis, syntax analysis, semantic analysis, intermediate code generation, optimization, and target code generation.

Phase 1: Lexical Analysis – The Word Detective

The compiler’s first task is scanning source code character by character, much like how our eyes scan a sentence. This phase, called lexical analysis, groups characters into meaningful "tokens." Consider this Python snippet:

x = 42 + y * 3

The lexer identifies tokens:

- `x` (variable)

- `=` (operator)

- `42` (integer)

- `+` (operator)

- `y` (variable)

- `*` (operator)

- `3` (integer)

Modern compilers use finite automata – decision-making flowcharts – to efficiently categorize these tokens. This stage filters out whitespace and comments, focusing only on code essentials.
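To make the idea concrete, here is a minimal lexer sketch for the snippet above. It uses Python's `re` module, whose regular expressions describe the same kind of token patterns a finite automaton would recognize (although `re` itself is a backtracking engine, not a true automaton). The token names and the `tokenize` helper are illustrative assumptions, not part of any standard.

```python
import re

# Token categories, tried in order. The names are illustrative only.
TOKEN_SPEC = [
    ("INTEGER",    r"\d+"),
    ("IDENTIFIER", r"[A-Za-z_]\w*"),
    ("OPERATOR",   r"[=+\-*/]"),
    ("SKIP",       r"\s+"),   # whitespace is discarded
    ("MISMATCH",   r"."),     # anything else is a lexical error
]
MASTER_PATTERN = re.compile(
    "|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC)
)

def tokenize(source):
    """Group the characters of `source` into (category, text) tokens."""
    tokens = []
    for match in MASTER_PATTERN.finditer(source):
        kind, text = match.lastgroup, match.group()
        if kind == "SKIP":
            continue
        if kind == "MISMATCH":
            raise SyntaxError(f"unexpected character {text!r}")
        tokens.append((kind, text))
    return tokens

for token in tokenize("x = 42 + y * 3"):
    print(token)
```

Running it prints one `(category, text)` pair per token, in source order, with the whitespace already filtered out.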

Phase 2: Syntax Analysis – The Grammar Police

Once tokens are identified, the parser (syntax analyzer) checks structural validity using grammar rules. Think of it as verifying sentence structure in language translation. The parser builds an abstract syntax tree (AST), a hierarchical representation of code structure.

For our example `x = 42 + y * 3`, the shape of the AST encodes operator precedence: the multiplication `y * 3` sits beneath the addition node, so it is evaluated before the addition. Syntax errors like missing semicolons or mismatched parentheses get flagged here.
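Python ships its own parser in the standard `ast` module, so the tree for our example can be inspected directly. The sketch below assumes Python 3.9 or later (for the `indent` argument of `ast.dump`); the variable names are just for illustration.

```python
import ast

tree = ast.parse("x = 42 + y * 3")
assignment = tree.body[0]       # the ast.Assign node
expression = assignment.value   # the right-hand side, an ast.BinOp

# The top-level operation is the addition; the multiplication is nested
# inside its right operand, which is how the tree records precedence.
top_op = type(expression.op).__name__
nested_op = type(expression.right.op).__name__
print("top-level operator:", top_op)
print("nested operator:   ", nested_op)

# Full tree, for a closer look at the structure.
print(ast.dump(assignment, indent=2))
```

The nested operator is evaluated first because a node's operands must be computed before the node itself.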

Phase 3: Semantic Analysis – The Logic Validator

This phase examines meaning rather than structure. Using symbol tables (like a program’s dictionary), the compiler checks variable declarations, data types, and scope validity. In our code snippet, it verifies:

- `y` is declared before use

- `42` and `3` are compatible with `y`'s data type

- The `=` operator applies to compatible types

Type mismatch errors ("adding strings to numbers") surface here. The AST gets annotated with semantic information, creating an enhanced "decorated syntax tree."
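As a rough illustration of the "declared before use" check, the sketch below keeps a symbol table (here just a set of names) and walks assignments in order. Python itself defers this check to run time, so this mimics what a statically checked language's compiler would do. The `check_names` function and its error wording are hypothetical, and it only understands plain assignments.

```python
import ast

def check_names(source, predeclared=()):
    """Report names that are read before any assignment defines them."""
    symbol_table = set(predeclared)
    errors = []
    for statement in ast.parse(source).body:
        if not isinstance(statement, ast.Assign):
            continue  # this sketch only understands plain assignments
        # Check every name read on the right-hand side first...
        for node in ast.walk(statement.value):
            if isinstance(node, ast.Name) and node.id not in symbol_table:
                errors.append(
                    f"line {node.lineno}: '{node.id}' used before declaration"
                )
        # ...then record the names defined on the left-hand side.
        for target in statement.targets:
            if isinstance(target, ast.Name):
                symbol_table.add(target.id)
    return errors

print(check_names("x = 42 + y * 3"))           # y was never declared
print(check_names("y = 5\nx = 42 + y * 3"))    # y is declared first
```

A real semantic analyzer would also store each symbol's type and scope in the table, which is what makes type-compatibility checks possible.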

Phase 4: Intermediate Code Generation – The Universal Translator

Compilers often create platform-agnostic intermediate code (like three-address code) for optimization flexibility. Our example might become:

t1 = y * 3

t2 = 42 + t1

x = t2

This step resembles translating French to Esperanto before converting to English – it creates a standardized representation that’s easier to optimize and adapt for different processors.
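One way to produce three-address code like the listing above is a post-order walk of the syntax tree: lower both operands first, then emit one instruction that stores the result in a fresh temporary. The sketch below reuses Python's `ast` module as the front-end; `to_three_address` and the `t1`, `t2` naming scheme are assumptions made for this example, and only a single assignment with `+`, `-`, `*`, `/` is handled.

```python
import ast
import itertools

OPERATOR_SYMBOLS = {ast.Add: "+", ast.Sub: "-", ast.Mult: "*", ast.Div: "/"}

def to_three_address(source):
    """Lower one assignment statement to a list of three-address instructions."""
    assignment = ast.parse(source).body[0]
    temp_ids = itertools.count(1)
    instructions = []

    def lower(node):
        # Leaves (constants and variables) are used directly as operands.
        if isinstance(node, ast.Constant):
            return str(node.value)
        if isinstance(node, ast.Name):
            return node.id
        if isinstance(node, ast.BinOp):
            left = lower(node.left)
            right = lower(node.right)
            temp = f"t{next(temp_ids)}"
            symbol = OPERATOR_SYMBOLS[type(node.op)]
            instructions.append(f"{temp} = {left} {symbol} {right}")
            return temp
        raise NotImplementedError(type(node).__name__)

    result = lower(assignment.value)
    instructions.append(f"{assignment.targets[0].id} = {result}")
    return instructions

for line in to_three_address("x = 42 + y * 3"):
    print(line)
```

Because operands are lowered before their parent, the multiplication is emitted before the addition that consumes it.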

Phase 5: Optimization – The Efficiency Expert

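The optimizer refines intermediate code without altering its behavior. Our running example has no constant subexpression to simplify, but common techniques include:

- Constant folding: Pre-calculate constant expressions such as `2 * 3` to `6` at compile time

- Dead code elimination: Remove code whose results are never used, such as unused variables

- Loop unrolling: Speed up repetitive operations by repeating the loop body to reduce loop overhead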

Optimization levels vary between compilers. Debug builds often minimize optimizations for easier troubleshooting, while release builds prioritize performance.
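Constant folding is the easiest of these to try at home. The sketch below folds integer arithmetic on a Python syntax tree using `ast.NodeTransformer`; it assumes Python 3.9 or later for `ast.unparse`, and `ConstantFolder` and `fold` are names invented for this example rather than anything a production compiler exposes.

```python
import ast
import operator

FOLDABLE = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul}

class ConstantFolder(ast.NodeTransformer):
    """Replace integer-only binary operations with their computed value."""

    def visit_BinOp(self, node):
        self.generic_visit(node)  # fold the operands first
        left, right = node.left, node.right
        if (type(node.op) in FOLDABLE
                and isinstance(left, ast.Constant) and isinstance(left.value, int)
                and isinstance(right, ast.Constant) and isinstance(right.value, int)):
            value = FOLDABLE[type(node.op)](left.value, right.value)
            return ast.copy_location(ast.Constant(value=value), node)
        return node

def fold(source):
    """Return `source` with constant integer subexpressions pre-computed."""
    tree = ConstantFolder().visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))

print(fold("x = y + 2 * 3"))    # 2 * 3 can be computed ahead of time
print(fold("x = 42 + y * 3"))   # nothing to fold: y is unknown until run time
```

The second call returns its input unchanged, since neither operation has two constant operands.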

Phase 6: Target Code Generation – The Machine Whisperer

The final stage produces architecture-specific machine code. For an x86 processor, our assignment might compile to:

mov eax, [y]

imul eax, 3

add eax, 42

mov [x], eax

This phase handles memory allocation, register selection, and instruction scheduling. Modern compilers like GCC or LLVM support multiple targets through modular back-ends.
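To show why register selection matters, here is a deliberately naive code generator that turns three-address instructions into x86-style assembly text. It is a sketch under strong assumptions: every variable and temporary lives in memory, `eax` is the only register used, and the `generate` and `operand` helpers are hypothetical. The output is meant for reading, not for feeding to an assembler.

```python
import re

MNEMONICS = {"+": "add", "-": "sub", "*": "imul"}
BINARY = re.compile(r"(\w+) = (\w+) ([+\-*]) (\w+)$")
COPY = re.compile(r"(\w+) = (\w+)$")

def operand(name):
    """Integer literals are immediates; everything else is a memory location."""
    return name if name.isdigit() else f"[{name}]"

def generate(three_address_code):
    """Translate three-address instructions one at a time, always through eax."""
    assembly = []
    for instruction in three_address_code:
        binary = BINARY.match(instruction)
        copy = COPY.match(instruction)
        if binary:
            dest, left, op, right = binary.groups()
            assembly.append(f"mov eax, {operand(left)}")
            assembly.append(f"{MNEMONICS[op]} eax, {operand(right)}")
        elif copy:
            dest, left = copy.groups()
            assembly.append(f"mov eax, {operand(left)}")
        else:
            raise ValueError(f"unsupported instruction: {instruction!r}")
        assembly.append(f"mov {operand(dest)}, eax")  # store the result
    return assembly

for line in generate(["t1 = y * 3", "t2 = 42 + t1", "x = t2"]):
    print(line)
```

This version emits eight instructions because each temporary is stored to memory and reloaded. A back-end that keeps `t1` and `t2` in a register can shrink that to the four-instruction sequence shown above.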

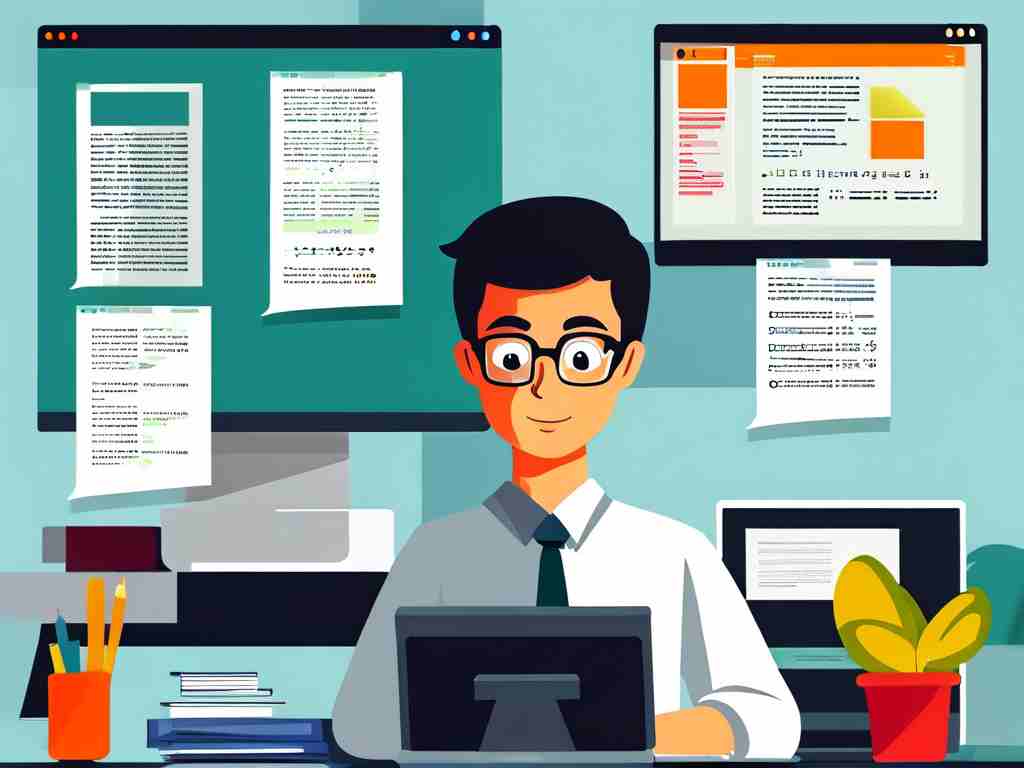

Real-World Compiler Design

Contemporary compilers use layered architectures:

- Front-end: Language-specific (handles C++, Rust, etc.)

- Middle-end: Language-agnostic optimizations

- Back-end: Hardware-specific code generation

The LLVM project exemplifies this modular approach, allowing developers to mix programming languages and target architectures.

Debugging Insights

Understanding compiler phases helps diagnose errors:

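- Lexical errors: Illegal characters or unterminated string literals (a misspelled keyword usually lexes as an ordinary identifier and surfaces later as a syntax error)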

- Syntax errors: Missing brackets

- Semantic errors: Type mismatches

- Logical errors: Survive compilation but cause runtime issues
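The categories above can be told apart by when they are reported. The sketch below uses Python's built-in `compile` and `exec` to classify a snippet; `stage_of_failure` is a hypothetical helper. Because Python is dynamically typed, type mismatches show up at run time here, whereas a statically typed language would reject them during compilation.

```python
def stage_of_failure(source):
    """Say whether `source` fails when compiled, when run, or not at all."""
    try:
        code = compile(source, "<demo>", "exec")
    except SyntaxError as error:
        return f"compile time: {error.msg}"
    try:
        exec(code, {})
    except Exception as error:
        return f"run time: {type(error).__name__}"
    return "no error reported"

# Mismatched parenthesis: rejected by the parser.
print(stage_of_failure("x = (42 + 3"))

# Adding a string to a number: a type error, caught at run time in Python.
print(stage_of_failure("x = 42 + 'y'"))

# Logical error: the average of two numbers divided by 3 instead of 2.
# Nothing complains, and the result is simply wrong.
print(stage_of_failure("average = (2 + 4) / 3"))
```

The exact wording of the syntax error message varies between Python versions, so treat it as informational.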

Next time you write code, remember the compiler’s multi-stage journey – from parsing your instructions to crafting precise machine conversations. This complex translation process enables developers to focus on solving problems rather than memorizing binary patterns.

To experiment with compiler phases hands-on, try these tools:

- Lex/Yacc for building custom parsers

- Godbolt Compiler Explorer to view assembly output

- Python’s `ast` module for syntax tree visualization

Whether you’re coding in JavaScript or C++, compilers work tirelessly behind the scenes to turn your abstract ideas into executable reality. By understanding these fundamentals, developers can write more efficient code and better troubleshoot mysterious compilation errors.