Robotic dance companions are among the most visible examples of robotics entering the performing arts. These machines, which can mirror human movements or execute choreographed routines autonomously, rely on a blend of hardware engineering and algorithmic intelligence. This article examines the core principles behind the technology, its implementation challenges, and its future potential.

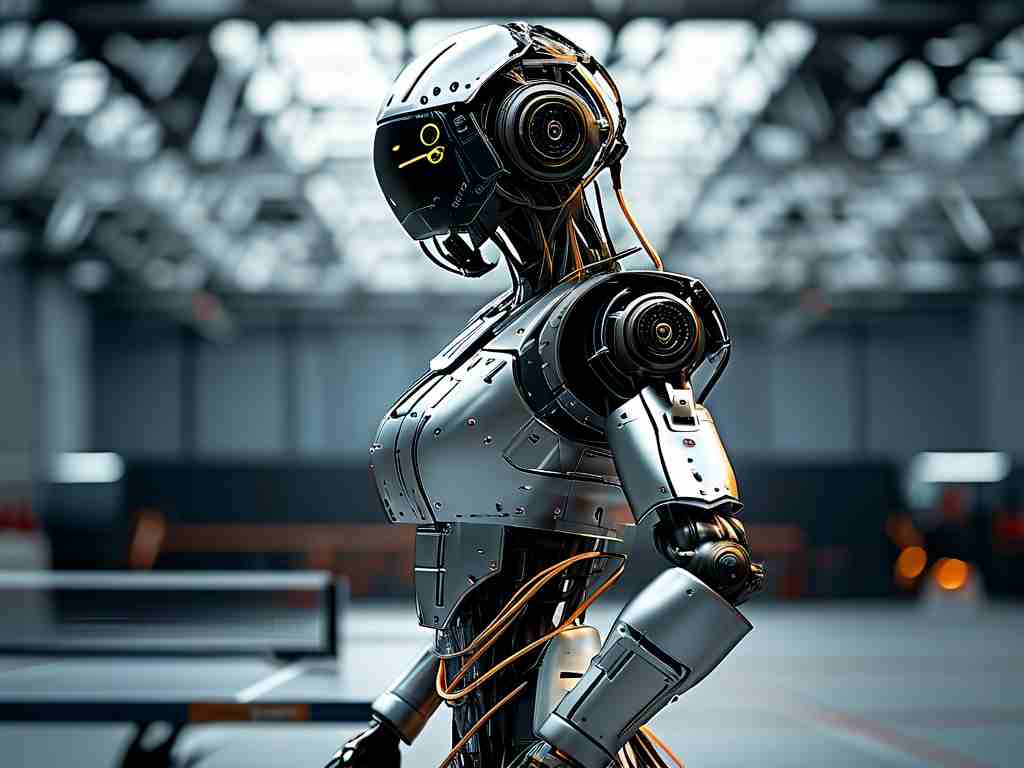

Foundation of Motion Control

At the heart of robotic dance technology lie motion control systems. Servo motors with high-precision encoders enable fluid limb articulation, while hydraulic actuators supply the torque needed for dynamic movements. Modern systems use hybrid architectures that combine pre-programmed trajectories with real-time feedback corrections. Boston Dynamics' "Atlas" robot, for instance, shows how inertial measurement units (IMUs) and force-torque sensors keep a humanoid balanced through complex spins and jumps.
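The hybrid idea of a pre-programmed trajectory plus real-time correction can be illustrated for a single joint. This is a minimal sketch, not Atlas's controller: it assumes a unit-inertia joint model, uses a minimum-jerk profile as the "pre-programmed" trajectory, and uses hand-picked PD gains (`kp`, `kd`). The function names `min_jerk` and `track` are hypothetical.

```python
def min_jerk(q0: float, q1: float, T: float, t: float) -> tuple[float, float, float]:
    """Minimum-jerk position, velocity and acceleration moving q0 -> q1 over T seconds."""
    s = min(max(t / T, 0.0), 1.0)  # normalized time, held at the endpoints
    d = q1 - q0
    pos = q0 + d * (10 * s**3 - 15 * s**4 + 6 * s**5)
    vel = d * (30 * s**2 - 60 * s**3 + 30 * s**4) / T
    acc = d * (60 * s - 180 * s**2 + 120 * s**3) / T**2
    return pos, vel, acc


def track(q0: float, q1: float, T: float, kp: float = 400.0, kd: float = 40.0,
          dt: float = 0.001, disturbance: float = 0.0) -> float:
    """Simulate one joint following the trajectory; return its final position.

    Assumption: the joint is a unit-inertia double integrator (q'' = u + disturbance),
    where `disturbance` is a constant unmodeled load the controller does not know about.
    """
    q, qd = q0, 0.0
    steps = int(round(T / dt)) + 500  # run 0.5 s past the end so the joint settles
    for i in range(steps):
        q_ref, qd_ref, qdd_ref = min_jerk(q0, q1, T, i * dt)
        # Feedforward (pre-programmed acceleration) plus PD feedback (real-time correction).
        u = qdd_ref + kp * (q_ref - q) + kd * (qd_ref - qd)
        qd += (u + disturbance) * dt  # semi-implicit Euler integration
        q += qd * dt
    return q
```

With no disturbance the joint lands on its target. With a constant load the PD term holds the joint close but leaves a steady offset of `disturbance / kp` (here 0.01 rad for a load of -4.0), which is why practical controllers often add an integral term or a disturbance observer.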

Synchronization Protocols

Tight synchronization between humans and robots requires millisecond-level timing accuracy. Wireless protocols such as 5G NR (New Radio) support low-latency data exchange, allowing robots to react to a dancer's movements within 3 to 5 ms. Machine learning models trained on motion-capture datasets play a central role: recurrent neural networks (RNNs) analyze temporal movement patterns to predict and adapt to a human partner's next move. A notable example is Sony's "DanceBot," which uses LiDAR and depth cameras to map its environment 120 times per second.
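Prediction matters because any latency means the robot is always reacting to where the partner *was*. The sketch below swaps in a much simpler predictor than an RNN, constant-velocity extrapolation, purely to show the latency-compensation idea. It borrows the 120 Hz rate and a 4 ms latency from the figures above; the sinusoidal partner motion and the names `Sample`, `predict` and `mean_abs_errors` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    t: float  # timestamp in seconds
    x: float  # one coordinate of the partner's tracked joint (hypothetical units)


def predict(history: list[Sample], latency_s: float) -> float:
    """Constant-velocity extrapolation of the newest sample latency_s seconds ahead."""
    if len(history) < 2:
        return history[-1].x  # not enough data to estimate velocity
    prev, last = history[-2], history[-1]
    velocity = (last.x - prev.x) / (last.t - prev.t)
    return last.x + velocity * latency_s


def mean_abs_errors(latency_s: float, rate_hz: float = 120.0,
                    duration_s: float = 2.0, motion_hz: float = 1.0) -> tuple[float, float]:
    """Compare 'use the last sample' against extrapolation on a sinusoidal partner motion."""
    dt = 1.0 / rate_hz
    w = 2.0 * math.pi * motion_hz
    samples = [Sample(i * dt, math.sin(w * i * dt)) for i in range(int(duration_s * rate_hz))]
    naive_err = pred_err = 0.0
    for k in range(1, len(samples)):
        truth = math.sin(w * (samples[k].t + latency_s))  # where the partner actually is
        naive_err += abs(samples[k].x - truth)
        pred_err += abs(predict(samples[: k + 1], latency_s) - truth)
    n = len(samples) - 1
    return naive_err / n, pred_err / n
```

A learned model earns its place when motion is not locally linear (a sudden change of direction at the end of a phrase, for example); extrapolation like this is only a baseline to beat.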

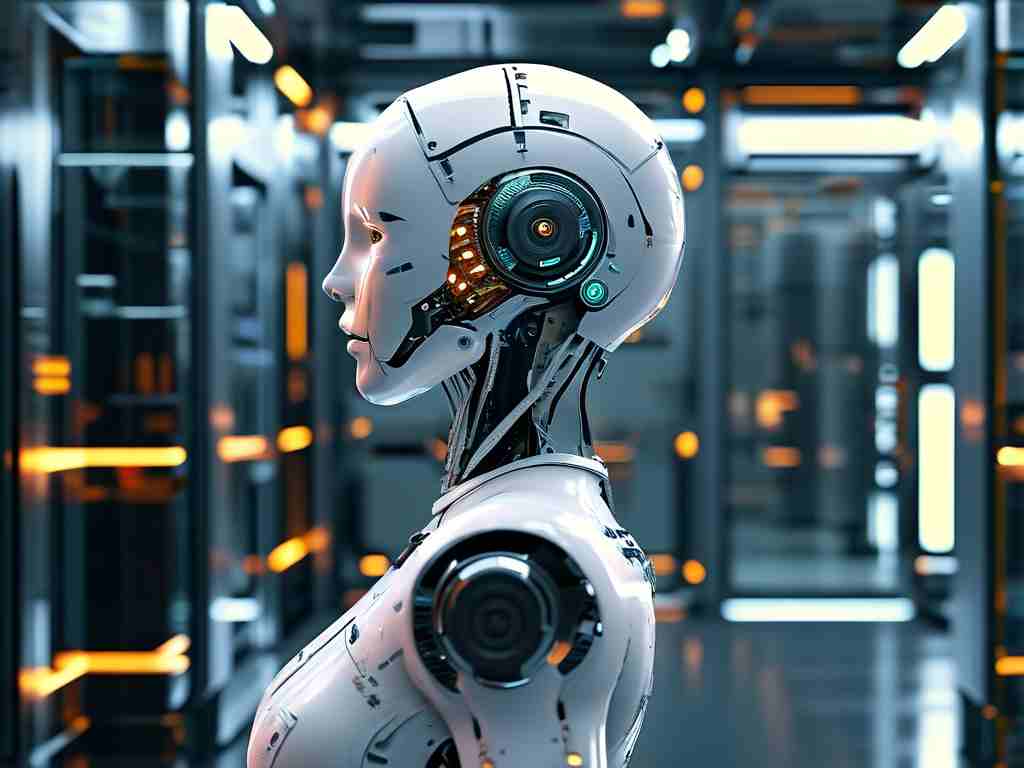

Human-Robot Interaction Design

Safety remains paramount whenever robots and dancers share physical contact. Compliant actuators with variable stiffness mechanisms let robots switch between rigid precision and soft responsiveness. Tactile feedback systems built on piezoelectric arrays let robots "sense" contact pressure, so they can detect touch early and limit impact forces. The EU-funded COMAN+ project shows how impedance control algorithms let a robot adjust its limb resistance when it is touched unexpectedly during a performance.
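Impedance control makes a limb behave like a virtual spring and damper around its intended path, F = K(x_des - x) + D(v_des - v), so "switching to soft" amounts to lowering K and D. The sketch below is an illustration, not COMAN+ code: the stiffness and damping values, the kPa pressure thresholds and the linear blend between the two gain sets are all assumptions chosen to show the mechanism.

```python
from dataclasses import dataclass


@dataclass
class ImpedanceGains:
    stiffness: float  # K, in N/m
    damping: float    # D, in N*s/m


# Hypothetical gain sets for "rigid precision" and "soft responsiveness".
RIGID = ImpedanceGains(stiffness=2000.0, damping=90.0)
SOFT = ImpedanceGains(stiffness=150.0, damping=25.0)


def blend_gains(pressure_kpa: float, p_soft_start: float = 2.0,
                p_fully_soft: float = 8.0) -> ImpedanceGains:
    """Interpolate from RIGID to SOFT as tactile pressure rises (thresholds assumed)."""
    alpha = (pressure_kpa - p_soft_start) / (p_fully_soft - p_soft_start)
    alpha = min(max(alpha, 0.0), 1.0)  # 0 = fully rigid, 1 = fully soft
    return ImpedanceGains(
        stiffness=(1 - alpha) * RIGID.stiffness + alpha * SOFT.stiffness,
        damping=(1 - alpha) * RIGID.damping + alpha * SOFT.damping,
    )


def impedance_force(x_des: float, x: float, v_des: float, v: float,
                    gains: ImpedanceGains) -> float:
    """Restoring force the limb applies toward its planned position and velocity."""
    return gains.stiffness * (x_des - x) + gains.damping * (v_des - v)
```

For a limb pushed 2 cm off its path and held still, the rigid gains push back with about 40 N, while the soft gains push back with about 3 N. Blending the gains, rather than switching them instantly, avoids a sudden force jump at the moment of contact.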

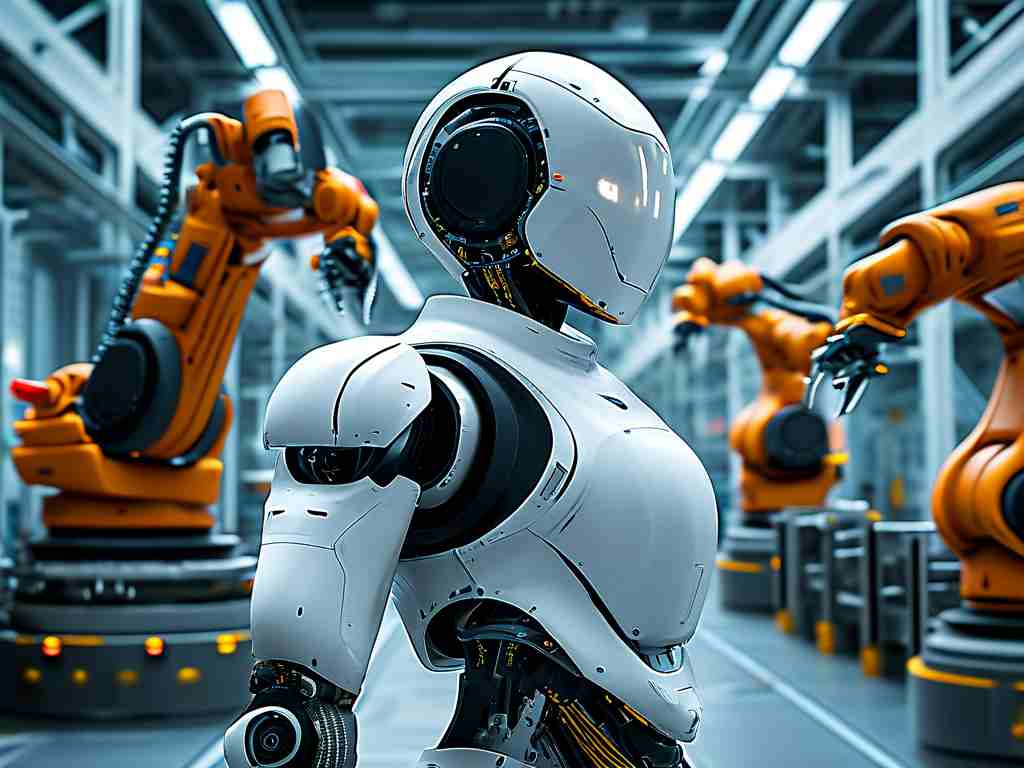

Challenges in Dynamic Environments

Unstructured performance spaces present unique obstacles. Multi-sensor fusion techniques combine visual data (from RGB-D cameras), acoustic cues (beat detection), and spatial awareness (ultrasonic sensors) to maintain coordination. Researchers at ETH Zurich recently demonstrated a quadruped robot that adapts its routine when audience members enter the dance area, using simultaneous localization and mapping (SLAM) technology.
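A simple way to see how multi-sensor fusion works is inverse-variance weighting: each sensor's estimate of the same quantity (say, a dancer's distance from the robot) counts in proportion to how trustworthy it is, and the fused estimate is more certain than any single input. This is the static, one-shot case of the update step inside a Kalman filter, not the ETH Zurich system; the readings and variances in the usage note are hypothetical.

```python
from typing import Optional


def fuse(estimates: list[tuple[Optional[float], float]]) -> tuple[float, float]:
    """Inverse-variance fusion of (value, variance) pairs from several sensors.

    A value of None marks a sensor that dropped out this cycle (e.g. an occluded camera)
    and is skipped. Returns the fused value and its variance.
    """
    valid = [(x, var) for x, var in estimates if x is not None and var > 0]
    if not valid:
        raise ValueError("no valid sensor readings")
    weight_sum = sum(1.0 / var for _, var in valid)
    mean = sum(x / var for x, var in valid) / weight_sum
    return mean, 1.0 / weight_sum
```

For example, an RGB-D reading of 1.0 m with variance 0.01 m² and an ultrasonic reading of 1.2 m with variance 0.04 m² fuse to 1.04 m with variance 0.008 m². If the camera is blocked, the ultrasonic reading is used alone rather than the whole estimate failing.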

Energy Management Constraints

High-energy maneuvers demand innovative power solutions. Hybrid battery-supercapacitor systems provide burst energy for jumps while maintaining efficiency during slower sequences. MIT's "RoboWaltz" prototype employs regenerative braking in joint motors, recovering 18% of expended energy during downward movements. Thermal management through phase-change materials prevents overheating during extended performances.
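The battery-supercapacitor split and regenerative braking can be sketched as a per-timestep power allocation. This is a simplified illustration, not the RoboWaltz design: it assumes the battery covers demand up to a fixed power limit, the supercapacitor covers peaks above it, and negative demand (a joint braking on the way down) is stored in the supercapacitor at a fixed efficiency. All numbers, and the name `HybridPack`, are hypothetical; the 18% figure from the text is not derived here.

```python
from dataclasses import dataclass


@dataclass
class HybridPack:
    battery_limit_w: float   # battery power above which the supercap takes over (assumed)
    cap_energy_j: float      # energy currently stored in the supercapacitor
    cap_max_j: float         # supercapacitor capacity
    regen_efficiency: float  # fraction of braking energy actually stored (assumed)

    def step(self, demand_w: float, dt: float) -> tuple[float, float]:
        """Allocate one timestep of joint power; return (battery_w, cap_w).

        Positive demand = motoring; negative demand = regenerative braking.
        A negative cap_w means the supercapacitor is charging.
        """
        if demand_w < 0:
            headroom_j = self.cap_max_j - self.cap_energy_j
            stored_j = min(headroom_j, -demand_w * self.regen_efficiency * dt)
            self.cap_energy_j += stored_j
            return 0.0, -stored_j / dt
        # The supercap supplies only the peak above the battery limit, if it has the energy.
        cap_w = min(max(0.0, demand_w - self.battery_limit_w), self.cap_energy_j / dt)
        self.cap_energy_j = max(0.0, self.cap_energy_j - cap_w * dt)
        # If the supercap is depleted, the battery is forced above its limit.
        return demand_w - cap_w, cap_w
```

A jump demanding 300 W for 0.1 s against a 200 W battery limit draws 100 W from the supercapacitor; a following 0.1 s descent braking at 100 W puts about 6 J back at 60% efficiency. This sketch never recharges the supercapacitor from the battery during quiet passages, which a real pack manager would likely do.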

Future Directions

Emerging technologies promise to extend robotic dance capabilities further. Quantum inertial sensors could improve motion-tracking accuracy by 40%, while neuromorphic computing chips may cut processing latency to sub-millisecond levels. Integrating emotional AI, such as Affectiva's emotion recognition algorithms, could let robots interpret and respond to a dancer's expressive cues rather than to physical movement alone.

As this field evolves, ethical considerations regarding artistic authorship and human-robot creative collaboration will come to the forefront. Nevertheless, the fusion of robotics and dance continues to push boundaries, offering new possibilities for entertainment, therapeutic applications, and the very definition of performance art.