Memory bit width is the number of data bits a memory system can transfer at once, and it directly affects performance in computing devices such as PCs and servers. Calculating it is mostly a matter of understanding the physical hardware configuration rather than applying complex formulas. The bit width follows from the design of memory modules such as DIMMs and the DRAM chips mounted on them. For instance, a standard non-ECC DDR4 module has a 64-bit data width, which can be built from eight DRAM chips that each contribute 8 bits. In other words, the total bit width of a rank equals the number of chips multiplied by the bit width per chip.
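The chip-count rule can be written as a one-line calculation. The following is a minimal sketch, not a vendor tool: the function name `module_bit_width` and its parameters are hypothetical names chosen for illustration, and it assumes all chips in the rank have the same width.

```python
def module_bit_width(chip_count: int, bits_per_chip: int) -> int:
    """Data width of one rank: number of DRAM chips times the width of each chip.

    Hypothetical helper for illustration; assumes identical chips.
    """
    if chip_count <= 0 or bits_per_chip <= 0:
        raise ValueError("chip_count and bits_per_chip must be positive")
    return chip_count * bits_per_chip


# Eight chips contributing 8 bits each, as in the DDR4 example
print(module_bit_width(8, 8))   # 64
# Sixteen chips contributing 4 bits each reach the same width
print(module_bit_width(16, 4))  # 64
```

Both layouts arrive at the same 64-bit data width, which is why the module width alone does not reveal how many chips are on the board.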

Several factors influence this calculation, including the memory type (SDRAM or one of the DDR generations) and the motherboard's bus architecture. In dual-channel or quad-channel setups, modules on separate channels work in parallel, doubling or quadrupling the effective bit width. For example, two 64-bit modules in dual-channel mode yield a 128-bit width, which doubles the peak data throughput. In practice the memory controller, which coordinates data flow between the CPU and RAM, sets the upper limit: the total width can only grow to as many channels as the controller provides.
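The multi-channel scaling described above can be sketched in a few lines. The function name `effective_bit_width` is a hypothetical name for illustration, and the sketch assumes every channel is populated with a module of the same width.

```python
def effective_bit_width(module_width_bits: int, channels: int) -> int:
    """Total width when `channels` identical modules operate in parallel.

    Hypothetical helper; assumes one equally wide module per channel.
    """
    if module_width_bits <= 0 or channels < 1:
        raise ValueError("module width must be positive and channels >= 1")
    return module_width_bits * channels


# Single-, dual-, and quad-channel configurations of 64-bit modules
for name, channels in (("single", 1), ("dual", 2), ("quad", 4)):
    print(f"{name}-channel: {effective_bit_width(64, channels)} bits")
```

The dual-channel row reproduces the 128-bit figure from the example above.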

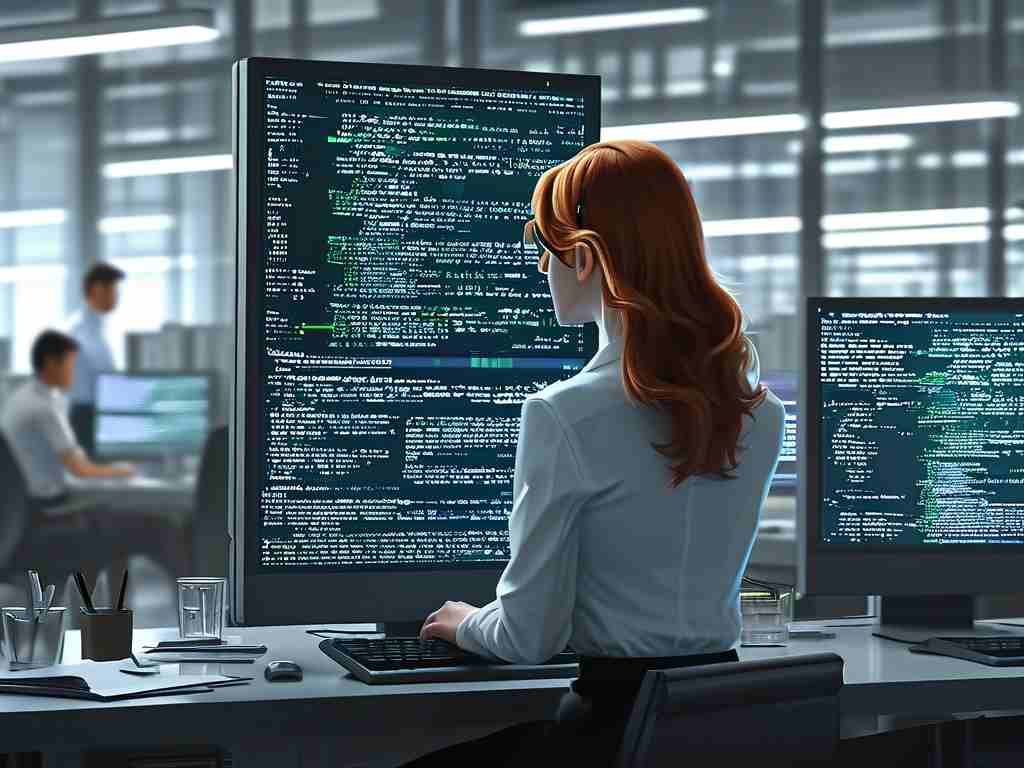

To illustrate, consider a practical case. Suppose a system uses DDR5 modules with a 64-bit data width each. Configured in a quad-channel arrangement with four modules, the total bit width becomes 256 bits. This feeds directly into the bandwidth calculation: peak bandwidth (in bytes per second) equals the effective transfer rate (in transfers per second) multiplied by the bit width, divided by 8. For DDR memory the transfer rate is twice the I/O clock frequency, because data moves on both clock edges. Here's a simple Python code snippet demonstrating this:

```python
def calculate_bandwidth(frequency_hz, bit_width):
    # frequency_hz is the effective transfer rate in transfers per second
    bandwidth_bytes_per_sec = frequency_hz * bit_width / 8
    return bandwidth_bytes_per_sec

# Example values: 3.2 GT/s effective transfer rate and 256-bit width
result = calculate_bandwidth(3200000000, 256)
print(f"Bandwidth: {result} bytes/sec")
```
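When only the I/O clock is known, the transfer rate has to be derived first. The sketch below assumes double-data-rate signaling (two transfers per clock cycle) and reports the theoretical peak in decimal gigabytes per second. The function name `ddr_peak_bandwidth_gb_per_s` is a hypothetical name for illustration.

```python
def ddr_peak_bandwidth_gb_per_s(io_clock_hz: float, bit_width: int) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes).

    Hypothetical helper; assumes DDR signaling, i.e. two transfers per clock.
    """
    transfers_per_sec = 2 * io_clock_hz   # data moves on both clock edges
    bytes_per_transfer = bit_width / 8    # 8 bits per byte
    return transfers_per_sec * bytes_per_transfer / 1e9


# A 1.6 GHz I/O clock gives 3.2 GT/s; on a single 64-bit channel:
print(ddr_peak_bandwidth_gb_per_s(1.6e9, 64))  # 25.6
```

This is an upper bound. Sustained bandwidth is lower in practice because of refresh cycles, command overhead, and access patterns.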

Beyond basic math, engineers must account for physical constraints such as PCB trace layout and signal integrity. Every additional data bit needs its own routed trace, so a poor board design can limit the achievable bit width or the speed at which it runs, creating bottlenecks. In bandwidth-hungry applications such as gaming or AI workloads, a wider bus raises the peak throughput available to the processor. As technology evolves, stacked memory such as HBM pushes bit widths much higher, with interfaces of 1024 bits per stack or more, but the core calculation still comes down to summing the widths of the hardware operating in parallel. Understanding this makes it easier to choose and tune a memory configuration for peak performance.